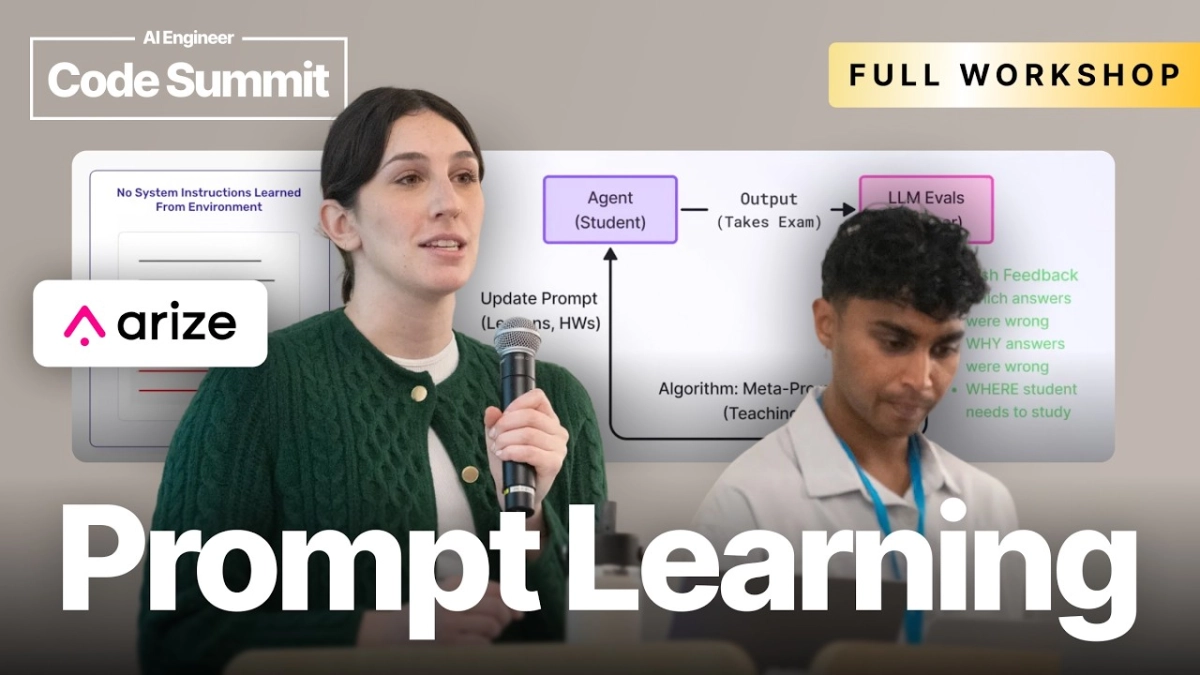

The transition from a proof-of-concept large language model to a reliable, production-grade application hinges entirely on solving one problem: prompt degradation. Models deployed in the real world are subject to concept drift, adversarial inputs, and shifting user expectations, rendering even the most carefully engineered initial prompts obsolete within weeks. This fundamental fragility necessitates a shift in thinking, moving LLM operations away from static deployment and toward continuous, adaptive iteration. This concept, the Prompt Learning Loop, was the central thesis articulated by Arize experts SallyAnn DeLucia and Fuad Ali.

DeLucia and Ali, both key contributors at Arize, spoke after Aparna Dhinakaran’s talk and detailed the infrastructure required to move beyond static prompt engineering. They focused specifically on establishing a systematic methodology for continuously refining AI behavior based on real-world usage and feedback. For founders and engineering leaders building on the LLM stack, the learning loop is not an optional feature; it is the core mechanism of governance and performance optimization, elevating prompt engineering from an art form to a measurable engineering discipline.

The cost of repeated prompt failures is not trivial. Latency spikes and poor response quality directly erode user trust and increase operational expenditure.

The Prompt Learning Loop, as described, follows a three-stage architectural pattern: Observe, Evaluate, and Improve. The Observation stage mandates comprehensive data logging. This goes far beyond logging the final output; it requires capturing the input prompt, the model version, all retrieved context (in RAG architectures), intermediate chain steps, and critical metadata like latency and cost. This comprehensive logging stack provides the necessary telemetry to understand when and where performance begins to falter.
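As a rough illustration of what the Observation stage might capture, the sketch below defines one log record per model call and serializes it as a JSON line. The class name `TraceRecord`, the field names, and the `to_json_line` helper are assumptions made for this example; they are not an Arize schema or anything shown in the talk.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List


@dataclass
class TraceRecord:
    """One logged LLM call. Field names are illustrative, not a standard schema."""
    prompt: str
    output: str
    model_version: str
    # Documents returned by the retriever (empty when the app does not use RAG).
    retrieved_context: List[str] = field(default_factory=list)
    # Intermediate chain steps, e.g. {"step": "rewrite_query", "output": "..."}.
    chain_steps: List[Dict[str, Any]] = field(default_factory=list)
    latency_ms: float = 0.0
    cost_usd: float = 0.0
    timestamp: float = field(default_factory=time.time)


def to_json_line(record: TraceRecord) -> str:
    """Serialize a record as one JSON line, ready to append to a log file."""
    return json.dumps(asdict(record), sort_keys=True)


record = TraceRecord(
    prompt="What is our refund policy?",
    output="Refunds are available within 30 days.",
    model_version="example-model-v1",
    retrieved_context=["policy.md#refunds"],
    latency_ms=412.0,
    cost_usd=0.0008,
)
print(to_json_line(record))
```

Keeping the retrieved context and chain steps in the same record as the final output is what later makes it possible to tell a retrieval problem from a prompt problem.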

The Evaluation stage presents the greatest technical challenge because traditional machine learning metrics—like accuracy or F1 score—rarely apply directly to generative outputs. Success must be defined by subjective criteria such as tone, safety, relevance, and adherence to specific brand guidelines. SallyAnn DeLucia emphasized the complexity of this initial definition: "You need to figure out what good looks like, and then you need to figure out how to measure that, and that is often the hardest part." This measurement often requires hybrid evaluation methods, combining automated LLM-as-a-Judge techniques with structured, human-in-the-loop (HITL) feedback.
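One way to picture hybrid evaluation is a function that prefers a human score when one exists and otherwise falls back to an automated judge, flagging borderline judge scores for human review. This is a minimal sketch under stated assumptions: `stub_judge` is a placeholder standing in for a real LLM-as-a-Judge call, and the `review_band` thresholds are arbitrary values chosen for the example.

```python
from typing import Callable, Dict, Optional, Tuple

# A judge maps (prompt, output) to a score in [0, 1].
Judge = Callable[[str, str], float]


def stub_judge(prompt: str, output: str) -> float:
    """Placeholder for an LLM-as-a-Judge call; uses a crude length heuristic."""
    if not output.strip():
        return 0.0
    return 0.5 if len(output) < 20 else 0.9


def evaluate(
    prompt: str,
    output: str,
    judge: Judge,
    human_score: Optional[float] = None,
    review_band: Tuple[float, float] = (0.4, 0.6),
) -> Dict[str, object]:
    """Combine automated and human evaluation for a single response."""
    # A human verdict, when available, takes precedence over the judge.
    if human_score is not None:
        return {"score": human_score, "source": "human", "needs_review": False}
    score = judge(prompt, output)
    low, high = review_band
    # Borderline automated scores are routed to a human reviewer.
    return {"score": score, "source": "judge", "needs_review": low <= score <= high}


print(evaluate("Summarize the ticket.", "Too short", stub_judge))
```

Routing only the uncertain middle band to people keeps the human labeling budget focused on the cases where the automated judge is least trustworthy.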

The quality of this feedback loop determines the velocity of improvement. A simple binary rating, thumbs up or thumbs down, provides too little diagnostic information to debug a failure. As DeLucia put it: "A thumbs-down tells me nothing about why it failed. We need high-quality, structured labels to isolate the root cause." The system must encourage human evaluators to categorize the failure mode: was it hallucination, poor context retrieval, a safety violation, or a style deviation? Only with this granular labeling can engineers pinpoint whether the root issue is the prompt structure, the underlying foundation model, or the retrieval mechanism itself.
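The failure categories named above can be encoded as a fixed label set so that feedback becomes countable rather than free-form. The sketch below is a hypothetical illustration: the `FailureMode` enum, the `FeedbackLabel` record, and the `failure_breakdown` helper are names invented for this example.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List, Tuple


class FailureMode(Enum):
    """The four failure categories mentioned in the text."""
    HALLUCINATION = "hallucination"
    POOR_RETRIEVAL = "poor_context_retrieval"
    SAFETY_VIOLATION = "safety_violation"
    STYLE_DEVIATION = "style_deviation"


@dataclass(frozen=True)
class FeedbackLabel:
    """A structured label attached to one logged response."""
    trace_id: str
    failure_mode: FailureMode
    note: str = ""


def failure_breakdown(labels: Iterable[FeedbackLabel]) -> List[Tuple[FailureMode, int]]:
    """Count labels per failure mode, most frequent first."""
    counts = Counter(label.failure_mode for label in labels)
    return counts.most_common()


labels = [
    FeedbackLabel("t1", FailureMode.POOR_RETRIEVAL, "wrong document returned"),
    FeedbackLabel("t2", FailureMode.HALLUCINATION),
    FeedbackLabel("t3", FailureMode.POOR_RETRIEVAL),
]
for mode, count in failure_breakdown(labels):
    print(f"{mode.value}: {count}")
```

A breakdown dominated by one category is a hint about where to look first, although mapping a category to a specific component still takes engineering judgment.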

This structured feedback feeds directly into the Improvement stage, where the system closes the loop by triggering targeted interventions. These interventions can range from modifying parameters or context to entirely revising the prompt itself. Fuad Ali highlighted the operational reality: "If you don't have a learning loop, you are essentially deploying a static system that is guaranteed to degrade over time." A static system inherently assumes that the environment and user behavior remain constant, an assumption that LLM production environments quickly invalidate. Because the prompt itself keeps changing under this loop, the key requirement is version control.
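A simple guard for the Improvement stage is to score a revised prompt against the current one on the same evaluation set and promote it only if it improves by some margin. The sketch below assumes the scores already exist (for example from the evaluation step); `should_promote` and the `min_gain` threshold are hypothetical names and values, not a method described by the speakers.

```python
from statistics import mean
from typing import Sequence


def should_promote(
    baseline_scores: Sequence[float],
    candidate_scores: Sequence[float],
    min_gain: float = 0.0,
) -> bool:
    """Return True if the candidate prompt beats the baseline by more than min_gain.

    Both sequences must hold scores for the same evaluation examples,
    in the same order, so that the comparison is like for like.
    """
    if not baseline_scores or len(baseline_scores) != len(candidate_scores):
        raise ValueError("score lists must be non-empty and the same length")
    return mean(candidate_scores) - mean(baseline_scores) > min_gain


baseline = [0.5, 0.7, 0.6]
candidate = [0.8, 0.9, 0.7]
print(should_promote(baseline, candidate, min_gain=0.05))
```

Comparing means is the crudest possible gate; with small evaluation sets, a per-example comparison or a significance test would give a more reliable signal.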

To manage this constant state of flux, the speakers strongly advocated for treating prompt assets with the same rigor applied to production code. This means prompt versioning, testing, and deployment must be integrated into the MLOps pipeline, rather than existing as isolated scripts managed by a single prompt engineer. They argued that for serious enterprise applications, this governance is non-negotiable. "Treating your prompts like code, versioning them, and having a prompt registry is absolutely non-negotiable for production reliability," they stated, underscoring the shift in required infrastructure.
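To make "treating prompts like code" concrete, here is a minimal in-memory sketch of a prompt registry that assigns version numbers, skips duplicate registrations, and tracks which version is live. The `PromptRegistry` class and its methods are assumptions made for illustration; a production registry would add persistent storage, access control, and an audit trail.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str
    checksum: str


class PromptRegistry:
    """In-memory registry: append-only history plus a pointer to the live version."""

    def __init__(self) -> None:
        self._history: Dict[str, List[PromptVersion]] = {}
        self._live: Dict[str, int] = {}

    def register(self, name: str, template: str) -> PromptVersion:
        """Store a template as the next version; reuse the latest if unchanged."""
        history = self._history.setdefault(name, [])
        checksum = hashlib.sha256(template.encode("utf-8")).hexdigest()
        if history and history[-1].checksum == checksum:
            return history[-1]
        entry = PromptVersion(name, len(history) + 1, template, checksum)
        history.append(entry)
        return entry

    def promote(self, name: str, version: int) -> None:
        """Mark a version as live. Promoting an older version is a rollback."""
        if not 1 <= version <= len(self._history.get(name, [])):
            raise KeyError(f"no version {version} for prompt {name!r}")
        self._live[name] = version

    def live(self, name: str) -> PromptVersion:
        """Return the version currently served in production."""
        return self._history[name][self._live[name] - 1]


registry = PromptRegistry()
registry.register("support", "You are a helpful support agent.")
v2 = registry.register("support", "You are a helpful, concise support agent.")
registry.promote("support", v2.version)
print(registry.live("support").version)
```

Because history is append-only and the live version is just a pointer, rolling back after a bad release is a single `promote` call rather than a hunt through old scripts.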

For VCs evaluating early-stage AI infrastructure plays, the presence of a robust, automated prompt learning loop is increasingly becoming a critical diligence item. It separates companies that understand the maintenance cost of generative AI from those treating LLMs as simple APIs. The system must not only detect performance decay but also automatically surface the highest-leverage opportunities for prompt optimization, thereby maximizing the return on investment for human labeling efforts.

Furthermore, the concept of the learning loop extends beyond mere performance to encompass safety and governance. By continuously monitoring the distribution of generated responses against safety guardrails, companies can proactively detect and mitigate harmful or toxic outputs as well as prompt injection attempts. This continuous evaluation methodology transforms compliance from a periodic audit requirement into a real-time, automated process. The ability to demonstrate a controlled, measured approach to prompt iteration and failure recovery is fast becoming a competitive moat in the rapidly maturing landscape of applied generative AI.
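Continuous safety monitoring can be approximated by tracking the share of recent responses that tripped a guardrail and alerting when that share crosses a threshold. The sketch below assumes an upstream guardrail check already produces a boolean per response; `ViolationRateMonitor`, the window size, and the threshold are illustrative choices, not recommended values.

```python
from collections import deque
from typing import Deque


class ViolationRateMonitor:
    """Tracks the guardrail violation rate over the most recent responses."""

    def __init__(self, window: int = 100, threshold: float = 0.05) -> None:
        # deque with maxlen drops the oldest flag once the window is full.
        self._flags: Deque[bool] = deque(maxlen=window)
        self.threshold = threshold

    def rate(self) -> float:
        """Fraction of responses in the window that violated a guardrail."""
        if not self._flags:
            return 0.0
        return sum(self._flags) / len(self._flags)

    def record(self, violated: bool) -> bool:
        """Log one response; return True if the rate now exceeds the threshold."""
        self._flags.append(violated)
        return self.rate() > self.threshold


monitor = ViolationRateMonitor(window=4, threshold=0.25)
for violated in [False, False, True, True]:
    alert = monitor.record(violated)
print(monitor.rate(), alert)
```

A sliding window reacts to recent behavior rather than lifetime averages, which matters when a new prompt version or a wave of adversarial inputs changes the output distribution suddenly.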