Positron AI, founded by industry veterans Thomas Sohmers and Mitesh Agrawal, is challenging the conventional wisdom of AI hardware design. The company argues that memory bandwidth, not raw compute power, has become the primary bottleneck for large language model inference. Sohmers and Agrawal laid out this argument on the Latent Space podcast, and it underpins their architectural approach to accelerating AI, which they say will make inference significantly more efficient and cost-effective.

Thomas Sohmers, Co-founder and CTO, brings a background in semiconductor startups: he taped out his first chip at 19 and later served as Principal Hardware Architect at Lambda Labs. Mitesh Agrawal, CEO, spent nearly a decade at Lambda Labs, driving growth from inception to a half-billion-dollar annual run rate with a focus on cloud operations and data center infrastructure. Their combined experience revealed a fundamental disconnect between prevailing hardware design and the actual demands of modern AI models.

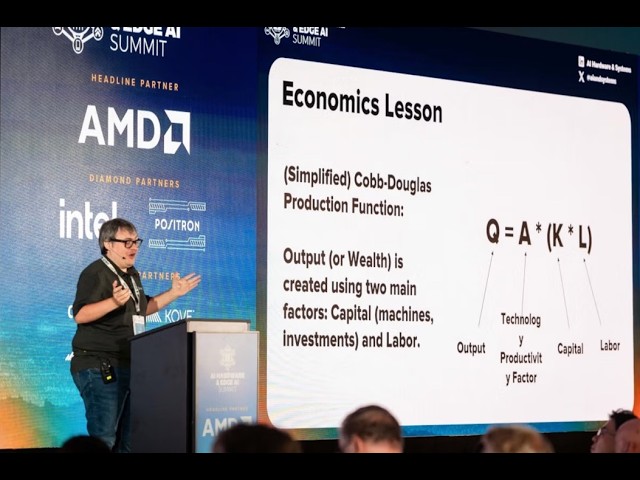

The core of Positron AI’s thesis lies in the shift from traditional Convolutional Neural Networks (CNNs), which are largely compute-bound, to transformer models, whose inference is largely memory-bound. As Sohmers explains, while CNNs might require "500 to a thousand FLOPS for every byte that actually needs to be moved," transformer inference operates on a "one-to-one ratio of FLOPS to bytes that have to be moved." At that ratio, compute units spend most of their time waiting on memory, which leaves GPU architectures optimized for compute poorly matched to most transformer inference workloads.
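The FLOPs-per-byte contrast Sohmers describes can be illustrated with a simple roofline-style estimate, where attainable throughput is the smaller of peak compute and memory bandwidth times arithmetic intensity. The following is a minimal sketch, not Positron's own analysis: the accelerator figures (`PEAK` and `BW`) are hypothetical round numbers chosen for illustration, and only the two intensity values come from the quote above.

```python
def attainable_flops(peak_flops, mem_bandwidth, intensity):
    """Roofline estimate: throughput is capped either by peak compute or by
    how fast memory can feed the compute units (bandwidth * FLOPs per byte)."""
    return min(peak_flops, mem_bandwidth * intensity)


def is_memory_bound(peak_flops, mem_bandwidth, intensity):
    """True when memory traffic, not compute, sets the ceiling."""
    return mem_bandwidth * intensity < peak_flops


# Hypothetical accelerator (assumed values, not any real product's specs).
PEAK = 1000e12  # 1000 TFLOP/s of peak compute
BW = 3e12       # 3 TB/s of memory bandwidth

# Intensities taken from the quoted figures: ~500 FLOPs/byte vs ~1 FLOP/byte.
cnn = attainable_flops(PEAK, BW, 500)
llm = attainable_flops(PEAK, BW, 1)

print(f"CNN-like workload:         {cnn / PEAK:.1%} of peak compute usable")
print(f"Transformer-like workload: {llm / PEAK:.1%} of peak compute usable")
```

Under these assumed numbers, the high-intensity workload can saturate the compute units, while the one-to-one workload is limited entirely by memory bandwidth and leaves almost all of the peak FLOPs unused.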

Positron AI addresses this by prioritizing memory bandwidth and capacity in its architecture, diverging from competitors who continue to chase higher FLOPS. Its current FPGA-based cards are available today. The company claims they are "70% faster than NVIDIA [H100], 1/3 the power, 1/2 the price" for Llama 2-70B inference, and that they consistently achieve 93% of their theoretical memory bandwidth across various use cases. By comparison, NVIDIA's H100 reportedly utilizes only about 29% of its theoretical memory bandwidth in the best-case scenario.
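To see why bandwidth utilization matters so much, consider a rough upper bound on single-stream decode speed: if generating each token requires reading every weight once, tokens per second is at most the effective bandwidth divided by the model size in bytes. The sketch below is a back-of-the-envelope illustration under stated assumptions: 16-bit weights, a hypothetical 3 TB/s peak bandwidth applied to both cases, and no accounting for KV-cache traffic or batching. Because the two real devices have different peak bandwidths, it isolates the effect of utilization only and does not reproduce the company's "70% faster" figure.

```python
def decode_tokens_per_second(model_bytes, peak_bandwidth, utilization):
    """Upper bound on single-stream decode speed, assuming every generated
    token requires streaming all model weights from memory once."""
    return peak_bandwidth * utilization / model_bytes


# Assumptions for illustration only.
MODEL_BYTES = 70e9 * 2  # 70B parameters at 2 bytes each (16-bit weights)
PEAK_BW = 3e12          # hypothetical 3 TB/s peak memory bandwidth

# Utilization figures quoted in the article: 29% vs 93%.
low = decode_tokens_per_second(MODEL_BYTES, PEAK_BW, 0.29)
high = decode_tokens_per_second(MODEL_BYTES, PEAK_BW, 0.93)

print(f"29% utilization: {low:.1f} tokens/s upper bound")
print(f"93% utilization: {high:.1f} tokens/s upper bound")
print(f"ratio: {high / low:.2f}x")
```

At equal peak bandwidth, the bound scales linearly with utilization, so the gap between 29% and 93% translates directly into a roughly threefold difference in the decode ceiling.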

Their market strategy hinges on seamless integration into the existing NVIDIA ecosystem. Positron AI’s solution directly ingests the raw binary model weights used on NVIDIA GPUs and exposes an OpenAI-compatible API, so users can send and receive tokens without altering their existing engineering workflows. This "zero-step process" eliminates the complex and time-consuming recompilation or retooling often required by alternative hardware solutions.
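In practice, an OpenAI-compatible API means existing client code only needs a different base URL. The sketch below builds a chat completions request in the OpenAI wire format using only the Python standard library. The base URL, model name, and API key are hypothetical placeholders, and whether Positron's service exposes this particular endpoint is an assumption; the article states only that the API is OpenAI-compatible.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, api_key="EMPTY"):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical endpoint and model name, for illustration only.
req = build_chat_request("http://localhost:8000/v1", "example-model", "Hello")

# Sending is left commented out because it needs a live server:
# with urllib.request.urlopen(req) as resp:
#     body = json.load(resp)
#     print(body["choices"][0]["message"]["content"])
```

Switching such a client between providers would then amount to changing `base_url` and `model`, which is the kind of workflow continuity the "zero-step process" claim describes.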

Looking ahead, Positron AI plans to transition to dedicated Application-Specific Integrated Circuits (ASICs), which it expects will further improve performance per dollar and per watt. The company frames this move as a capital-efficient progression that builds on its proven FPGA designs. Having delivered rack-scale solutions to customers within two years of founding, it points to that pace as evidence it can execute rapidly in a fast-evolving market. By focusing on what it sees as the true bottleneck of AI inference and offering a compelling economic advantage, Positron AI positions itself as an enabler for the widespread, cost-effective deployment of advanced AI models.