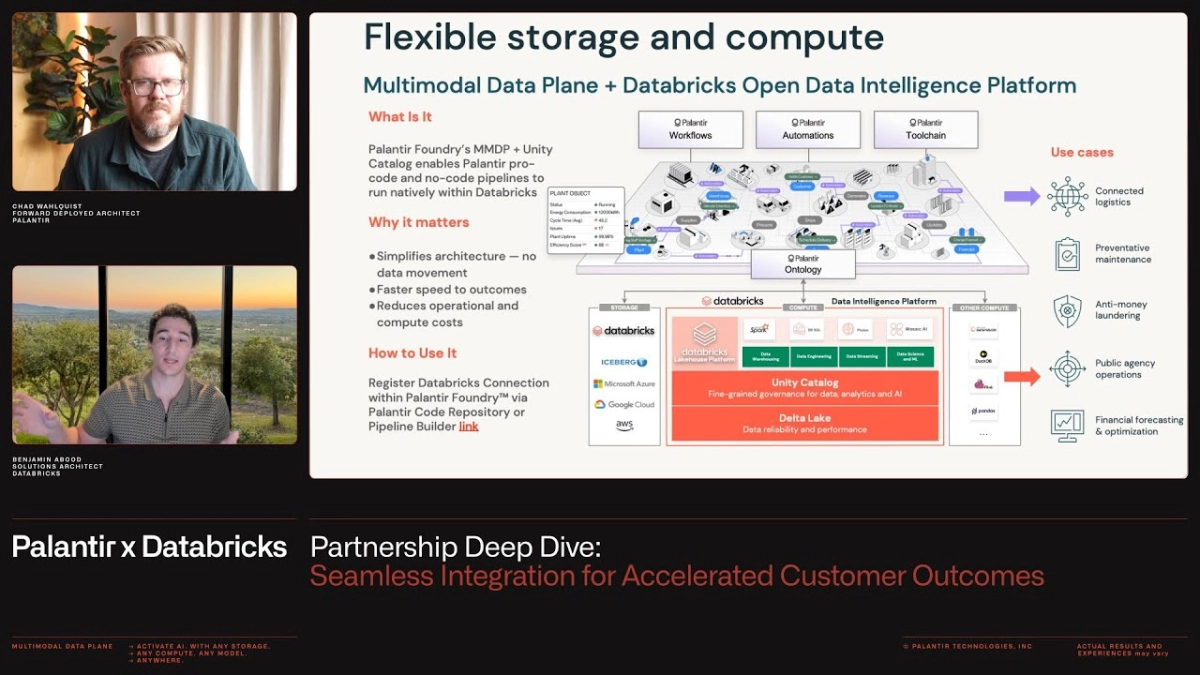

The future of operational artificial intelligence hinges not just on sophisticated models but on eliminating the architectural friction that plagues most enterprise data stacks. This was the central theme of a recent deep-dive presentation on the collaboration between Palantir and Databricks, which showed how two of the industry’s most powerful platforms are integrating at the foundational level to accelerate customer outcomes. The partnership responds to a common reality: customers were already running Palantir Foundry and the Databricks Open Data Intelligence Platform side by side, but the lack of seamless interoperability added unnecessary complexity and latency.

Chad Walkowski, a Deployed Architect at Palantir, and Ben Abood, an Architect at Databricks, jointly presented the technical pillars of the newly deepened integration. They emphasized that the collaboration was inherently “customer-driven,” born from joint executive discussions last year about how to resolve the impedance mismatch between the two ecosystems. The integration rests on four pillars: Data Federation, Governance, Compute, and AI & Workflows, all designed to deliver value faster by removing the need to move and copy data between platforms.

The primary technical breakthrough is true bi-directional interoperability. Historically, integrating such disparate platforms required cumbersome ETL (Extract, Transform, Load) pipelines, which duplicated data, increased storage costs, and left downstream copies stale. Databricks’ Unity Catalog serves as the unified governance layer, allowing Palantir Foundry to register Databricks tables as "Virtual Tables" in its own environment. As a result, Foundry can query and use data residing in the Databricks Lakehouse without physically moving it.
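To make the difference between a virtual table and an ETL copy concrete, here is a minimal Python sketch. It is not Foundry's or Unity Catalog's API: an in-memory SQLite database stands in for Databricks storage, and `VirtualTable`, `etl_copy`, and the name `main.sales.orders` are hypothetical. The virtual table stores only a reference and resolves every read against the source, while the ETL snapshot goes stale as soon as new data lands.

```python
import sqlite3
from dataclasses import dataclass
from typing import List, Tuple

# Assumption: an in-memory SQLite database stands in for Databricks storage.
lakehouse = sqlite3.connect(":memory:")
lakehouse.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
lakehouse.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 25.5)])
lakehouse.commit()


@dataclass(frozen=True)
class VirtualTable:
    """Hypothetical consumer-side handle: it stores a reference, never the rows."""

    catalog_name: str         # hypothetical three-level name, e.g. "main.sales.orders"
    source_table: str         # physical table the reference resolves to
    conn: sqlite3.Connection

    def read(self) -> List[Tuple[int, float]]:
        # Every read is resolved against the source, so it reflects current data.
        return self.conn.execute(
            f"SELECT id, amount FROM {self.source_table} ORDER BY id"
        ).fetchall()


def etl_copy(conn: sqlite3.Connection, table: str) -> List[Tuple[int, float]]:
    """The traditional alternative: materialize a duplicate snapshot of the rows."""
    return conn.execute(f"SELECT id, amount FROM {table} ORDER BY id").fetchall()


orders_vt = VirtualTable("main.sales.orders", "orders", lakehouse)
orders_snapshot = etl_copy(lakehouse, "orders")

# New data lands at the source after the snapshot was taken.
lakehouse.execute("INSERT INTO orders VALUES (?, ?)", (3, 7.25))
lakehouse.commit()

print(len(orders_vt.read()))  # 3: the virtual table sees the new row
print(len(orders_snapshot))   # 2: the ETL copy is already stale
```

The trade-off this illustrates: a reference always reflects the source, at the cost of every read depending on the source system being reachable.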

“Really, this is the synchronous bi-directional integration backward and forward so that I can have data in Databricks and register it in Palantir, I can create data in Palantir, register it in Databricks, and move those back and forth seamlessly, all with a unified catalog and governance around it,” Walkowski explained. This architectural simplification is the core of the integration: one catalog and one governance model, regardless of which platform produced the data.

The core advantage is immediate, secure data sharing with simplified governance. Databricks’ Unity Catalog manages fine-grained access control permissions, and those permissions remain enforced when data is federated into Foundry. For authentication, Palantir uses a service principal with workload identity federation, so Foundry accesses Databricks data with only the permissions it has been granted and without hard-coded credentials or secrets.
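The key property here is that permissions are evaluated on the governance side, per calling principal, before any rows reach the consumer. The sketch below models only that enforcement step in plain Python; it is not Unity Catalog's implementation or API. The principal names, the `POLICIES` table, and `governed_read` are hypothetical, and the sketch assumes the caller's identity has already been established (for example through a short-lived federated token rather than a stored secret).

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List

Row = Dict[str, object]


@dataclass(frozen=True)
class Grant:
    """Hypothetical grant: readable columns plus a row-level filter."""

    columns: FrozenSet[str]
    row_filter: Callable[[Row], bool] = lambda row: True


# Hypothetical governance-side policies, keyed by the calling principal.
POLICIES: Dict[str, Grant] = {
    "foundry-sp-emea": Grant(frozenset({"region", "revenue"}), lambda r: r["region"] == "EMEA"),
    "foundry-sp-admin": Grant(frozenset({"region", "revenue", "customer_email"})),
}

# Illustrative table contents.
TABLE: List[Row] = [
    {"region": "EMEA", "revenue": 120, "customer_email": "a@example.com"},
    {"region": "AMER", "revenue": 300, "customer_email": "b@example.com"},
]


def governed_read(principal: str) -> List[Row]:
    """Apply the principal's grant at the source; the consumer only sees the result."""
    grant = POLICIES.get(principal)
    if grant is None:
        # Deny by default: no grant means no access.
        raise PermissionError(f"{principal!r} has no grant on this table")
    return [
        {col: val for col, val in row.items() if col in grant.columns}  # column masking
        for row in TABLE
        if grant.row_filter(row)  # row filtering
    ]


print(governed_read("foundry-sp-emea"))  # [{'region': 'EMEA', 'revenue': 120}]
```

Because filtering happens before the data is returned, a consumer holding the restricted principal never receives the masked column or the out-of-scope rows, even transiently.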

The integration is designed so that computation runs where the data resides, a concept known as compute pushdown. When a user defines a transformation or pipeline in Palantir Foundry’s low-code tools, execution is automatically pushed down to Databricks’ serverless compute. Customers can keep working in familiar Foundry tools, such as Pipeline Builder or Code Repositories, while Databricks supplies the performance and scalability for the underlying data processing. Because data no longer has to be extracted into Foundry before it can be transformed, this combination reduces operational overhead and speeds up transformations.
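Compute pushdown can be illustrated by compiling pipeline steps into a single query that the remote engine executes, instead of pulling raw rows back and processing them locally. In this minimal sketch, SQLite stands in for Databricks serverless compute, and the `Filter`/`GroupSum` step types and `compile_to_sql` are hypothetical; they are not Pipeline Builder's internal representation. Both paths produce the same answer, but only the pushed-down path avoids transferring every raw row.

```python
import sqlite3
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Filter:
    """Hypothetical low-code step: keep rows where `column op value`."""
    column: str
    op: str       # restricted to "=", ">", "<" below
    value: float


@dataclass(frozen=True)
class GroupSum:
    """Hypothetical low-code step: sum `measure` per `key`."""
    key: str
    measure: str


def compile_to_sql(table: str, flt: Filter, agg: GroupSum) -> Tuple[str, tuple]:
    """Translate the steps into one SQL statement for the remote engine to execute."""
    if flt.op not in {"=", ">", "<"}:
        raise ValueError(f"unsupported operator {flt.op!r}")
    sql = (
        f"SELECT {agg.key}, SUM({agg.measure}) FROM {table} "
        f"WHERE {flt.column} {flt.op} ? GROUP BY {agg.key} ORDER BY {agg.key}"
    )
    return sql, (flt.value,)


# Assumption: in-memory SQLite stands in for the remote compute engine.
engine = sqlite3.connect(":memory:")
engine.execute("CREATE TABLE shipments (site TEXT, weight REAL)")
engine.executemany(
    "INSERT INTO shipments VALUES (?, ?)",
    [("A", 5.0), ("A", 12.0), ("B", 20.0), ("B", 1.0), ("C", 30.0)],
)

flt = Filter("weight", ">", 4.0)
agg = GroupSum("site", "weight")

# Pushdown: the engine filters and aggregates; only the small result comes back.
sql, params = compile_to_sql("shipments", flt, agg)
pushed: List[Tuple[str, float]] = engine.execute(sql, params).fetchall()

# No pushdown: every raw row is transferred, then filtered and summed locally
# (this local path hard-codes the ">" operator used by `flt`).
raw = engine.execute("SELECT site, weight FROM shipments").fetchall()
totals = {}
for site, weight in raw:
    if weight > flt.value:
        totals[site] = totals.get(site, 0.0) + weight
local = sorted(totals.items())

print(pushed)                 # [('A', 17.0), ('B', 20.0), ('C', 30.0)]
print(len(raw), len(pushed))  # 5 3
```

Column and table names are interpolated into the SQL string only because they come from a trusted pipeline definition; the filter value is still passed as a bound parameter.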

“If I've already got all my data together and I'm pipelining, I'm doing this work in Databricks, now I want to build operational applications and I want to model into the ontology the data, not only the data but also the logic and the actions to really be able to drive these integrated workflows across your business,” Walkowski noted, emphasizing the shift toward operational applications driven by real-time data. The ability to perform complex data transformations and filtering in Foundry, while the actual computation occurs seamlessly in Databricks, demonstrates a first-class product integration, not merely a rudimentary connector.

The flexibility extends to the AI and machine learning lifecycle. Data science teams often prefer Databricks notebooks and MLflow for training complex models on large datasets. Once a model is trained and registered in Databricks, it can also be registered in Palantir Foundry’s Ontology, where it is immediately available to Foundry’s operational applications. This closes the critical gap between experimental data science and real-world operational deployment. Because a model can either remain hosted in Databricks or be imported into Foundry, teams retain optionality across the architecture.
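The value of registering a model in the Ontology is that applications call the model through a stable binding, regardless of where it is hosted. The toy sketch below assumes that design; `RegisteredModel`, `Ontology`, `make_remote_predictor`, and the name `ml.churn.churn_model` are hypothetical and are not Foundry or MLflow APIs. The "remote" predictor simply wraps a local function so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Predictor = Callable[[List[float]], float]


@dataclass(frozen=True)
class RegisteredModel:
    """Hypothetical registry entry shared by both platforms."""
    name: str      # hypothetical catalog-style name, e.g. "ml.churn.churn_model"
    version: int
    hosting: str   # "remote": served where it was trained; "imported": copied in


def churn_score(features: List[float]) -> float:
    # Stand-in for a trained model: a fixed linear scorer with illustrative weights.
    weights = [0.5, -0.25]
    return sum(w * x for w, x in zip(weights, features))


def make_remote_predictor(model_fn: Predictor) -> Predictor:
    # Hypothetical: a real remote predictor would call a serving endpoint over the
    # network; this wrapper calls the local function so the sketch runs offline.
    def call_endpoint(features: List[float]) -> float:
        return model_fn(features)
    return call_endpoint


class Ontology:
    """Toy stand-in: binds a model to an object type so applications can score objects."""

    def __init__(self) -> None:
        self._bindings: Dict[str, Tuple[RegisteredModel, Predictor]] = {}

    def bind(self, object_type: str, model: RegisteredModel, predictor: Predictor) -> None:
        self._bindings[object_type] = (model, predictor)

    def model_for(self, object_type: str) -> RegisteredModel:
        return self._bindings[object_type][0]

    def score(self, object_type: str, features: List[float]) -> float:
        _, predictor = self._bindings[object_type]
        return predictor(features)


ontology = Ontology()

# Option 1: keep the model hosted where it was trained.
ontology.bind("Customer", RegisteredModel("ml.churn.churn_model", 1, "remote"),
              make_remote_predictor(churn_score))
remote_result = ontology.score("Customer", [4.0, 2.0])

# Option 2: import the same model version; application code does not change.
ontology.bind("Customer", RegisteredModel("ml.churn.churn_model", 1, "imported"), churn_score)
imported_result = ontology.score("Customer", [4.0, 2.0])

print(remote_result, imported_result)  # 1.5 1.5
```

Swapping the binding from the remote to the imported model changes nothing for the caller of `score`, which is the kind of optionality the paragraph describes.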

The partnership aims to reduce the time and complexity involved in moving data and managing disparate systems. “We read the data from Databricks, we used Databricks compute to run compute where the data lived, you know, at the Databricks storage, and we wrote that output data set directly back to Unity Catalog,” said Abood, underscoring how little data had to move. With data movement minimized, organizations can spend their resources building valuable operational applications rather than maintaining complex data infrastructure, ultimately accelerating the delivery of AI-driven insights across the enterprise.
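Abood's read, compute, and write-back flow can be sketched as a single statement that materializes its result inside the same engine, so output rows never travel to the caller. This is a minimal illustration under stated assumptions: SQLite stands in for Databricks storage and compute, and `run_in_place` is a hypothetical helper. In the workflow Abood describes, the output would land as a table in Unity Catalog rather than in a SQLite database.

```python
import sqlite3

# Assumption: in-memory SQLite stands in for Databricks storage and compute.
engine = sqlite3.connect(":memory:")
engine.execute("CREATE TABLE sensor_readings (machine TEXT, temp_c REAL)")
engine.executemany(
    "INSERT INTO sensor_readings VALUES (?, ?)",
    [("m1", 70.0), ("m1", 90.0), ("m2", 65.0)],
)
engine.commit()


def run_in_place(conn: sqlite3.Connection, output_table: str, select_sql: str) -> str:
    """Hypothetical helper: materialize a query's result as a new table inside the
    same engine. No result rows are returned to the caller, only the table name."""
    conn.execute(f"CREATE TABLE {output_table} AS {select_sql}")
    conn.commit()
    return output_table


out = run_in_place(
    engine,
    "machine_avg_temp",
    "SELECT machine, AVG(temp_c) AS avg_temp FROM sensor_readings GROUP BY machine",
)

# The output now sits alongside its inputs, readable by any consumer of the store.
tables = [row[0] for row in engine.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
print(out, tables)  # machine_avg_temp ['machine_avg_temp', 'sensor_readings']
```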