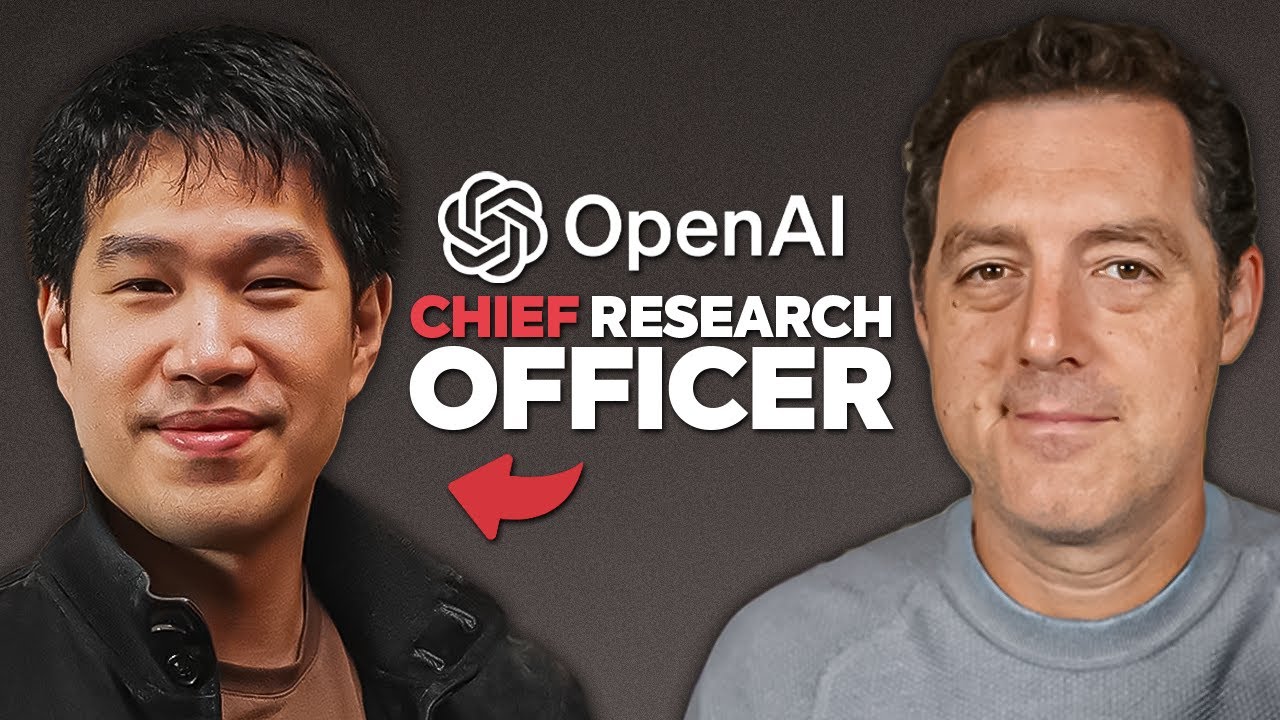

The launch of a new GPT model reliably generates intense anticipation, a sentiment that Mark Chen, Head of Research at OpenAI, describes as palpable within the company. Speaking with Matthew Berman, Chen offered a rare glimpse into the internal dynamics and strategic pivots guiding OpenAI's trajectory, particularly concerning the forthcoming GPT-5 and the broader future of artificial intelligence. His remarks point to a distinctive organizational philosophy and to the technical advances behind GPT-5: synthetic training data, a merger of pre-training and reasoning, and a strong focus on coding.

Chen articulated a core tenet of OpenAI's operating ethos: "the research really is the product." The relationship he describes is symbiotic: breakthroughs in fundamental AI research translate directly into products, and those products in turn enable further research. He highlighted the "high emotion" and "internal uncertainty" that accompany each major model launch, and emphasized the rigorous "vibe checking" process used to confirm that a model is ready for public release and free of pathological behaviors. This balance between pushing scientific boundaries and delivering robust, user-ready products is central to OpenAI's identity.

A central topic was the scarcity of data for training increasingly powerful models. Publicly available data is finite, and Chen revealed that GPT-5 also draws on synthetic data. This data, generated by prior models rather than by humans, is proving surprisingly effective, particularly in domains like coding. Chen noted, "we've had a very healthy synthetic data program... and it helps us improve coverage in areas that we really want GPT-5 to shine." The approach suggests a path past current data limitations, although Chen acknowledged that it remains an active research area with much room for improvement.

The conversation also covered the architectural shifts behind GPT-5 and the thinking that motivates them. Chen explained that GPT-5 is among the first models to seamlessly marry "both the pre-training paradigm and the reasoning paradigm together." The goal of this convergence is to deliver both rapid, intuitive responses and deep, complex reasoning without requiring users to choose between modes. This "omnimodel" approach, as some term it, contrasts with specialized models and points toward a future in which a single, highly capable AI handles a vast array of tasks.

The ambition extends to the concept of "organizational AI," in which AI agents collaborate to achieve high-level objectives. While this is still an active research area, Chen believes such collaborative intelligence is a core component of developing general intelligence. Memory and context management remain critical hurdles, but efficiency gains mean models like GPT-5 can now process "real-world long repositories," pushing past previous limitations. Ongoing improvements in robustness and reliability, including fewer hallucinations, are raising the bar for what models can achieve. The focus on verifiable outputs, particularly in coding, allows progress to be measured objectively, which in turn speeds it up. This strategic emphasis on coding, Chen believes, is a powerful accelerant for scientific progress and a key pathway to AGI.