"We are heading to a world of absolute compute scarcity," declares Greg Brockman, OpenAI's President, naming a challenge that runs through all cutting-edge artificial intelligence development. Far from a mere logistical hurdle, this scarcity is reshaping strategy and day-to-day operations for every organization trying to push the boundaries of AI.

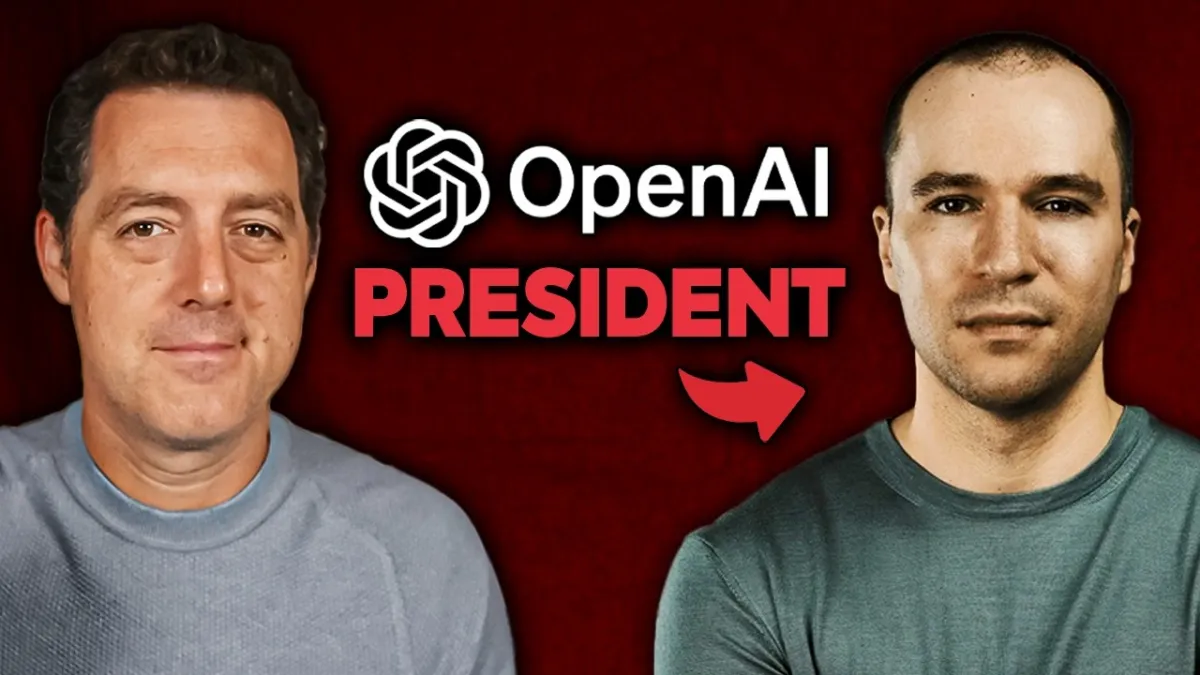

In a recent interview with Matthew Berman, Greg Brockman offered a candid and insightful glimpse into OpenAI’s ambitious mission, discussing topics ranging from the scaling of multimodal models like Sora 2 to the evolving definition of Artificial General Intelligence (AGI), the critical bottlenecks in the AI supply chain, and the profound societal shifts proactive AI is poised to trigger. His commentary painted a picture of relentless innovation driven by a deep understanding of underlying principles, yet constantly constrained by the physical limits of computation.

A striking insight from Brockman is the enduring power of the transformer architecture, which underpins not only large language models but also advanced video generation models like Sora 2. Despite the seemingly vast differences between text and video, he notes, "fundamentally, everything is still just deep learning, same mechanics, same sort of underlying principles. You got to scale up with a massive amount of compute... still transformer." This "deep fact" suggests that a single computational approach works across diverse modalities, so progress can keep coming from scaling three inputs: algorithms, compute, and data. That scaling also reveals "multiple limiting reagents": each of these inputs can be tuned for performance, and a shortfall in any one can hold back the others, which shows how complex and interconnected the work of advancing AI capabilities is.

The most pressing of these limiting reagents, according to Brockman, is compute itself, and specifically the energy needed to power it. He articulates a profound shift in perspective: AGI, once primarily viewed as a software endeavor, is now increasingly understood as a compute-intensive undertaking. OpenAI’s strategic investments, including a partnership with AMD for hardware and its ambitious Stargate project for data centers, underscore this recognition. The internal allocation of scarce computational resources, he admits, is a process of "pain and suffering," reflecting the intense competition for cycles among product and research initiatives. This constant struggle means that the pace of AI advancement is dictated not solely by theoretical breakthroughs, but also by the infrastructure that enables them.

The interview also delves into the impending transformation of the human-internet experience. Brockman observes that ChatGPT "really makes you realize how unnatural it is to go to a static website to just read stuff." This sentiment points to a future where AI agents increasingly browse and interact with the digital world on our behalf, leading to a "decoupling" of human attention from traditional web interfaces. The vision extends to "fully generated software" and a world of "proactive AI," where systems anticipate user needs rather than merely reacting to commands. Such a shift raises questions about how web monetization will move beyond traditional advertising toward new, value-driven models. The core insight here is that AI will demand that every digital interaction deliver genuine value, making tasks that add none obsolete.

Regarding the societal impact, Brockman acknowledges concerns about job displacement, but frames it as a transformation rather than outright elimination. He envisions a world where AI empowers humans to be "much more protective of their time," automating repetitive or non-value-add tasks and freeing up human creativity and judgment for "grand problems." While the exact balance of new jobs versus displaced ones remains uncertain, the emphasis is on a future of abundance, where human-AI collaboration unlocks unprecedented productivity.

Ultimately, the conversation with Greg Brockman underscores that the path to AGI is not a linear, predictable journey, but a complex, iterative process fraught with both immense potential and formidable challenges. The future, as he suggests, will be "stranger and probably more delightful than we can imagine," but it will be built on the bedrock of compute, guided by human intent, and continually reshaped by the symbiotic evolution of AI and society.