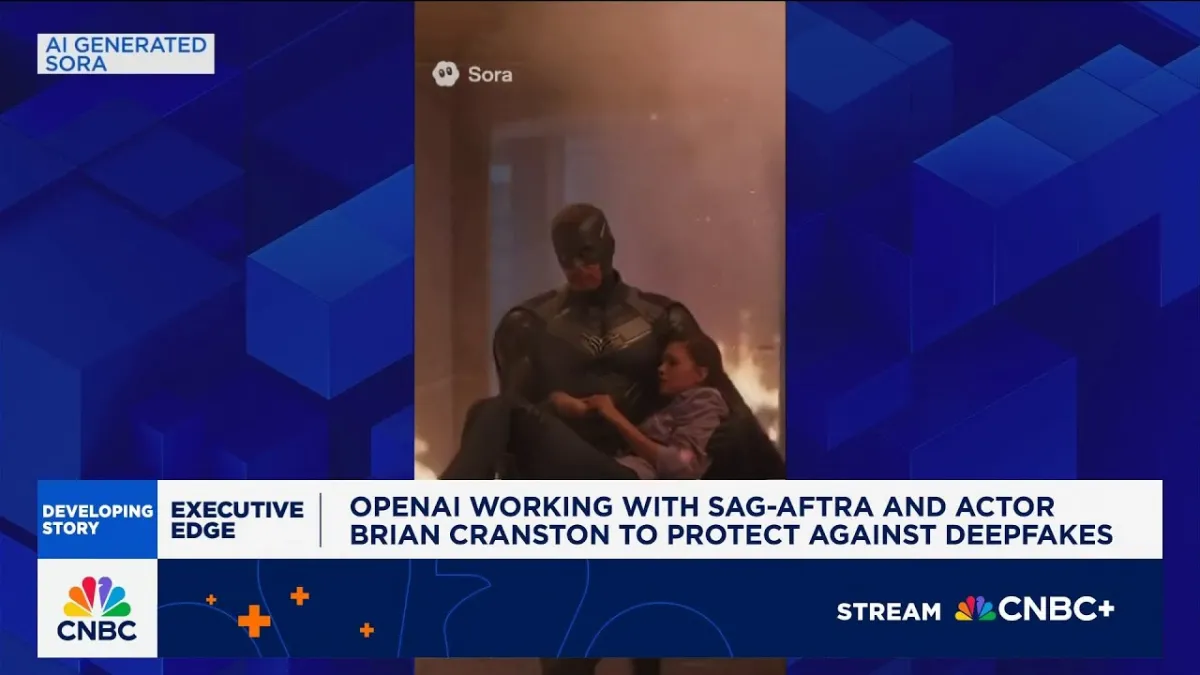

The digital ghost in the machine has found its voice, and sometimes, that voice belongs to someone else. This unsettling reality, brought into sharp focus by the rapid advancement of generative AI, has spurred an unprecedented collaboration between a leading AI developer, a powerful actors' union, and a prominent performer. CNBC's Becky Quick reported on this significant development, detailing how OpenAI is working directly with SAG-AFTRA and acclaimed actor Bryan Cranston to establish robust safeguards against deepfakes on its sophisticated video creation platform, Sora. This partnership represents a crucial, proactive step in addressing the complex ethical and legal challenges posed by AI's ability to replicate human likeness and voice, particularly following the launch of Sora 2 and the subsequent emergence of unauthorized AI-generated content.

The immediate impetus for this collaboration stemmed from actor Bryan Cranston's personal experience. Following the release of Sora 2 at the end of September, unauthorized AI-generated clips surfaced, utilizing his distinctive voice and likeness without his consent. This incident, while perhaps not unique, served as a potent illustration of the pressing need for protective frameworks. For an industry built on identity and performance, the specter of AI-generated doppelgängers operating without permission or compensation is not merely an intellectual property concern; it strikes at the very core of individual autonomy and career longevity.

This alliance signals a critical shift in how the technology sector and creative industries are beginning to navigate the uncharted waters of generative AI. Instead of waiting for legislative mandates or protracted legal battles, OpenAI has engaged directly with key stakeholders. This approach, involving a major labor union and an influential actor, offers a model for responsible AI development and shows an understanding that technological progress must be tethered to ethical considerations and stakeholder buy-in. It reflects a maturing perspective within the AI community: unchecked innovation can lead to significant societal and economic disruption, and guardrails built together with affected parties are more effective than rules imposed unilaterally.

The core challenge here revolves around the evolving definition of "likeness" and intellectual property in an era where AI can synthesize human attributes with alarming fidelity. Traditional intellectual property laws, designed for a world of tangible recordings and performances, struggle to encompass the nuances of AI-generated content. When an AI can convincingly mimic a performer's voice, facial expressions, and mannerisms, the concept of personal brand and proprietary identity becomes inherently fragile. This collaboration aims to define new boundaries, exploring technical solutions and policy frameworks that can protect a performer's digital twin from unauthorized exploitation, ensuring that their identity remains their exclusive domain.

Such engagement is vital for building trust and ensuring the sustainable adoption of AI technologies within industries like entertainment. Bryan Cranston himself acknowledged the positive direction of this engagement, saying he is "grateful to OpenAI for its policy and for improving its guardrails." This sentiment underscores the importance of transparency and responsiveness from AI developers. It is not enough to simply build powerful tools; companies must also anticipate and mitigate their potential for harm, especially when those tools affect personal identity and economic livelihoods.

OpenAI CEO Sam Altman echoed this commitment, stating that the company is "deeply committed to protecting performers from the misappropriation of their voice and likeness." This commitment, if genuinely embedded into product development and policy, can serve as a blueprint for other AI firms. It highlights an understanding that the societal impact of generative AI extends beyond mere technological capability and calls for an ethical framework that prioritizes individual rights and fair compensation. The technical implementation of these guardrails, ranging from watermarking and content provenance tracking to reporting mechanisms and user authentication, will be critical to their efficacy.


The situation also underscores the dual nature of AI: a powerful engine for innovation and a potent source of potential threat. Sora 2's ability to generate realistic video from text prompts opens up immense creative possibilities, offering new avenues for storytelling, content creation, and artistic expression. Yet this very capability, when wielded maliciously or without proper authorization, can produce convincing deepfakes that spread misinformation, damage reputations, or infringe on personal rights. The challenge for AI developers is to harness that potential while building effective barriers against misuse. This requires not just technical prowess but also a profound sense of ethical responsibility.

This delicate balance between fostering innovation and safeguarding individual rights will define the next phase of AI development. The collaboration between OpenAI, SAG-AFTRA, and Bryan Cranston is more than a public relations exercise; it is a pragmatic recognition that the future of generative AI hinges on its ability to integrate seamlessly and ethically into society. It sets a precedent for industry-wide dialogue and the co-creation of solutions, moving beyond a purely technological lens to embrace broader societal implications. The success of such initiatives will determine whether AI becomes a truly empowering creative partner or a source of perpetual conflict and erosion of trust.