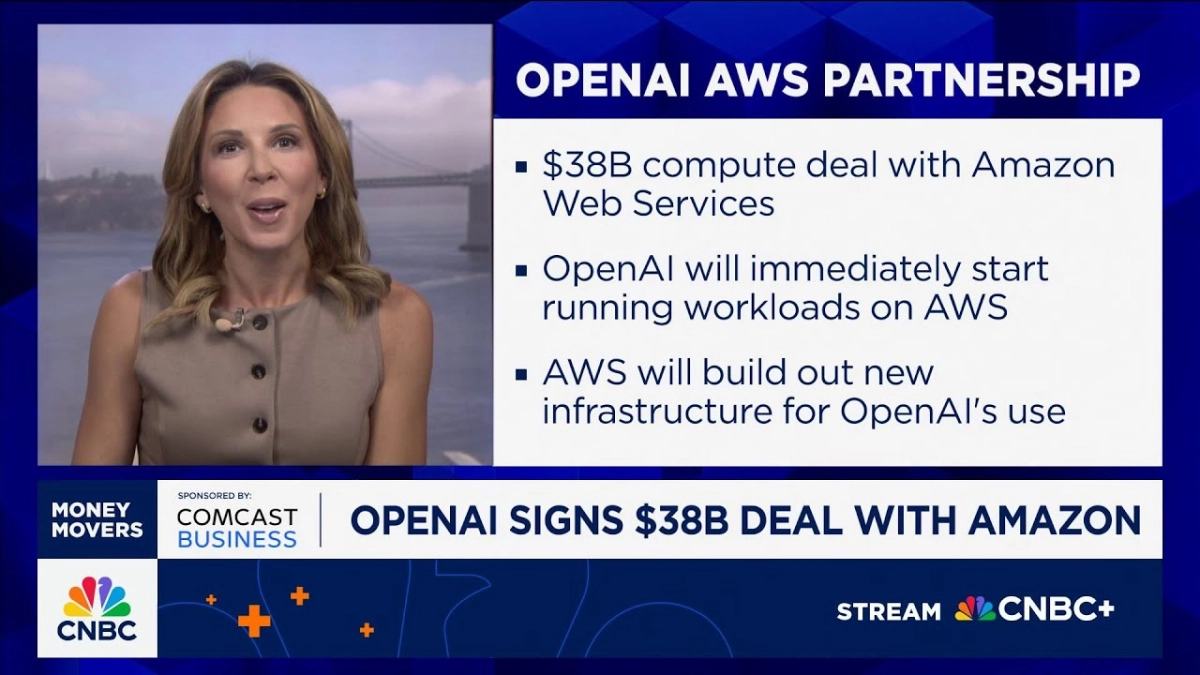

The immense and escalating demand for computational power, a cornerstone of advanced artificial intelligence development, is fundamentally redrawing the strategic alliances within the technology sector. This reality was sharply illuminated by CNBC reporter MacKenzie Sigalos on 'Squawk on the Street,' as she unveiled the details of a groundbreaking $38 billion infrastructure deal between OpenAI and Amazon Web Services. Sigalos's report, which included insights from Dave Brown, Head of Compute and Machine Learning Services at AWS, provided critical context for this pivotal partnership, underscoring its immediate operational impact and its broader implications for the AI and cloud ecosystems.

This agreement marks OpenAI’s inaugural direct contract with Amazon Web Services, a significant development in its infrastructure strategy. For years, Microsoft Azure served as OpenAI's exclusive cloud partner. In January that exclusivity was replaced by a right of first refusal, which itself expired just last week. Sigalos underscored the import of this transition, stating, "It's the clearest sign yet that it's no longer relying solely on Microsoft for compute." This move signals a deliberate diversification, allowing OpenAI to tap into a wider array of resources and capabilities from various cloud providers.

The strategic pivot towards a multi-cloud future by OpenAI is arguably the most consequential takeaway from this announcement. While Microsoft has reaffirmed a $150 billion commitment from OpenAI to continue scaling on Azure, the addition of AWS, alongside prior agreements with Google and Oracle, demonstrates a clear intent to avoid vendor lock-in and optimize for the best available compute, irrespective of provider. For startup founders, this illustrates the evolving blueprint for scaling AI operations: building resilience and flexibility into the core infrastructure is paramount, even if it means managing complex multi-vendor relationships. Venture capitalists and tech insiders should note that this diversification mitigates single-point-of-failure risks and provides leverage in negotiations, ultimately enhancing OpenAI’s long-term operational stability and innovation capacity.

The sheer scale of these compute deals underscores the intensifying AI arms race, where access to cutting-edge hardware is a decisive competitive advantage. OpenAI will immediately begin running workloads on AWS, leveraging "hundreds of thousands of Nvidia GPUs across US data centers." This highlights the critical and unyielding demand for Graphics Processing Units (GPUs), which are the workhorses of modern AI model training and inference. Cloud providers are now central figures in this race, acting as the primary suppliers of the specialized compute necessary to push the boundaries of AI. For AI professionals, this signifies that the availability and cost of high-performance compute will continue to be a dominant factor in research and development, influencing everything from model architecture choices to deployment strategies.

A particularly striking detail emphasized by Sigalos, drawing from her conversation with AWS's Dave Brown, pertains to the immediate readiness of this new capacity. Brown "stressed to me that unlike some of the $1.4 trillion in compute deals that OpenAI has signed lately, this capacity is already live, not just a future-looking partnership." This means OpenAI gains immediate, tangible access to substantial compute power, enabling rapid scaling of its existing projects and accelerating new initiatives. The urgency of this "live" capacity highlights the pressure on AI leaders to continually expand their computational footprint to maintain their competitive edge.

Furthermore, AWS is not merely offering existing services; it plans to "build out new infrastructure specifically for OpenAI's use." This bespoke development illustrates the deep commitment cloud providers are willing to make for marquee AI clients, tailoring their offerings to meet highly specific and demanding requirements. This level of customization and dedicated infrastructure investment is a testament to the strategic value these AI companies represent to the cloud giants. For founders and VCs, this signals that establishing strong, symbiotic relationships with cloud providers can yield significant long-term benefits beyond standard service agreements, potentially including tailored hardware and engineering support.

The expiration of the right of first refusal that Microsoft held over OpenAI's cloud partnerships opened the door for this deal and signals a new era of flexibility for the AI pioneer. The relationship shifts from an exclusive dependency to a more diversified, strategic partnership model, reflecting OpenAI’s growing maturity and its imperative to secure the best possible resources for its ambitious roadmap.

OpenAI's embrace of a multi-cloud strategy, extending its compute relationships beyond Microsoft to include AWS, Google, and Oracle, is a clear indicator of how large and varied the infrastructure needs of leading AI research have become. It reflects not only the operational imperative for scale and resilience but also the evolving dynamics of power and partnership within the high-stakes world of artificial intelligence.