NVIDIA is significantly expanding its "Physical AI" initiatives, aiming to transform how smart cities, industrial facilities, and critical infrastructure operate globally. This push focuses on enabling systems to perceive, reason, and act within real-world environments, promising enhanced safety, efficiency, and automation across various sectors. In an announcement on its blog, NVIDIA detailed its collaborations with key industry partners and unveiled substantial updates to its Metropolis platform, the foundational technology for these advancements.

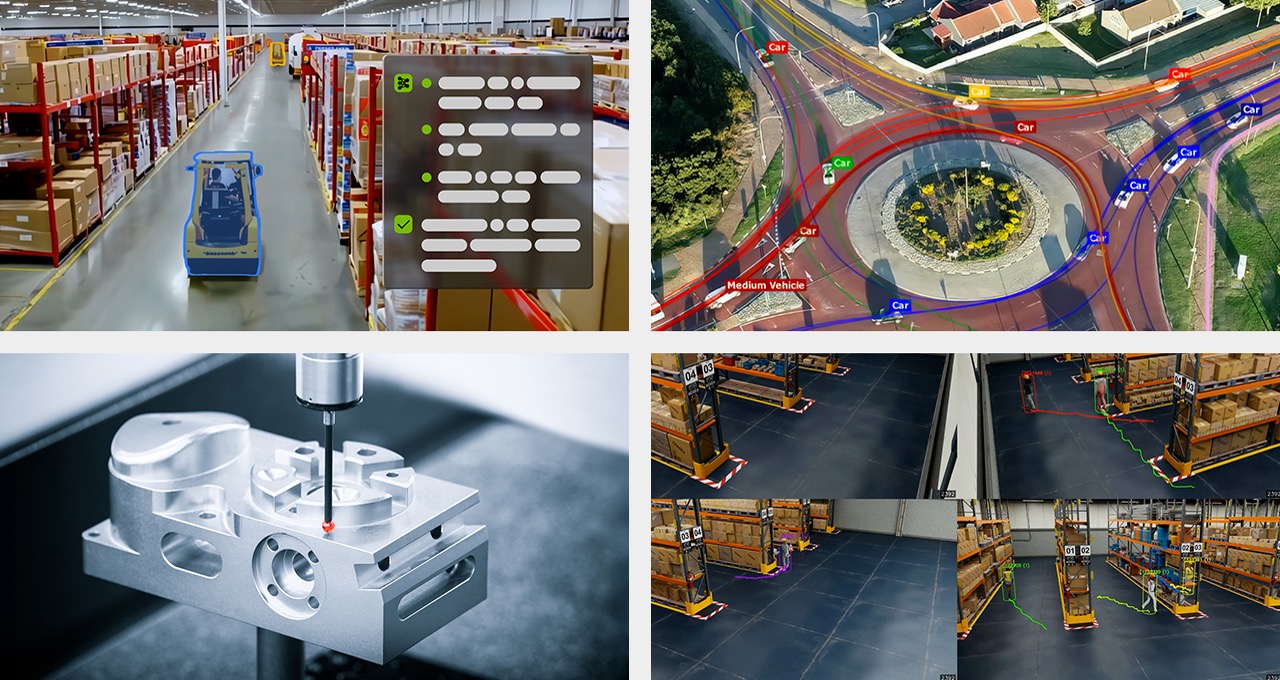

Physical AI development follows a continuous loop of simulating, training, and deploying AI agents that interact with the physical world. This approach supports applications ranging from industrial automation, such as taking over dangerous tasks around heavy machinery and detecting manufacturing defects, to better transportation services and stronger public safety. The core idea is to equip infrastructure with advanced vision AI, using video sensors to understand and respond to dynamic environments.

At the heart of this strategy is the NVIDIA Metropolis platform, designed to streamline the development, deployment, and scaling of video analytics AI agents from the edge to the cloud. Metropolis provides developers with the tools to integrate visual perception into their systems more rapidly, enhancing both productivity and safety across diverse settings.

NVIDIA highlighted several leading companies already using Physical AI with Metropolis. Accenture, collaborating with Belden, is developing smart virtual fences for factories using OpenUSD-based digital twins in NVIDIA Omniverse. These systems are designed to prevent accidents between human operators and large robots, with the AI models deployed at the edge through Metropolis and the NVIDIA DeepStream SDK for real-time inference.
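The announcement does not describe how these virtual fences are implemented. As a rough illustration of the underlying idea only, the Python sketch below checks whether a detected person has entered a restricted zone drawn around a robot cell. It assumes a detector (for example, one running in a DeepStream pipeline) already outputs person bounding boxes as `(x_min, y_min, x_max, y_max)` pixel coordinates, and that the zone is a polygon in the same camera coordinates. The function names and the bottom-centre "foot point" heuristic are hypothetical choices, not Accenture's, Belden's, or NVIDIA's implementation.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), pixel coords (assumed format)


def point_in_polygon(pt: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: toggle 'inside' each time a rightward ray from pt crosses an edge."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the polygon
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def fence_violations(person_boxes: List[Box], zone: List[Point]) -> List[int]:
    """Hypothetical helper: indices of person boxes whose bottom-centre point is inside the zone."""
    hits = []
    for idx, (x_min, y_min, x_max, y_max) in enumerate(person_boxes):
        # Use the bottom-centre of the box as a rough proxy for where the person stands.
        foot = ((x_min + x_max) / 2, y_max)
        if point_in_polygon(foot, zone):
            hits.append(idx)
    return hits


if __name__ == "__main__":
    # Hypothetical restricted zone around a robot cell, in camera pixel coordinates.
    robot_zone = [(100, 100), (400, 100), (400, 300), (100, 300)]
    detections = [(150, 50, 200, 250), (500, 50, 550, 250)]
    print(fence_violations(detections, robot_zone))  # prints [0]
```

In a real deployment the zone would more likely be defined in floor coordinates (for example, from a calibrated digital twin) rather than raw pixels, and an alert would typically trigger only after the violation persists for several frames to avoid reacting to detector noise.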

Industrial automation provider Avathon uses the Metropolis Blueprint for video search and summarization (VSS) to deliver real-time insights for manufacturing and energy facilities, improving operational efficiency and worker safety. DeepHow has introduced a "Smart Know-How Companion" for industrial employees that uses the Metropolis VSS blueprint to turn workflows into bite-sized, multilingual videos. The tool has cut onboarding time by 80% at companies such as Anheuser-Busch InBev.

Milestone Systems, which operates a major platform for managing IP video sensor data, is building Project Hafnia, a large computer vision data library. Project Hafnia gives physical AI developers access to customized vision language models (VLMs) for intelligent transportation systems, built with the help of NVIDIA NeMo Curator, NVIDIA's data-curation tool, and Milestone is exploring the new NVIDIA Cosmos Reason VLM. IoT company Telit Cinterion has integrated NVIDIA TAO Toolkit 6 into its AI-powered visual inspection platform, enabling rapid development and deployment of custom AI models for defect detection and quality control.

Key Metropolis Updates Drive Physical AI

NVIDIA also announced five significant updates to its Metropolis platform, designed to make building Physical AI applications faster and easier. The first is the latest version of Cosmos Reason, NVIDIA's open, customizable 7-billion-parameter reasoning VLM. It now offers contextual video understanding and temporal event reasoning, making it well suited to traffic monitoring, public safety, and visual inspection, and its relatively compact size makes it practical to deploy anywhere from the edge to the cloud.
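To put the "compact size" of a 7-billion-parameter model in perspective, here is a back-of-the-envelope estimate of the memory needed just to hold its weights. This counts weights only (activations, the KV cache, and video inputs are ignored), and the precisions shown are illustrative assumptions, not published deployment settings for Cosmos Reason:

$$
\begin{aligned}
\text{weight memory} &\approx N_{\text{params}} \times \text{bytes per parameter} \\
\text{FP16/BF16 (2 B/param):}\quad 7\times10^{9} \times 2\ \text{B} &= 1.4\times10^{10}\ \text{B} \approx 14\ \text{GB} \\
\text{4-bit (0.5 B/param):}\quad 7\times10^{9} \times 0.5\ \text{B} &= 3.5\times10^{9}\ \text{B} \approx 3.5\ \text{GB}
\end{aligned}
$$

At these sizes the weights fit comfortably in a single workstation GPU, and a quantized copy fits in far smaller memory budgets, which helps explain why a model of this scale is pitched for deployment from the edge to the cloud.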

Version 2.4 of the VSS Blueprint simplifies integrating Cosmos Reason into existing vision AI applications, with expanded APIs that give developers more flexibility to augment computer vision pipelines with generative AI. The NVIDIA TAO Toolkit now includes a new suite of vision foundation models and advanced fine-tuning methods, optimized for deploying Physical AI solutions in edge and cloud environments. It is complemented by a new DeepStream SDK Inference Builder.

New extensions in the NVIDIA Isaac Sim reference application address common vision AI development challenges, such as limited labeled data and rare edge-case scenarios. These tools simulate human and robot interactions, generate rich object-detection datasets, and create incident-based scenes to train VLMs, accelerating development and improving real-world AI performance.

Finally, all of these Metropolis components now support NVIDIA RTX PRO 6000 Blackwell GPUs, the NVIDIA DGX Spark desktop supercomputer, and the NVIDIA Jetson Thor platform, spanning development and deployment from the edge to the cloud.