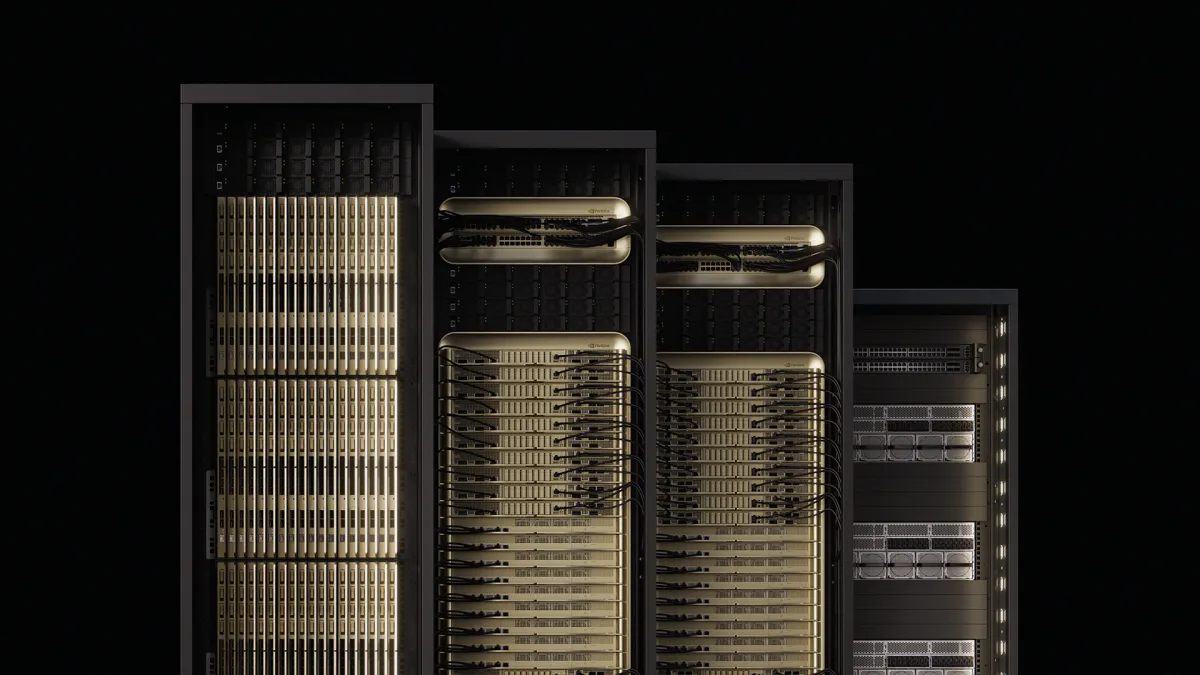

NVIDIA is revealing its plans for the next generation of AI infrastructure. It calls these "gigawatt AI factories." They center around the new NVIDIA Vera Rubin platform. At the OCP Global Summit, the company showed off its Vera Rubin NVL144 MGX-generation rack servers. It also highlighted the larger ecosystem. This represents a huge shift in data center design. It's not just about faster GPUs; it's a fundamental change in how AI compute is powered, cooled, and scaled.

The Vera Rubin NVL144 compute tray is built for extreme efficiency and performance. It has a modular, 100% liquid-cooled architecture. A central circuit board replaces traditional cables. This promises faster assembly and easier servicing. Additionally, it supports NVIDIA ConnectX-9 800GB/s networking and Rubin CPX for large-scale inference. This modular design, plus NVIDIA's plan to make these innovations open standards, aims to speed up deployment. It also lets partners combine components for rapid scaling.

A key part of this future is the switch to 800-volt direct current (VDC) data centers. This is a big leap from older 415 or 480 VAC systems. The higher voltage is already used in electric vehicles and solar power. For AI factories, it offers increased scalability, better energy efficiency, and reduced material usage. Companies like Foxconn and CoreWeave are already designing data centers around this 800 VDC standard. Vertiv has also unveiled a complete power and cooling plan.

Building Hyperscale AI

The NVIDIA Kyber rack server generation will be ready by 2027. It is designed to house an astounding 576 NVIDIA Rubin Ultra GPUs. Kyber is engineered to boost GPU density and network scale. It uses vertical compute blades and cable-free NVLink switch blades for seamless networking. The move to 800 VDC is critical. It allows 150% more power through the same amount of copper. This eliminates the need for bulky, multi-ton copper busbars, saving hyperscalers millions of dollars.
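To get a feel for why bus voltage matters so much for copper, here is a rough Python sketch of the underlying arithmetic. It assumes a simple DC bus, where current is power divided by voltage and resistive loss is current squared times resistance. The 1 MW load, the 400 V comparison bus, and the conductor resistance are hypothetical values chosen for illustration, not figures from NVIDIA. This simplified model does not reproduce the 150% figure, which depends on details of the AC and DC wiring that are not covered here.

```python
def bus_current_amps(power_watts: float, bus_volts: float) -> float:
    """Current a DC bus must carry to deliver power_watts (I = P / V)."""
    if bus_volts <= 0:
        raise ValueError("bus_volts must be positive")
    return power_watts / bus_volts


def resistive_loss_watts(power_watts: float, bus_volts: float,
                         resistance_ohms: float) -> float:
    """Heat dissipated in the conductor (P_loss = I^2 * R)."""
    current = bus_current_amps(power_watts, bus_volts)
    return current ** 2 * resistance_ohms


# Hypothetical values for illustration only.
LOAD_WATTS = 1_000_000      # assumed 1 MW load
CONDUCTOR_OHMS = 0.0001     # assumed conductor resistance

if __name__ == "__main__":
    for volts in (400, 800):  # 400 V is an assumed lower-voltage comparison bus
        amps = bus_current_amps(LOAD_WATTS, volts)
        loss = resistive_loss_watts(LOAD_WATTS, volts, CONDUCTOR_OHMS)
        print(f"{volts} V bus: {amps:.0f} A, {loss:.2f} W lost in the conductor")
```

In this model, doubling the bus voltage halves the current for the same delivered power, and the resistive loss in a given conductor drops to a quarter. Less current is what lets designers use thinner conductors, or push more power through the copper they already have.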

NVIDIA's strategy goes beyond its own hardware. It is fostering a broad ecosystem with over 50 MGX partners. It is also expanding its NVLink Fusion program. This lets companies like Intel and Samsung Foundry integrate their custom silicon into NVIDIA's architecture. This, in turn, shortens the time to market for custom CPUs and XPUs. The large number of partners highlights the industry's commitment to this new era of AI factories.

The changes brought by NVIDIA Vera Rubin and the Kyber architecture are profound. They signal a future where AI models will need gigawatts of power. This isn't just a small improvement; it's a total reimagining of the data center. It's built for the massive demands of advanced AI. The shift to 800 VDC and liquid cooling, along with open standards, will unlock unprecedented scale and efficiency. Ultimately, this will fundamentally change what is possible in AI development.