NVIDIA is formalizing its hardware and software strategy for physical AI, detailing a "three-computer solution" designed to accelerate the development and deployment of advanced robotics. The integrated architecture targets the particular challenges of building intelligent systems that perceive, reason, and act in the real world, from autonomous vehicles to humanoid robots. In a post on its blog, the company outlined how its specialized platforms span the full lifecycle of physical AI, from training foundation models to running them in real time on the robot.

Physical AI, as NVIDIA defines it, goes beyond generative AI models such as large language models (LLMs) and image generators, which operate solely in digital environments. Physical AI systems are end-to-end models that can understand and navigate the three-dimensional world. NVIDIA places this within a broader transition from "Software 1.0," in which human programmers write serial code, to "Software 2.0," in which software writes software through GPU-accelerated machine learning. The goal is to enable robots and other autonomous systems to sense, respond to, and learn from their physical surroundings, transforming industries from manufacturing and logistics to healthcare and smart cities.

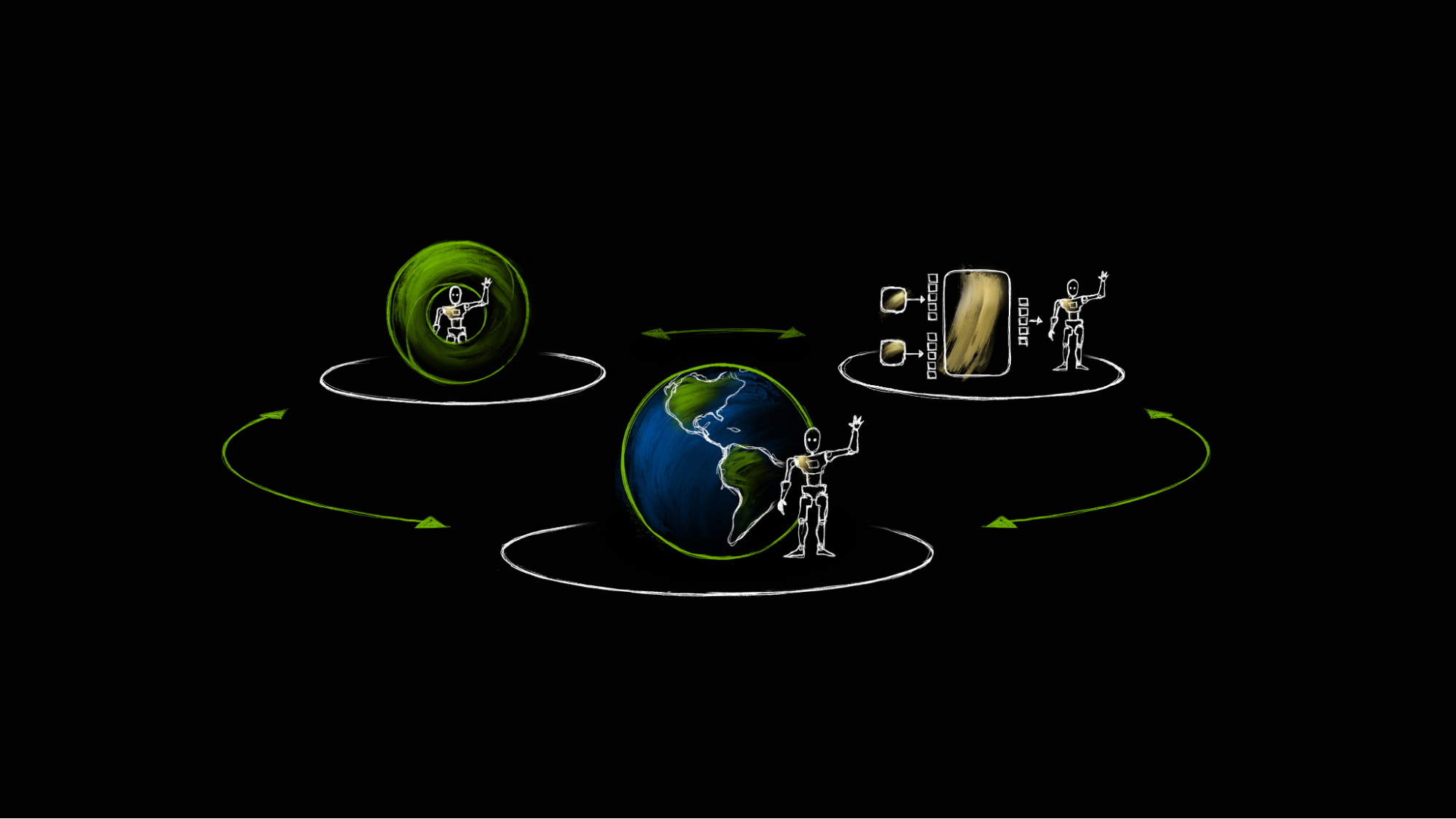

At the core of NVIDIA's offering are three distinct computing platforms, each optimized for a specific stage of physical AI development. For AI training, developers use NVIDIA DGX AI supercomputers, which provide the compute needed to pre-train large robot foundation models. These models let a robot understand natural language, recognize objects, and plan complex movements all at once. This stage is where a robot's foundational capabilities are built.

Accelerating Robot Development with Digital Twins

The second pillar is dedicated to simulation and synthetic data generation, powered by NVIDIA Omniverse and Cosmos running on NVIDIA RTX PRO Servers. A critical bottleneck in generalist robotics development is the scarcity of real-world data, which is expensive and difficult to collect, especially for rare edge cases. Omniverse allows developers to generate vast amounts of physically accurate, diverse synthetic data—including 2D/3D images, depth maps, and motion data—to bootstrap model training. Furthermore, this platform facilitates the creation of digital twins, enabling developers to simulate and rigorously test robot models in risk-free virtual environments before real-world deployment. Frameworks like Isaac Sim, built on Omniverse, provide a safe space for robots to learn from mistakes without endangering humans or damaging costly hardware.

Finally, for on-robot inference and real-time operation, NVIDIA offers Jetson AGX Thor, a compact, energy-efficient computer designed to run multimodal AI reasoning models directly on the robot. It provides the compute needed to process sensor data, reason, plan, and execute actions within milliseconds, so the robot can interact responsively with people and its surroundings and operate autonomously and safely in dynamic environments.

The value of the approach lies in how the three computers work together. For instance, the "Mega" blueprint, built on Omniverse, serves as a factory digital twin in which industrial enterprises such as Foxconn and Amazon Robotics can test and optimize entire robot fleets in simulation. This software-in-the-loop testing helps teams anticipate and mitigate problems before real-world deployment, reducing risk and cost. Companies across sectors, including Universal Robots and RGo Robotics as well as humanoid robot makers such as Agility Robotics and Boston Dynamics, are already adopting NVIDIA's integrated platform to accelerate robotics work ranging from cobots to complex humanoids.