MoNaCo is a new benchmark designed to test whether modern language models can answer realistic, research-style questions that require synthesizing information across dozens or even hundreds of sources. The dataset fills a gap left by existing benchmarks, which tend to be either too simple or artificially complex in ways that do not reflect genuine information needs.

Realistic and Complex Questions

The MoNaCo dataset contains 1,315 human-written questions designed to mimic the type of queries a political scientist, history professor, or amateur chef might ask. On average, each question is just 14.5 words long but requires more than five reasoning steps and evidence from over 43 Wikipedia pages. Supporting evidence comes in several formats: 67.8% from tables, 29.5% from text, and 2.7% from lists.

Compared to benchmarks like HotpotQA, MuSiQue, or QAMPARI, MoNaCo demands far more evidence per question and includes advanced aggregation and arithmetic. With 8,549 list questions and over 40,000 boolean questions, it is the largest collection of its kind. Each question is paired with a gold reasoning chain, allowing researchers to inspect, verify, and reproduce the logic.

Innovative Annotation Pipeline

Building MoNaCo required a novel pipeline. Crowd workers created questions from specific personas, such as professors or chefs, ensuring natural phrasing and diverse topics. Expert annotators then decomposed the questions into sub-questions using the QDMR formalism. An automated execution engine generated follow-ups and computed answers for aggregation steps.
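To make the decompose-then-execute idea concrete, here is a minimal sketch in Python. It is not the authors' engine: the `Step` class, the operator names (`select`, `project`, `filter`, `aggregate`), the `{x}` follow-up templates, and the toy answers are all hypothetical, chosen only to show how follow-up questions can be generated from earlier answers and how an aggregation step can be computed automatically.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    """One step of a QDMR-style decomposition (hypothetical, simplified schema)."""
    op: str             # "select", "project", "filter", or "aggregate"
    template: str = ""  # question text; "{x}" is filled with an earlier answer
    ref: int = -1       # index of the earlier step this step builds on
    agg: str = ""       # "count" or "sum", used only by aggregate steps


def execute(steps: List[Step], ask: Callable[[str], list]) -> List[list]:
    """Run the steps in order and return the list of answers for every step.

    `ask` stands in for the human annotators: it maps an intermediate
    question to a list of answers.
    """
    results: List[list] = []
    for step in steps:
        if step.op == "select":
            results.append(list(ask(step.template)))
        elif step.op == "project":
            # Generate one follow-up question per earlier answer.
            out = []
            for item in results[step.ref]:
                out.extend(ask(step.template.format(x=item)))
            results.append(out)
        elif step.op == "filter":
            # Keep the earlier answers whose yes/no follow-up is "yes".
            kept = [item for item in results[step.ref]
                    if ask(step.template.format(x=item)) == ["yes"]]
            results.append(kept)
        elif step.op == "aggregate":
            # Aggregations are computed, not asked.
            values = results[step.ref]
            if step.agg == "count":
                results.append([len(values)])
            elif step.agg == "sum":
                results.append([sum(values)])
            else:
                raise ValueError(f"unknown aggregate: {step.agg}")
        else:
            raise ValueError(f"unknown operator: {step.op}")
    return results


# Toy, made-up answers (not taken from the dataset).
TOY_ANSWERS = {
    "Which cities are in the toy region?": ["Avalon", "Brindle", "Corvin"],
    "Does Avalon have more than one million residents?": ["yes"],
    "Does Brindle have more than one million residents?": ["no"],
    "Does Corvin have more than one million residents?": ["yes"],
}

steps = [
    Step("select", "Which cities are in the toy region?"),
    Step("filter", "Does {x} have more than one million residents?", ref=0),
    Step("aggregate", ref=1, agg="count"),
]
results = execute(steps, TOY_ANSWERS.__getitem__)
final_answer = results[-1]
```

In this sketch every intermediate answer is kept in `results`, which mirrors the idea of a reasoning chain that can be inspected step by step.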

The process produced over 90,000 intermediate questions and their evidence, supported by multi-layered quality control. Human reviews, crowd worker vetting, and large language models were all used to validate annotations.

Frontier Model Performance on MoNaCo

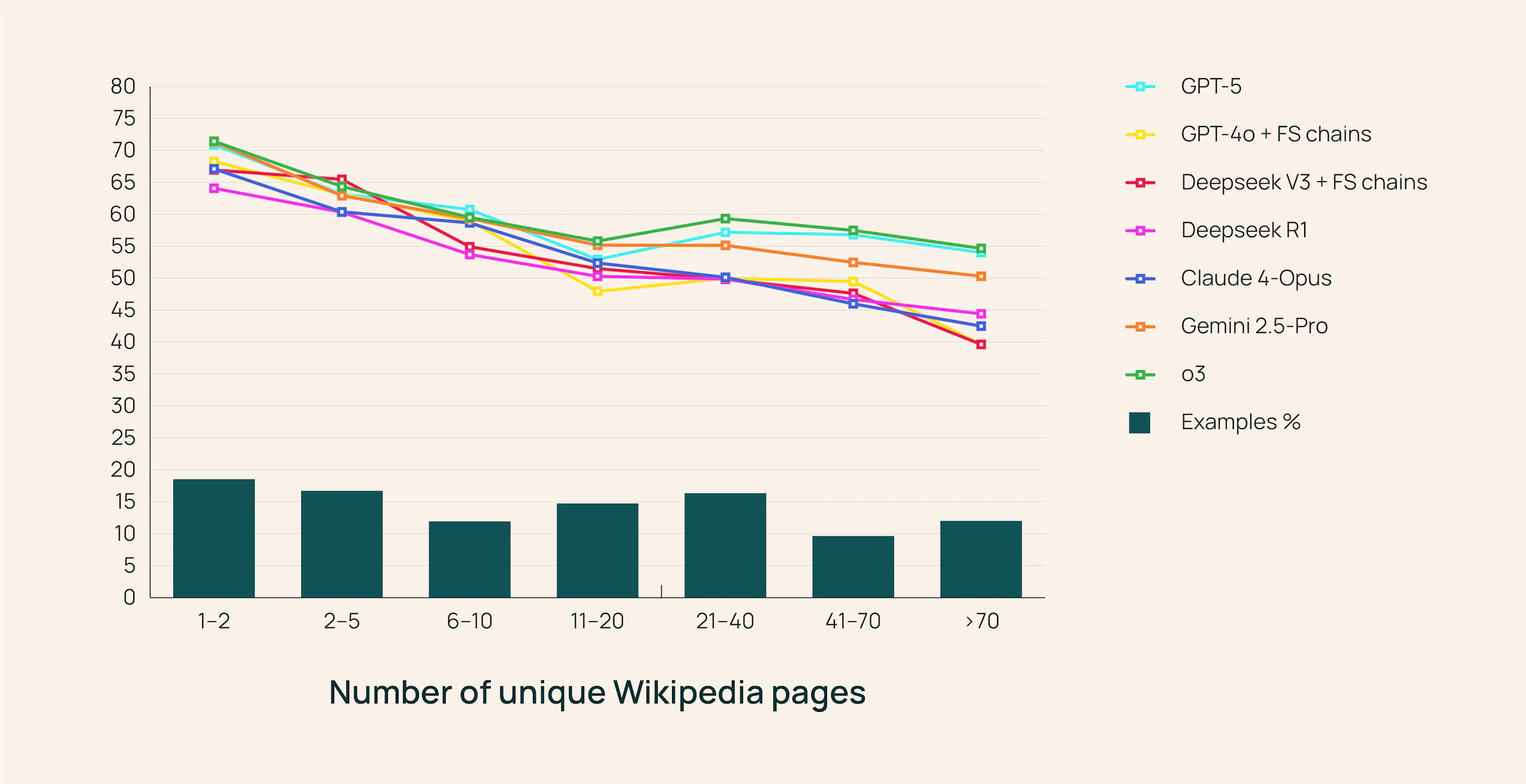

The benchmark exposed weaknesses in today’s most advanced models. Researchers tested 15 frontier LLMs, including OpenAI’s GPT-5 and reasoning-focused o3, Anthropic’s Claude Opus 4, and Google’s Gemini 2.5 Pro. Even the top performer, o3, achieved only 61.18 F1 and fully answered just 38.7% of questions.

Reasoning-oriented models generally outperformed vanilla ones, but the improvements were modest. GPT-5 scored 60.11 F1 and Claude Opus 4 reached 55.03. Performance dropped sharply as the number of required reasoning steps or supporting documents increased. List questions proved especially difficult, with accuracy collapsing on long lists.
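For readers unfamiliar with F1 on list answers, the sketch below shows one common way to compute it: exact match over normalized answer strings. This is an assumption for illustration only. The article does not say how MoNaCo matches predicted answers to gold answers, so the benchmark's actual scoring may differ, and the `list_f1` function and its normalization are hypothetical.

```python
from typing import Iterable, Tuple


def list_f1(predicted: Iterable[str], gold: Iterable[str]) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) for a predicted answer list vs. a gold list.

    Assumption: answers match only if they are equal after lowercasing and
    collapsing whitespace. Duplicates are ignored.
    """
    def normalize(text: str) -> str:
        return " ".join(text.lower().split())

    pred = {normalize(p) for p in predicted}
    ref = {normalize(g) for g in gold}

    # Edge case: if either side is empty, score 1.0 only when both are empty.
    if not pred or not ref:
        score = 1.0 if pred == ref else 0.0
        return score, score, score

    overlap = len(pred & ref)
    if overlap == 0:
        return 0.0, 0.0, 0.0

    precision = overlap / len(pred)  # share of predicted items that are correct
    recall = overlap / len(ref)      # share of gold items that were found
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy example: 2 of 3 predictions are correct, and 2 of 4 gold answers are found.
precision, recall, f1 = list_f1(["A", "b", "c"], ["a", "b", "d", "e"])
```

Here precision is 2/3 and recall is 1/2, which gives an F1 of 4/7. A long gold list penalizes recall for every missing item, which is one way to see why long list questions are hard to answer in full.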

Retrieval and RAG Challenges

The researchers also evaluated retrieval-augmented generation (RAG) on MoNaCo. Results showed that naive retrieval often hurts performance. Oracle retrieval, which supplies the gold evidence, improved scores for models like GPT-4o and Llama 3.1-405B, but BM25 retrieval actually reduced accuracy compared to closed-book performance. This highlights the difficulty current models face in filtering noise from retrieved documents.
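As a rough illustration of why lexical retrieval can introduce noise, here is a small, self-contained sketch of Okapi BM25 ranking in Python. It is not the retrieval setup used in the study: the whitespace tokenizer, the parameter values `k1=1.5` and `b=0.75`, and the toy documents are all assumptions made for this example.

```python
import math
from collections import Counter
from typing import List


def bm25_rank(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[int]:
    """Return document indices sorted from highest to lowest BM25 score."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    if n_docs == 0:
        return []
    # Average document length; fall back to 1.0 if every document is empty.
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs or 1.0
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            tf = term_freq.get(term, 0)
            if tf == 0:
                continue
            n_t = doc_freq[term]
            idf = math.log((n_docs - n_t + 0.5) / (n_t + 0.5) + 1.0)
            length_norm = 1.0 - b + b * len(tokens) / avg_len
            score += idf * tf * (k1 + 1.0) / (tf + k1 * length_norm)
        scores.append(score)
    return sorted(range(n_docs), key=lambda i: scores[i], reverse=True)


# Toy, made-up documents (not from the dataset).
toy_docs = [
    "the mayor opened a new bridge in the harbor district",
    "bridge is also a card game played with a standard deck",
    "the harbor festival features food stalls and music",
]
ranking = bm25_rank("harbor bridge", toy_docs)
```

In this toy case the first document matches both query terms and ranks highest, but the card-game document still receives a nonzero score because it shares the word "bridge". A retriever that returns the top few results would pass that irrelevant text to the model, which is the kind of noise the paragraph above describes.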

Beyond a Dataset: A Research Framework

MoNaCo goes beyond being a dataset. It provides a framework to evaluate:

- Factuality (parametric knowledge)

- Long-context reasoning

- Multi-document retrieval performance

- End-to-end retrieval-augmented generation

Because it includes complete reasoning chains, MoNaCo can also train next-generation “Deep Research” systems. The dataset, code, and model outputs are publicly available under an ODC-BY license, inviting broad adoption by researchers.

Conclusion: Raising the Bar for AI Reasoning

MoNaCo sets a new benchmark for evaluating language models. Early results make clear that even advanced models cannot yet perform the type of multi-document reasoning that humans handle routinely. Progress will require stronger retrieval integration, better long-context handling, and more robust list generation.

By combining natural questions, detailed reasoning chains, and open access, MoNaCo challenges researchers to build more factual, trustworthy AI systems.