The true measure of an AI model's utility is not its benchmark scores alone but how well it fits into the messy, dynamic reality of human workflows. That philosophy underpins MiniMax M2, the latest MiniMax model, which Senior Researcher Olive Song presented at the AI Engineer Code Summit. Song's talk highlighted MiniMax's dual role as a leading independent model lab and an application developer. That duality shapes how M2 is designed and trained, particularly its agentic capabilities for coding and workplace tasks.

MiniMax is unusual in that it both builds foundation models and ships AI-native applications on top of them. This integrated strategy gives the team "first-hand experience" from its own in-house developers, so models like M2 are shaped by the practical needs of the developer community. Song presented this direct feedback loop as a key differentiator: it pushes MiniMax toward models that are useful and efficient in real-world scenarios, not merely powerful in theory.

MiniMax describes M2 as "open-weight, coding-first, best-in-class." The model has approximately 10 billion activated parameters, the subset of its mixture-of-experts weights used for each token. Its agentic-by-design architecture targets coding and general workplace tasks and prioritizes speed, cost-efficiency, and scalability. MiniMax pursues these goals through a training regimen designed to mirror, and improve on, the real developer experience.

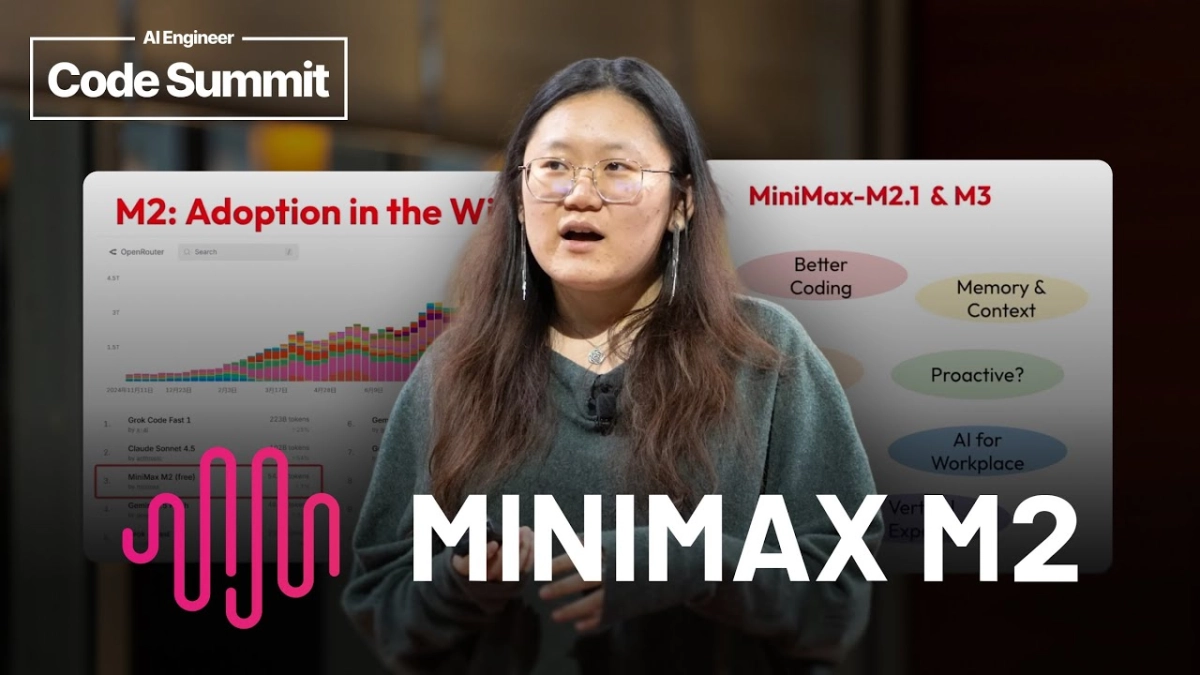

Early results suggest M2 is competitive: it ranks highly across a range of intelligence and agentic benchmarks. More telling is its early adoption in the wild. M2 achieved the most downloads in its first week and climbed into the top three by token usage on OpenRouter. Song was candid that "numbers don't tell everything," stressing that real success is measured by community adoption and practical effectiveness rather than leaderboard position. This rapid real-world uptake lends support to M2's developer-centric design.

Much of M2's behavior traces back to its training methodology. For coding and the development experience, M2 relies on "scaled environments and experts." Training draws on more than 100,000 real GitHub repositories, together with their issues and tests, spanning many programming languages, including JS, TS, HTML, CSS, Python, Java, Go, C++, Kotlin, and Rust. A high-concurrency infrastructure of more than 5,000 sandboxes supports tens of thousands of concurrent training instances, exposing the model to a vast and diverse range of coding contexts.
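To make the concurrency idea concrete, here is a minimal Python sketch of fanning coding tasks out to a capped pool of sandboxes with `asyncio`. It is not MiniMax's infrastructure: `Task`, `SandboxPool`, and `collect_rollouts` are hypothetical names, and the sandbox body is a placeholder that fakes a test outcome instead of checking out a repository, letting a model patch it, and running its tests.

```python
import asyncio
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    repo: str      # hypothetical repository identifier
    issue_id: int  # hypothetical issue number


@dataclass(frozen=True)
class Rollout:
    task: Task
    tests_passed: bool


class SandboxPool:
    """Caps how many sandboxes run at once and records the observed peak."""

    def __init__(self, max_sandboxes: int) -> None:
        self._sem = asyncio.Semaphore(max_sandboxes)
        self.active = 0
        self.peak = 0

    async def run(self, task: Task) -> Rollout:
        async with self._sem:  # wait for a free sandbox slot
            self.active += 1
            self.peak = max(self.peak, self.active)
            try:
                # Placeholder for: check out repo, let the model patch it, run tests.
                await asyncio.sleep(0.001)
                passed = task.issue_id % 2 == 0  # fake, deterministic test outcome
                return Rollout(task, passed)
            finally:
                self.active -= 1


async def collect_rollouts(tasks: list[Task], max_sandboxes: int) -> tuple[list[Rollout], int]:
    """Run every task through the pool; results keep the input order."""
    pool = SandboxPool(max_sandboxes)
    rollouts = await asyncio.gather(*(pool.run(t) for t in tasks))
    return list(rollouts), pool.peak


if __name__ == "__main__":
    tasks = [Task(f"example/repo-{i % 3}", i) for i in range(20)]
    rollouts, peak = asyncio.run(collect_rollouts(tasks, max_sandboxes=4))
    print(len(rollouts), peak, sum(r.tests_passed for r in rollouts))
```

A semaphore is the simplest way to bound concurrency; a real training cluster would also need process or container isolation, timeouts, and retries, which this sketch omits.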

A particularly insightful aspect of MiniMax's approach is its use of "expert developers as reward models." Rather than relying solely on automated metrics, MiniMax brings its own frontend, backend, and data engineers directly into the model development and training cycle. These experts define problems, identify which model behaviors are desirable, and supply precise evaluations and reward signals for the model's output. This human-centric reward design is meant to make M2 genuinely "developer-friendly," handling complex work such as bug fixing and repository refactoring in ways that make sense to human developers.
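The talk does not spell out how expert judgments become a scalar reward. The sketch below shows one plausible shape, assuming a hypothetical rubric (`RUBRIC_WEIGHTS`) and hypothetical names (`ExpertReview`, `rubric_score`, `episode_reward`): automated tests act as a gate, and the experts' weighted rubric scores are averaged into the final reward.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical rubric: criterion -> weight. Not MiniMax's actual rubric.
RUBRIC_WEIGHTS: dict[str, float] = {
    "correctness": 0.5,
    "readability": 0.25,
    "minimal_diff": 0.25,
}


@dataclass(frozen=True)
class ExpertReview:
    reviewer: str             # e.g. "frontend", "backend", "data"
    scores: dict[str, float]  # rubric criterion -> score in [0, 1]


def rubric_score(review: ExpertReview, weights: dict[str, float] = RUBRIC_WEIGHTS) -> float:
    """Weighted average of one expert's rubric scores; missing criteria count as 0."""
    total = sum(weights.values())
    return sum(w * review.scores.get(name, 0.0) for name, w in weights.items()) / total


def episode_reward(tests_passed: bool, reviews: list[ExpertReview]) -> float:
    """Gate on automated tests, then average the experts' rubric scores."""
    if not reviews:
        raise ValueError("at least one expert review is required")
    if not tests_passed:
        return 0.0
    return mean(rubric_score(r) for r in reviews)


if __name__ == "__main__":
    reviews = [
        ExpertReview("backend", {"correctness": 1.0, "readability": 1.0, "minimal_diff": 1.0}),
        ExpertReview("frontend", {"correctness": 1.0, "readability": 0.5}),
    ]
    print(episode_reward(True, reviews))
```

Gating on tests keeps a fluent but broken patch from earning reward, while the rubric lets experts express preferences that tests cannot capture.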

M2 is also built for long-horizon tasks, which require interacting with complex, changing environments and using multiple tools in sequence, with reasoning in between. MiniMax enables this with what it calls "interleaved thinking." Conventional reasoning models follow a linear sequence of thought, tool call, and response. M2 instead interleaves thinking and content generation with tool calls and their responses, so it can reason again after every tool result. This lets it cope with environmental noise and shifting conditions, such as unpredictable stock market movements, by re-evaluating its approach and making new decisions based on real-time feedback. It can automate multi-step workflows across applications such as Gmail, Notion, and Terminal with minimal human intervention.
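The difference from a linear pipeline is easiest to see in the control loop. Below is a minimal Python sketch, assuming a hypothetical model interface that returns one `Step` (a thinking block plus either a tool call or a final answer) per turn. `run_interleaved`, `toy_model`, and the flaky `get_quote` tool are illustrative only, not MiniMax's API. The point is that the model reasons again after every tool observation, so a timeout leads to a retry rather than a guess.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterator, Optional


@dataclass
class Step:
    thinking: str                 # reasoning emitted before acting
    tool: Optional[str] = None    # tool to call, if any
    args: dict = field(default_factory=dict)
    answer: Optional[str] = None  # a final answer ends the loop


Model = Callable[[list], Step]


def run_interleaved(model: Model, tools: dict, user_msg: str, max_steps: int = 8):
    history = [{"role": "user", "content": user_msg}]
    for _ in range(max_steps):
        step = model(history)
        # Keep every thinking block in the transcript so later steps can build on it.
        history.append({"role": "assistant", "thinking": step.thinking,
                        "tool": step.tool, "args": step.args, "content": step.answer})
        if step.answer is not None:
            return step.answer, history
        # Run the tool and feed the observation back before the next round of thinking.
        history.append({"role": "tool", "name": step.tool,
                        "content": tools[step.tool](**step.args)})
    raise RuntimeError("step budget exhausted without a final answer")


def toy_model(history: list) -> Step:
    """Scripted stand-in for the model: re-plans after every tool observation."""
    last = history[-1]
    if last["role"] == "user":
        return Step("I need the current quote before deciding.",
                    tool="get_quote", args={"symbol": "ACME"})
    if last["content"] == "timeout":
        return Step("The quote timed out; retry instead of guessing.",
                    tool="get_quote", args={"symbol": "ACME"})
    price = float(last["content"])
    decision = "buy" if price < 100 else "hold"
    return Step(f"Quote is {price}; threshold is 100, so {decision}.", answer=decision)


def make_flaky_quote(responses: list) -> Callable[..., str]:
    """Hypothetical noisy tool: returns the scripted responses in order."""
    it: Iterator[str] = iter(responses)

    def get_quote(symbol: str) -> str:
        return next(it)

    return get_quote


if __name__ == "__main__":
    tools = {"get_quote": make_flaky_quote(["timeout", "101.5"])}
    answer, history = run_interleaved(toy_model, tools, "Should I buy ACME?")
    print(answer)
    for msg in history:
        print(msg["role"], msg.get("thinking") or msg.get("content"))
```

In a linear design the model would plan once up front and the timeout would simply propagate; here the second thinking block notices it and changes course.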

Another critical characteristic is M2's robust generalization across agent scaffolds. MiniMax initially treated this as a matter of "tool scaling," that is, simply training the model on a sufficiently wide variety of tools. The team soon realized that true agent generalization requires the model to "adapt to perturbations across the model's entire operational space." MiniMax achieves this with a perturbation pipeline during training that systematically varies tool information, system prompts, user prompts, and tool responses. The goal is for M2 to generalize to unseen tools and novel agent scaffolds rather than breaking down in unfamiliar contexts.
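A perturbation step of this kind can be sketched as a function that re-renders one training episode in many surface forms while keeping its meaning fixed. This is a minimal Python illustration under stated assumptions: `Episode`, `perturb`, and the variation pools are hypothetical, and the perturbations shown (system-prompt swaps, tool reordering, user-prompt phrasing, tool-response formatting) are simple stand-ins for whatever MiniMax actually varies.

```python
import json
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    system_prompt: str
    tools: tuple        # (name, description) pairs
    user_prompt: str
    tool_payload: dict  # raw tool result, before formatting


@dataclass(frozen=True)
class RenderedEpisode:
    system_prompt: str
    tools: tuple
    user_prompt: str
    tool_response: str


# Hypothetical variation pools; a real pipeline would be far richer.
SYSTEM_VARIANTS = (
    "You are an autonomous assistant working in a developer's terminal.",
    "Act as a software engineer. Use the available tools when needed.",
)
USER_PREFIXES = ("", "Please ", "Your task: ")


def format_tool_response(payload: dict, rng: random.Random) -> str:
    """Render the same tool result in one of several surface formats."""
    style = rng.choice(("json", "json_indented", "key_value"))
    if style == "json":
        return json.dumps(payload)
    if style == "json_indented":
        return json.dumps(payload, indent=2)
    return "\n".join(f"{key}: {value}" for key, value in payload.items())


def perturb(ep: Episode, rng: random.Random) -> RenderedEpisode:
    """Vary surface form (prompts, tool order, response format) but not task semantics."""
    system = rng.choice((ep.system_prompt,) + SYSTEM_VARIANTS)
    tools = list(ep.tools)
    rng.shuffle(tools)  # tool order should not matter to the policy
    prefix = rng.choice(USER_PREFIXES)
    user = (prefix + ep.user_prompt[0].lower() + ep.user_prompt[1:]) if prefix else ep.user_prompt
    return RenderedEpisode(system, tuple(tools), user,
                           format_tool_response(ep.tool_payload, rng))


if __name__ == "__main__":
    base = Episode(
        system_prompt="You are a helpful coding agent.",
        tools=(("read_file", "Read a file from the repository."),
               ("run_tests", "Run the test suite and report failures.")),
        user_prompt="Fix the failing test in utils.py.",
        tool_payload={"exit_code": 1, "failed": 2},
    )
    rng = random.Random(0)
    for _ in range(3):
        print(perturb(base, rng))
```

Seeding `random.Random` makes each perturbed dataset reproducible, which helps when comparing training runs.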

Because M2 is compact and cost-effective, it scales well to multi-agent setups. It can operate as specialized agents for tasks such as research, web development, and report generation, working in parallel on complex, long-running agentic tasks. This opens new possibilities for automating intricate business processes and boosting team productivity.

Looking ahead, MiniMax plans to evolve M2 into M2.1 and M3, focusing on "better coding," improved memory and context management, integrated audio and video generation, proactive AI for workplace applications, and specialized "vertical experts" for specific industry domains.
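The parallel, multi-agent pattern described above reduces to a fan-out/fan-in structure. Here is a minimal Python sketch using the standard library's `ThreadPoolExecutor`. The agent functions are hypothetical placeholders that return strings, standing in for separate M2-backed agents that would make their own model and tool calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Agent = Callable[[str], str]


# Hypothetical specialists; in practice each would wrap its own model calls and tools.
def research_agent(brief: str) -> str:
    return f"collected background sources on {brief}"


def webdev_agent(brief: str) -> str:
    return f"drafted a landing page for {brief}"


def run_specialists(brief: str, specialists: dict[str, Agent], max_workers: int = 4) -> dict[str, str]:
    """Fan the same brief out to every specialist in parallel and gather results by role."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {role: pool.submit(agent, brief) for role, agent in specialists.items()}
        return {role: future.result() for role, future in futures.items()}


def report_agent(brief: str, findings: dict[str, str]) -> str:
    """Fan-in step: merge the specialists' outputs into one report."""
    lines = [f"Report: {brief}"]
    lines += [f"- {role}: {text}" for role, text in sorted(findings.items())]
    return "\n".join(lines)


if __name__ == "__main__":
    brief = "ACME product launch"
    findings = run_specialists(brief, {"research": research_agent, "webdev": webdev_agent})
    print(report_agent(brief, findings))
```

Threads suit this shape because real agents spend most of their time waiting on network calls; the report agent runs only after every specialist has finished.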