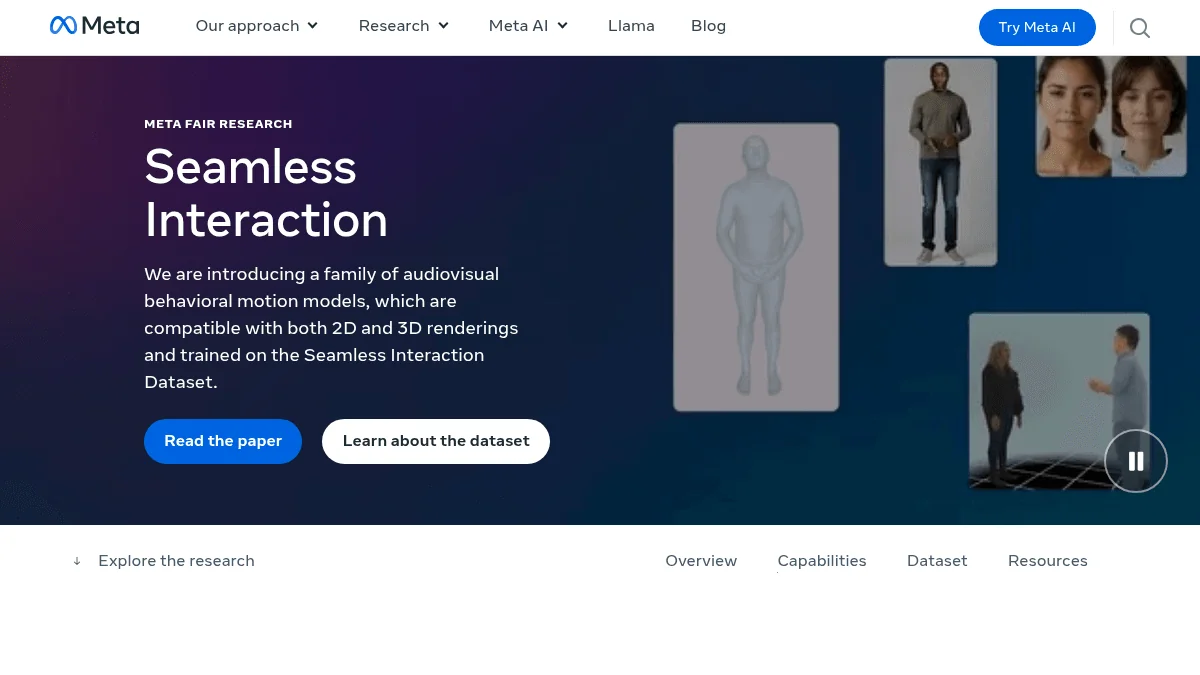

Meta’s AI researchers are edging closer to a long-sought frontier in computing: avatars that don’t just look like us, but move, react, and engage with the nuance of genuine human presence. In its latest announcement, the company’s Fundamental AI Research (FAIR) group unveiled a set of audiovisual behavioral motion models that generate lifelike gestures and facial expressions from audio and video. The project, dubbed Seamless Interaction, is backed by an unprecedented dataset of over 4,000 hours of paired conversations and aims to bridge the chasm between mechanical avatars and embodied social interaction.

To appreciate the significance, it helps to understand why this problem is hard.

Human conversation is a dynamic dance. People don’t just take turns speaking; they nod, mirror each other’s expressions, and signal attentiveness through micro-gestures. Capturing these subtle signals is difficult enough in a lab, let alone encoding them into a model robust enough to generalize. Meta’s dataset addresses this by blending natural conversations with scripted performances that evoke complex emotions—disagreement, regret, surprise—effectively mapping the long tail of authentic social behavior.

The models themselves, which Meta calls Dyadic Motion Models, are trained to generate expressive motion in two modes. In the simpler configuration, the model consumes only audio to animate an avatar’s face and body. Even this unlocks an eerie fidelity: think of a podcast recording brought to life with fully rendered gestures that match the tone of the conversation. A more advanced version also incorporates visual input from both speakers, allowing the system to recreate synchrony: smiles that appear in unison, glances that coordinate, subtle cues that signal rapport or tension.

This technology is more than an academic curiosity. It has the potential to redefine telepresence and social VR, fields that have historically failed to clear the “uncanny valley” of digital interaction. Even Meta’s own Codec Avatars, high-resolution 3D facsimiles of users, have looked and sounded compelling but often behaved like disembodied puppets. The Dyadic Motion Models aim to solve this by providing richer behavioral signals. And crucially, Meta is releasing the dataset and a technical report, inviting other researchers to iterate, critique, and build upon these foundations.

While the scientific ambition here is clear, the practical risks are equally profound. Ultra-realistic avatars raise predictable questions about consent and manipulation: if you can generate a photorealistic version of someone responding to speech that never happened, you also inherit the burden of authenticating what’s real. Meta has anticipated this with a multilayered watermarking system, comprising AudioSeal and VideoSeal, that embeds signals into generated content to verify provenance. It’s a reasonable first step, though the challenge of securing trust in synthetic media will inevitably outpace technical safeguards.

The effort also underscores Meta’s broader ambition to own the social infrastructure of immersive computing. As the hype cycles around LLMs and generative art crest and recede, the company is wagering that embodied AI, meaning agents that can converse, gesture, and relate, will be the next wave. In this view, its planned metaverse would be not only a 3D environment but also a platform animated by social machines that feel alive.

Skeptics will point out that Meta’s vision still faces adoption hurdles. Codec Avatars require specialized capture hardware, and social VR has yet to prove that ordinary users crave this level of realism. But dismissing the research as speculative misses the deeper trajectory: foundational models are evolving beyond language into the territory of behavior, where words, intonation, and movement coalesce into authentic communication.

By releasing the Seamless Interaction Dataset and Dyadic Motion Models, Meta is effectively placing a bet that the future of AI will not just be generative but performative. It’s a bet on avatars that can do more than speak—they can understand, react, and mirror the subtle choreography of being human.