SPEAKERS

Briefcam

Shmuel Peleg, Co-founder and Chief Scientist

Looking at video affects how you perceive sound, and separating the audio is a tricky problem when many people are speaking. Plenty of video recognition research is breaking barriers, but it seldom tackles audio too. Vid2speech is Briefcam’s new project, which aims to accurately isolate speech from audio and video cues using an audio-visual deep model built on a dilated convolutional neural network. Given a video that shows the face of the speaker(s), the technology enhances the speech of the chosen subject, so it can be used to isolate one person’s audio in a video of several people talking. Professor Peleg demonstrated the technology on ESPN’s sports talk show First Take, whose notorious personalities Stephen A. Smith and Max Kellerman commonly talk over each other, making the content incoherent. Peleg showed Vid2speech muting the audio of one commentator while both were yelling simultaneously.
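
The talk names a dilated convolutional network but gives no architecture details. As a rough, hypothetical illustration (not Briefcam’s code), the sketch below shows what dilation does along the time axis: each layer’s taps are spaced further apart, so a short stack of small kernels covers a long audio or video context. The function names are our own.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """'Valid' 1-D dilated convolution (cross-correlation, as in most
    deep learning libraries): kernel taps are spaced `dilation` samples apart."""
    k = len(kernel)
    span = (k - 1) * dilation + 1  # input samples covered by one output
    return [
        sum(kernel[j] * signal[i + j * dilation] for j in range(k))
        for i in range(len(signal) - span + 1)
    ]


def receptive_field(kernel_size, dilations):
    """Receptive field (in input samples) of stacked stride-1 dilated convs."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# Doubling the dilation per layer grows the context exponentially:
# four kernel-3 layers with dilations 1, 2, 4, 8 see 31 time steps.
print(receptive_field(3, [1, 2, 4, 8]))  # 31
```

The appeal of this design for speech separation is that the model can relate a mouth movement to sound several frames away without pooling away temporal resolution.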

IBM

Aya Soffer, VP of AI Tech

Aya Soffer stated that AI has conquered audio and image recognition, surpassing human skill. The same has not yet happened for video recognition: human intuition can still identify the content of a video better than the best deep learning algorithms. Video comprehension is a major focus for IBM’s research team, directed at enterprise applications. In collaboration with MIT, the team created a new large-scale video data set called Moments in Time, comprising millions of video clips, to support their development efforts, such as segmenting video into semantic scenes, interpolating full video scenes from selected frames, recognizing objects and human actions, summarizing a video’s highlights, and few-shot learning for object recognition.

Blink Technologies

Soliman Nasser, Lead Research and Development

Nasser began by explaining the geometry of the human eye before detailing Blink’s eye-tracking technology. They track eye movements with RGB cameras to predict the gaze point and, from it, the gaze vectors, the lines connecting your eyes to the gaze point. They reconstruct a 3D model of the eye to find its intrinsic parameters, define the optical axis of each eye, and then calculate the point of gaze by triangulation. They crowdsource their data (real and synthetic) and feed it into a deep learning algorithm that predicts the gaze vector. The market is largely saturated with intrusive infrared sensors, whereas Blink Technologies’ algorithms work with any RGB camera in real-world environments, with limited CPU power and no GPU.
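
The triangulation step can be sketched with plain geometry. Assuming each eye has already been reduced to an origin point and an optical-axis direction (that modelling step is Blink’s and is not shown), the two rays rarely meet exactly, so a common choice is the midpoint of the shortest segment between them. This is a generic closest-point-of-approach method with names of our own choosing, not Blink’s implementation.

```python
def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_gaze(p1, d1, p2, d2, eps=1e-12):
    """Estimate a 3-D gaze point from two eye rays p + t*d.

    Minimises |(p1 + t*d1) - (p2 + s*d2)| and returns the midpoint of
    the closest points on the two rays."""
    w0 = _sub(p1, p2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("eye rays are parallel; gaze point is at infinity")
    t = (b * e - c * d) / denom  # parameter along the first ray
    s = (a * e - b * d) / denom  # parameter along the second ray
    q1 = [p + t * v for p, v in zip(p1, d1)]
    q2 = [p + s * v for p, v in zip(p2, d2)]
    return [(x + y) / 2 for x, y in zip(q1, q2)]


# Eyes 6 cm apart (metres), both looking at a point 50 cm ahead.
print(triangulate_gaze([-0.03, 0, 0], [0.03, 0, 0.5], [0.03, 0, 0], [-0.03, 0, 0.5]))
```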

Lightricks

Ofir Bibi, Director of Research

According to Bibi, around 50% of the pixels in a typical image are sky, so to achieve the best capture, Lightricks believes the sky portion should be aesthetically perfect. The startup’s research team trained a neural network to segment the sky background in still images, which is integrated into their highly popular Quickshot application. Video poses a greater challenge, and the startup developed an algorithm that automatically replaces the sky in video clips, compositing them with enhanced skies. They achieve temporal consistency in the segmentation network through a complex feedback loop and novel infrastructure, and during training they add noise to the data in many areas, extending and dilating the mask boundaries to mimic the errors of real video. The work was conducted by Tavi Halperin, Harel Cain and Michael Werman and will be presented at Eurographics 2019.
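
One plausible reading of the noise-based training is sketched below, under the assumption that the feedback loop feeds the previous frame’s sky mask back into the network. During training, the ground-truth mask is randomly dilated and speckled so the network learns to correct an imperfect previous mask rather than copy it. The helper names and parameters (`max_radius`, `flip_prob`) are hypothetical and are not taken from the Lightricks paper.

```python
import random


def dilate(mask, radius):
    """Binary dilation of a 0/1 mask with a (2r+1) x (2r+1) square element."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            # Every pixel within `radius` of a sky pixel becomes sky.
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    out[yy][xx] = 1
    return out


def noisy_previous_mask(gt_mask, max_radius=2, flip_prob=0.01, rng=None):
    """Corrupt a ground-truth sky mask so it resembles an imperfect
    prediction from the previous frame (hypothetical augmentation)."""
    if rng is None:
        rng = random.Random()
    # Grow the sky region by a random amount to mimic boundary errors.
    noisy = dilate(gt_mask, rng.randint(0, max_radius))
    # Flip a small fraction of pixels to mimic scattered mistakes.
    for row in noisy:
        for x in range(len(row)):
            if rng.random() < flip_prob:
                row[x] = 1 - row[x]
    return noisy
```

In such a setup, each training example would pair the current frame and `noisy_previous_mask(gt)` as input with the clean `gt` as the target.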

Imagry

Adham Ghazali, Co-founder and CEO

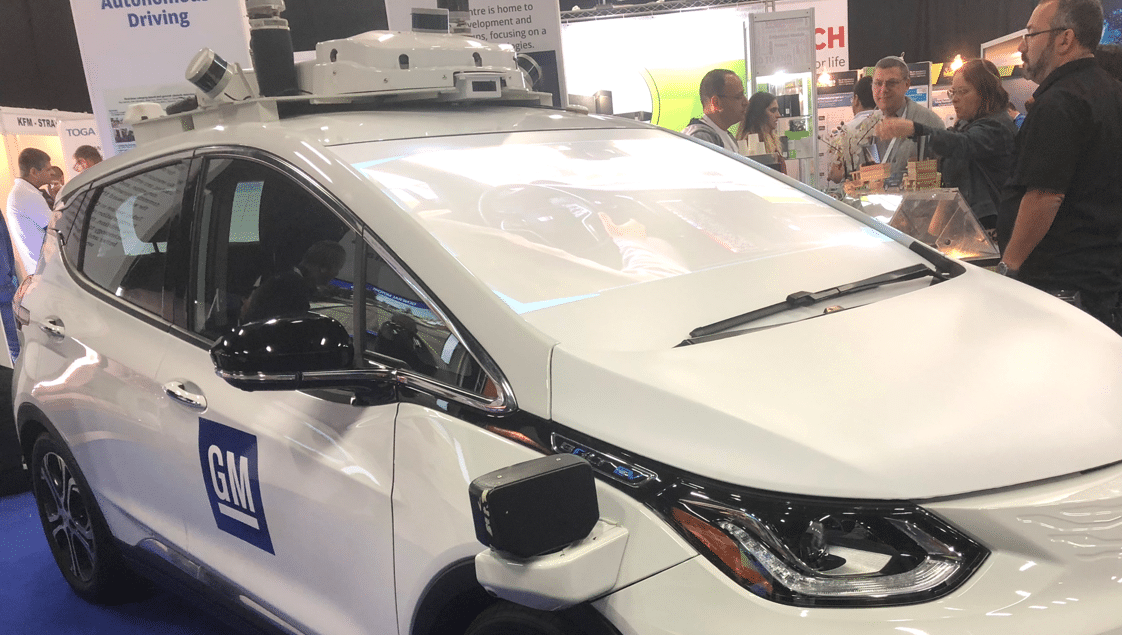

Autonomous driving software is a complex problem. Depth is the major drawback for cameras, and LiDAR lacks high-resolution information for active perception. Even the leading contender in the autonomous driving race, Waymo, has advanced localization by creating high-definition maps, which takes heavy human labor (1 hour of self-driving = 800 hours of annotation to build a high-definition map), and by using SLAM for optimization. But if a map changes, they need to redo the procedure, which makes scaling difficult. Imagry developed a novel architecture for autonomous driving software that can localize, perceive and drive in unknown environments, learning a driving policy without heuristics. It can train on one city’s roads and drive autonomously in another city. The startup also invented a motion path-finding algorithm called Aleph* (open sourced), which takes two hours to train on a laptop for a new function, exhibited promising runtime efficiency, and compared well to the industry benchmark N-step DQN. Ghazali encouraged the industry to conduct a comparison with Monte Carlo-based approaches.
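
The name Aleph* evokes the classic A* search algorithm, but the talk summary does not describe Aleph*’s internals. For readers unfamiliar with the baseline idea, here is plain A* on a 4-connected occupancy grid with a Manhattan-distance heuristic. It is background only, not Imagry’s algorithm, and the grid encoding (1 = obstacle) is our assumption.

```python
import heapq


def astar(grid, start, goal):
    """Classic A* on a 4-connected grid; grid[r][c] == 1 marks an obstacle.
    Returns the list of cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(p):  # admissible Manhattan-distance heuristic
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    g = {start: 0}
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if node == goal:
            path = [node]
            while node in came_from:
                node = came_from[node]
                path.append(node)
            return path[::-1]
        if cost > g[node]:
            continue  # stale heap entry; a cheaper route was found later
        r, c = node
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = node
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None
```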

VayaVision

Shmoolik Mangan, Algorithms Development Manager

VayaVision developed a low-level raw data fusion and perception system for autonomous vehicles, as opposed to an object fusion architecture. They use unified algorithms (unsupervised neural networks) to detect objects in the perceived environment from all pixel-level RGBd sensor data. Deep learning and computer vision algorithms are then applied to the upsampled HD 3D model. The typical sensor set for autonomous vehicles consists of several sensor types, and upon a sensor failure, the startup’s novel redundancy mechanisms allow the vehicle to keep driving rather than become stranded on an active road, albeit at reduced speed and only to the nearest garage.
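
To make “pixel-level raw data fusion” concrete, the sketch below projects sparse 3D points (for example from a LiDAR) into a camera image with a pinhole model and then fills every pixel from its nearest measurement, giving each RGB pixel a depth value (RGBd). It assumes the points are already in the camera frame with known intrinsics `fx, fy, cx, cy`. Nearest-neighbour filling is a crude stand-in for whatever upsampling VayaVision actually uses.

```python
def project_points(points, fx, fy, cx, cy, width, height):
    """Project camera-frame 3-D points (x right, y down, z forward) into a
    sparse depth image using a pinhole camera model."""
    depth = [[None] * width for _ in range(height)]
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            if depth[v][u] is None or z < depth[v][u]:
                depth[v][u] = z  # keep the nearest surface per pixel
    return depth


def upsample_nearest(depth):
    """Fill every pixel with the depth of the nearest measured pixel
    (brute force, for illustration only)."""
    measured = [(v, u, d) for v, row in enumerate(depth)
                for u, d in enumerate(row) if d is not None]
    if not measured:
        raise ValueError("no depth measurements to upsample")
    return [[min(measured, key=lambda m: (m[0] - v) ** 2 + (m[1] - u) ** 2)[2]
             for u in range(len(depth[0]))]
            for v in range(len(depth))]
```

Fusing at this level lets a single detector see colour and depth for every pixel, instead of merging object lists produced separately by each sensor.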

DeePathology.ai

Jacob Gildenblat, Co-founder and CTO

Automating cell detection requires annotating large amounts of data, which is usually very unbalanced. To alleviate this arduous task, DeePathology.ai developed the Cell Detection Studio, a DIY tool with which pathologists can easily create and train their own deep learning cell detection algorithms on their own data sets, with the help of Active Learning.
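
Active Learning reduces annotation effort by asking the pathologist to label only the examples the current model is least sure about. A common strategy is uncertainty sampling, sketched below. It assumes the model outputs a cell-vs-background probability per image patch. The patch IDs and the entropy criterion are illustrative choices, not necessarily what Cell Detection Studio does.

```python
import math


def entropy(p):
    """Binary entropy (in bits) of a cell-vs-background probability."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def select_for_annotation(predictions, budget):
    """Return the `budget` unlabeled patch IDs whose predictions are most
    uncertain (highest entropy); these go to the pathologist next."""
    ranked = sorted(predictions.items(), key=lambda kv: entropy(kv[1]), reverse=True)
    return [patch_id for patch_id, _ in ranked[:budget]]


preds = {"patch_a": 0.99, "patch_b": 0.5, "patch_c": 0.7, "patch_d": 0.02}
print(select_for_annotation(preds, 2))  # ['patch_b', 'patch_c']
```

After each labelling round the model is retrained and the selection repeats. With very unbalanced data, teams often combine this with class-aware sampling so rare cell types are not starved of labels.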

Google

Yael Pritch, Leader of Google Perception AI

The Google team is invested in putting computational photography and artificial intelligence inside mobile cameras. According to Pritch, software advancements, rather than optical hardware constrained by physical form factors, are where mobile camera innovation happens today. Two new camera upgrades were on display, portrait mode and Night Sight, with details on the convolutional neural networks and phase-detection autofocus behind them, which have won over conventional camera critics. Night Sight is a feature for the Pixel 1, 2 and 3 that produces high-quality captures in low-light environments. Portrait mode combines a machine learning network that learns to segment people from images with the phase-detection autofocus pixels, each split into two halves that see the scene through slightly different parts of the lens, repurposed to create a high-quality depth map. The Google Pixel 2 computational camera won the DPReview Awards in 2017, and the Google Pixel 3 won the same award in 2018. It’s the leading camera today, fueled by its AI software. Everything runs on the device, within the thermal and memory limitations of the Pixel 1, 2 and 3, and the Pixel software is backward compatible with all models.
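
A simplified sketch of how a depth map and a person mask can drive synthetic background blur follows. It assumes an idealised stereo relation between the two half-pixel views, Z = f·B/d, and a thin-lens-style rule in which blur grows with |1/Z − 1/Z_focus|. Segmented people are kept sharp. The function names and the `strength` parameter are hypothetical, and Google’s actual pipeline is considerably more involved.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Idealised stereo relation Z = f * B / d (depth in metres)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def blur_radius(depth_m, focus_depth_m, is_person, strength):
    """Hypothetical defocus rule: blur grows with |1/Z - 1/Z_focus|,
    roughly as in thin-lens optics; pixels segmented as a person stay sharp."""
    if is_person:
        return 0.0
    return strength * abs(1.0 / depth_m - 1.0 / focus_depth_m)


# Background 2 m away, subject in focus at 1 m.
print(blur_radius(2.0, 1.0, is_person=False, strength=10.0))  # 5.0
```

Combining the two cues is a design hedge: segmentation gives clean person boundaries even where the tiny dual-pixel baseline yields noisy depth, while depth grades the blur across the rest of the scene.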
