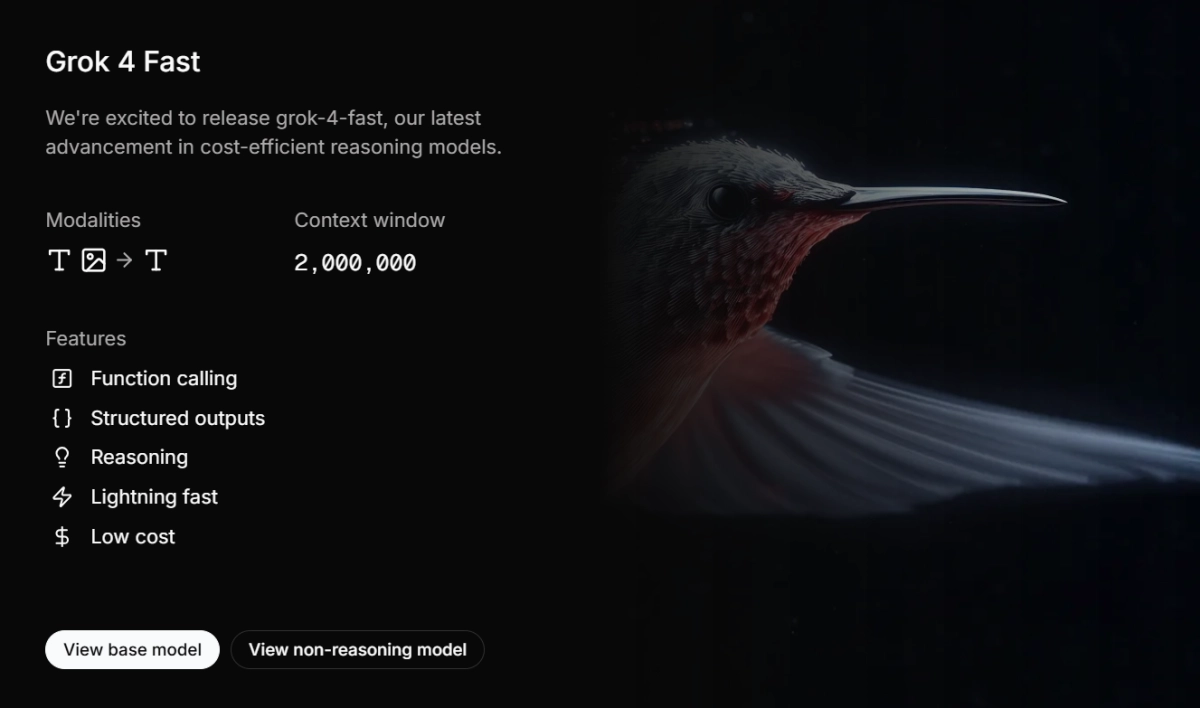

xAI has quietly rolled out a significant upgrade to its Grok 4 Fast models, expanding the context window to 2 million tokens. The upgrade lets an already cost-efficient reasoning model ingest entire codebases, long documents, or extended multi-turn conversations without the usual reliance on retrieval-augmented generation (RAG) pipelines.

Initially launched in September as a leaner alternative to the flagship Grok 4, the Fast variants now use a unified architecture refined through end-to-end reinforcement learning. This enables tool integration for web search, code execution, and multimodal analysis, positioning them as a versatile option for developers.

At its core, Grok 4 Fast pairs frontier-level performance with low prices. Input tokens cost $0.20 per million, rising to $0.40 per million beyond 128K tokens, while output tokens cost $0.50 to $1.00 per million. That significantly undercuts heavier rivals such as OpenAI's GPT-5 or Anthropic's offerings. Generous rate limits and cached prompts cut costs further, making high-volume deployments viable for startups and enterprises alike. Tool invocations, including advanced agentic features, remain free until November 21, 2025, which sweetens the deal for developers building autonomous agents. Combined with a 40% reduction in thinking tokens compared to Grok 4, this pricing places the model atop Artificial Analysis' efficiency rankings.
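To make the tiered pricing concrete, here is a minimal cost-estimation sketch in Python. It uses only the per-million rates quoted above. Two things are assumptions rather than facts from the source: that "128K" means 128,000 tokens, and that once a request's input exceeds that threshold the higher rate applies to the whole request, for both input and output. The function name `estimate_cost` is hypothetical, and the sketch ignores cached-prompt discounts and tool-call fees.

```python
from decimal import Decimal

# Rates in USD per million tokens, taken from the figures quoted in the article.
INPUT_RATE_SHORT = Decimal("0.20")
INPUT_RATE_LONG = Decimal("0.40")
OUTPUT_RATE_SHORT = Decimal("0.50")
OUTPUT_RATE_LONG = Decimal("1.00")

# Assumption: "128K" is read as 128,000 tokens.
LONG_CONTEXT_THRESHOLD = 128_000
MILLION = Decimal(1_000_000)


def estimate_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request under the assumed tier rule."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")

    # Assumption: exceeding the threshold moves the entire request
    # (input and output) onto the higher rates.
    long_context = input_tokens > LONG_CONTEXT_THRESHOLD
    input_rate = INPUT_RATE_LONG if long_context else INPUT_RATE_SHORT
    output_rate = OUTPUT_RATE_LONG if long_context else OUTPUT_RATE_SHORT

    return (input_tokens * input_rate + output_tokens * output_rate) / MILLION


if __name__ == "__main__":
    # A short request versus one that fills the full 2M-token window.
    print(estimate_cost(100_000, 10_000))
    print(estimate_cost(2_000_000, 10_000))
```

Under these assumptions, filling the entire 2M-token window with input costs well under a dollar per request, which is the crux of the article's cost argument. Actual billing may differ, so check the provider's pricing page before relying on these numbers.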

Performance and Practical Impact

Benchmark results support xAI's claims. Grok 4 Fast scores 85.7% on GPQA Diamond, 92% on AIME 2025, and 80% on LiveCodeBench. It also leads LMArena's Search Arena with a 1163 Elo score, 17 points ahead of its nearest competitor. In practical terms, developers report using it to refactor 10,000-line "spaghetti code" where competitors like Claude and GPT faltered, parse terabyte-scale logs in inputs of up to 900K tokens, or orchestrate Kubernetes clusters via tools. The 2M window amplifies these gains by removing the need for embeddings or repeated tool calls in complex workflows.
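Whether a workload can skip a retrieval pipeline depends on whether it fits in the window at all. The sketch below is a rough pre-flight check, not a real tokenizer: it assumes about four characters per token, a common rule of thumb for English text that may be well off for code or other languages. The function names and the `reserved_for_output` default are hypothetical.

```python
from typing import Iterable

CONTEXT_WINDOW = 2_000_000  # the 2M-token window described in the article

# Assumption: roughly 4 characters per token. This is a heuristic, not the
# model's actual tokenizer, so treat the result as an estimate only.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate a token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(documents: Iterable[str], reserved_for_output: int = 8_000) -> bool:
    """Return True if all documents plus an output reserve fit in the window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_for_output <= CONTEXT_WINDOW


if __name__ == "__main__":
    files = ["def main():\n    pass\n" * 1_000, "README text " * 5_000]
    print(fits_in_context(files))
```

For a real decision, the count should come from the provider's own tokenizer or token-counting endpoint, since heuristic estimates can miss by a wide margin.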

The developer community is both intrigued and divided. Enthusiasts praise the speed, noting that fast responses help them stay focused. Grok Code Fast 1, in particular, has topped OpenRouter's usage charts. Critics, however, question long-context fidelity, citing degraded accuracy in needle-in-a-haystack tests and neglect of tokens in the middle of the context.

Debates also persist over perceived biases, from its uncensored edginess to accusations of Musk-influenced prompt tweaks on sensitive topics.

Yet even detractors concede its edge in agentic coding and data extraction, where it often outperforms Gemini 2.5 Pro or Claude Haiku 4.5.

By offering 2M-token reasoning at budget rates, Grok 4 Fast challenges the compute-heavy orthodoxy of its competitors and lets solo developers tackle enterprise-scale problems.