The artificial intelligence narrative is increasingly shifting in Google's favor. Lo Toney, Founding Managing Partner at Plexo Capital, argued on CNBC's 'The Exchange' that the shift is rooted in Google's distinct advantage: a vertically integrated AI stack. Speaking with interviewer Don, Toney underscored the strategic depth of Google's approach, positioning the tech giant not merely as a participant but as a potentially dominant player in the AI era.

Toney, a CNBC contributor, discussed with Don whether Alphabet's ascendancy in AI is justified, particularly in light of its "full stack" strategy. His argument centered on vertical integration, a playbook he noted Apple famously mastered to create an unassailable moat around its business. Google, he contended, has replicated that approach in AI by controlling every layer from hardware to applications.

This comprehensive control begins at the foundational infrastructure layer. Google has invested over a decade in developing its custom Tensor Processing Units (TPUs). These aren't generic chips; they are "optimized around Google's workloads," including Search, YouTube, Ads, and the recently unveiled Gemini models. This bespoke silicon grants Google an efficiency and performance edge that general-purpose GPUs often cannot match for its specific applications.

The strategic importance of these custom TPUs extends beyond inference, meaning the running of trained models inside AI applications. Crucially, Toney highlighted that Google's TPUs are now also "competitive on the training side as well," a domain historically dominated by Nvidia's GPUs. This dual capability, spanning both the development and the deployment of AI, creates a powerful, compounding loop.

Google's proprietary data, amassed from its vast ecosystem of products, further amplifies this advantage. Combined with its training capabilities, this data feeds a virtuous cycle: product usage generates data, the data refines the models, and better models improve the products. This synergy between custom hardware, extensive data, and advanced models allows Google to iterate on its AI offerings at an accelerated pace.

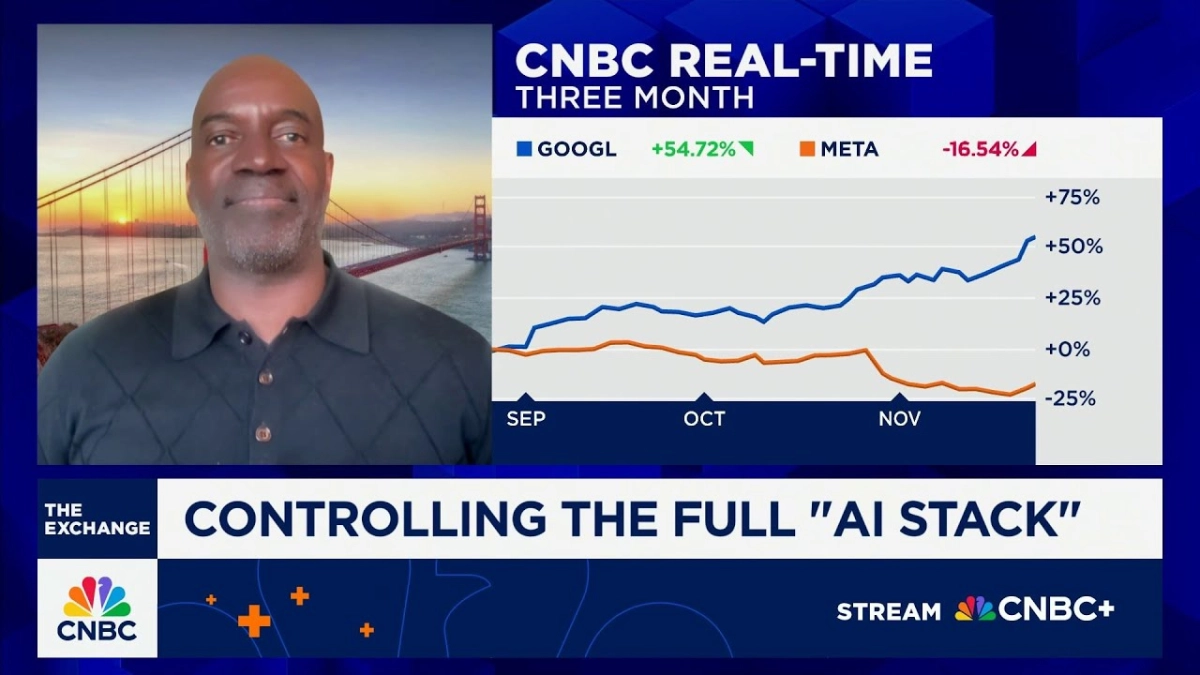

Capital efficiency emerges as another critical differentiator for Google in this high-stakes AI race. Toney explicitly stated, "Alphabet is the most capital efficient of all of Big Tech right now." This financial strength enables Google to undertake massive, long-term investments in vertical integration, something many competitors cannot do without taking on significant leverage. Meta is a case in point: it "cannot afford to be able to compete similar to Google and building out vertical infrastructure because they don't have the offering of products." This contrast in financial flexibility and product breadth underlines Google's formidable position.

The interviewer raised a pertinent historical counterpoint: a closed system, while offering tight integration, has sometimes led to the downfall of companies by limiting adaptability and external collaboration. Toney acknowledged this, but framed Google's approach not as a closed system but as the start of a "hybrid era." In this hybrid environment, some tasks remain best suited to traditional GPUs, while others can be better optimized and run more efficiently on Google's custom TPUs. This flexibility lets enterprise customers choose the hardware that best fits each of their AI workloads.

Ultimately, Google's vertically integrated AI stack, which spans custom silicon, frontier models, and expansive global distribution, combined with its capital efficiency, positions it strongly for leadership in the evolving AI landscape. The ability to control and optimize every layer of the stack provides a distinct competitive advantage, fostering innovation and efficiency across its vast product ecosystem.