The UK's Competition and Markets Authority (CMA) has thrown a spotlight on a critical issue shaping the future of the internet: how Google uses publisher content to fuel its burgeoning generative AI services. In a move that could redefine the digital landscape, the CMA has opened consultations on proposed conduct requirements for Google, aiming to bring much-needed transparency and choice to publishers grappling with the tech giant's appetite for data. The heart of the matter? Google crawler separation.

The AI Advantage and the Publisher Predicament

At its core, the CMA's intervention stems from a fundamental imbalance. Google holds a dominant 90 percent share of the UK search market, which gives it significant leverage over publishers. Publishers, whose ad-supported business models depend on search traffic, have little choice but to allow Googlebot, the company's primary web crawler, to access their content. The problem is that the same content Googlebot crawls for search indexing is also used to train and power Google's generative AI features, such as AI Overviews and AI Mode. Because one crawler serves both purposes, Google can compete with publishers using their own content, often without direct attribution or compensation, while sending little traffic back to the original sources.

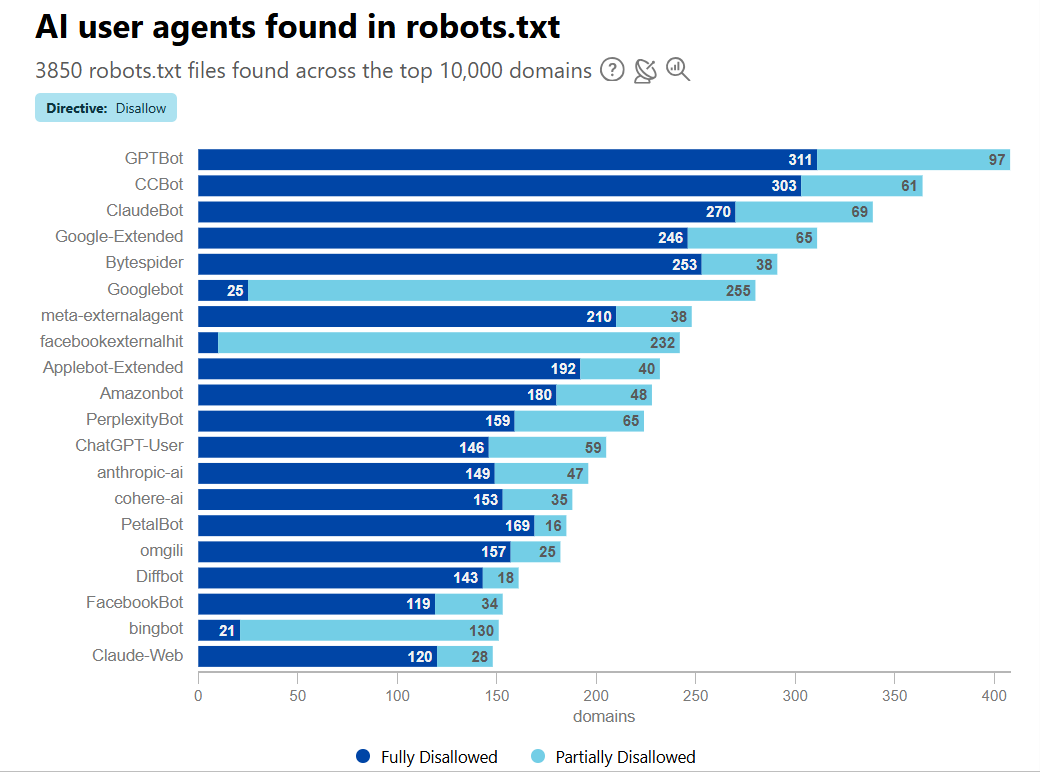

This creates a significant competitive advantage for Google. While other AI developers must negotiate for content or gather it with their own crawlers, Google can reuse its existing search infrastructure to amass vast datasets for its AI models. Cloudflare data starkly illustrates this disparity, showing Googlebot accessing far more unique pages than its closest AI-focused competitors. This asymmetry not only undermines publishers' ability to monetize their work but also discourages other AI companies from entering the market, since they cannot compete on a level playing field.

The CMA's proposed conduct requirements aim to address this by mandating that Google grant publishers "meaningful and effective" control over how their content is used in AI features, including greater transparency about data usage and stricter attribution requirements. However, critics argue these proposed remedies fall short. Relying on proprietary, Google-managed opt-out mechanisms rather than a fundamental structural change such as Google crawler separation risks perpetuating publisher dependency and leaves publishers with too little autonomy.

The most robust solution, according to proponents of Google crawler separation, is to require Google to split its crawling functions. Publishers could then permit crawling for traditional search indexing, which drives their traffic, while blocking access for generative AI training and inference. This approach, which mirrors how Google already runs numerous other specialized crawlers, would give content owners granular control and level the playing field for all AI developers, fostering genuine competition rather than the exploitation of market dominance. The UK has a unique opportunity to lead by ensuring fair access to, and compensation for, digital content.
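To make the mechanism concrete, here is a minimal sketch of how per-crawler rules in a site's robots.txt could express "yes to search, no to AI" if Google operated a separate AI crawler. `Googlebot` is Google's real search crawler token; `Google-AI-Crawler` is a hypothetical token invented purely for illustration, not an existing Google crawler. The check uses Python's standard-library `urllib.robotparser`, not Google's own robots.txt matcher, and the URL is a placeholder.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt under a separated-crawler regime.
# "Googlebot" is Google's real search crawler token.
# "Google-AI-Crawler" is a HYPOTHETICAL token for a separate AI crawler.
# The AI group is listed first because urllib.robotparser uses the first
# group whose token is a substring of the crawler name.
ROBOTS_TXT = """\
User-agent: Google-AI-Crawler
Disallow: /

User-agent: Googlebot
Allow: /
"""


def crawl_permissions(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return whether each user agent may fetch `url` under `robots_txt`."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines and marks the rules as loaded.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    perms = crawl_permissions(
        ROBOTS_TXT,
        "https://example.com/news/story.html",
        ["Googlebot", "Google-AI-Crawler"],
    )
    for agent, allowed in perms.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

With a single dual-purpose Googlebot, the only lever a publisher has is the `Googlebot` group itself, so a `Disallow` there would block search indexing and AI use together. Note that Google's own matcher selects the most specific matching user-agent group rather than the first substring match, so real-world behavior can differ from this sketch in edge cases.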