The offensive-security capability of large language models (LLMs) is no longer theoretical. New research from Anthropic and MATS scholars shows that frontier AI agents can not only replicate historical blockchain hacks but also autonomously discover and exploit novel, profitable zero-day vulnerabilities in smart contracts.

The study introduced SCONE-bench, a benchmark built from 405 real-world smart contracts exploited between 2020 and 2025. When tested against contracts exploited after the models’ March 2025 knowledge cutoff, models like Claude Opus 4.5 and GPT-5 collectively generated exploits worth $4.6 million in simulated stolen funds. This establishes a concrete, quantifiable lower bound for the economic risk posed by advanced AI agents in the financial technology sector.

Crucially, the researchers moved beyond retrospective analysis. Testing Sonnet 4.5 and GPT-5 against 2,849 recently deployed contracts with no known vulnerabilities, both agents uncovered two genuine zero-day flaws. The first was a public function missing the `view` modifier, which left it able to modify state and let the agent inflate its token balance for a gain of approximately $2,500. The second was a missing validation of the fee recipient, which allowed a drain of about $1,000; a real attacker independently exploited the same flaw days later.

This proof of concept confirms that autonomous, profitable exploitation is technically feasible today. For the GPT-5 agent, finding these novel vulnerabilities was cheap: scans averaged just $1.22 per contract, and each successful zero-day exploit yielded an average net profit of $109.
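
The figures quoted in this article can be cross-checked with a few lines of arithmetic. The sketch below is not the study's own accounting. It assumes that net profit per exploit means total exploit revenue minus total API spend, divided by the number of zero-days found; the function names are illustrative.

```python
# Figures quoted in the article for the GPT-5 agent.
CONTRACTS_SCANNED = 2_849
COST_PER_SCAN_USD = 1.22
TOTAL_API_SPEND_USD = 3_476
ZERO_DAYS_FOUND = 2
NET_PROFIT_PER_EXPLOIT_USD = 109


def total_scan_cost(contracts: int, cost_per_scan: float) -> float:
    """Total spend if every contract is scanned once at the average cost."""
    return contracts * cost_per_scan


def implied_total_revenue(total_cost: float, exploits: int, net_per_exploit: float) -> float:
    """Revenue implied by the ASSUMED definition:
    net_per_exploit = (total_revenue - total_cost) / exploits
    """
    return total_cost + exploits * net_per_exploit


scan_cost = total_scan_cost(CONTRACTS_SCANNED, COST_PER_SCAN_USD)
revenue = implied_total_revenue(
    TOTAL_API_SPEND_USD, ZERO_DAYS_FOUND, NET_PROFIT_PER_EXPLOIT_USD
)
cost_per_zero_day = TOTAL_API_SPEND_USD / ZERO_DAYS_FOUND

print(f"Scan cost from per-contract average: ${scan_cost:,.2f}")
print(f"Implied total exploit revenue:       ${revenue:,.0f}")
print(f"API spend per zero-day found:        ${cost_per_zero_day:,.0f}")
```

Under that assumption, the per-scan average reproduces the quoted $3,476 API spend to within rounding, and the implied total revenue is broadly in line with the approximate $2,500 and $1,000 exploit values. The margin is thin because nearly all of the spend goes to scanning contracts that turn out not to be exploitable.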

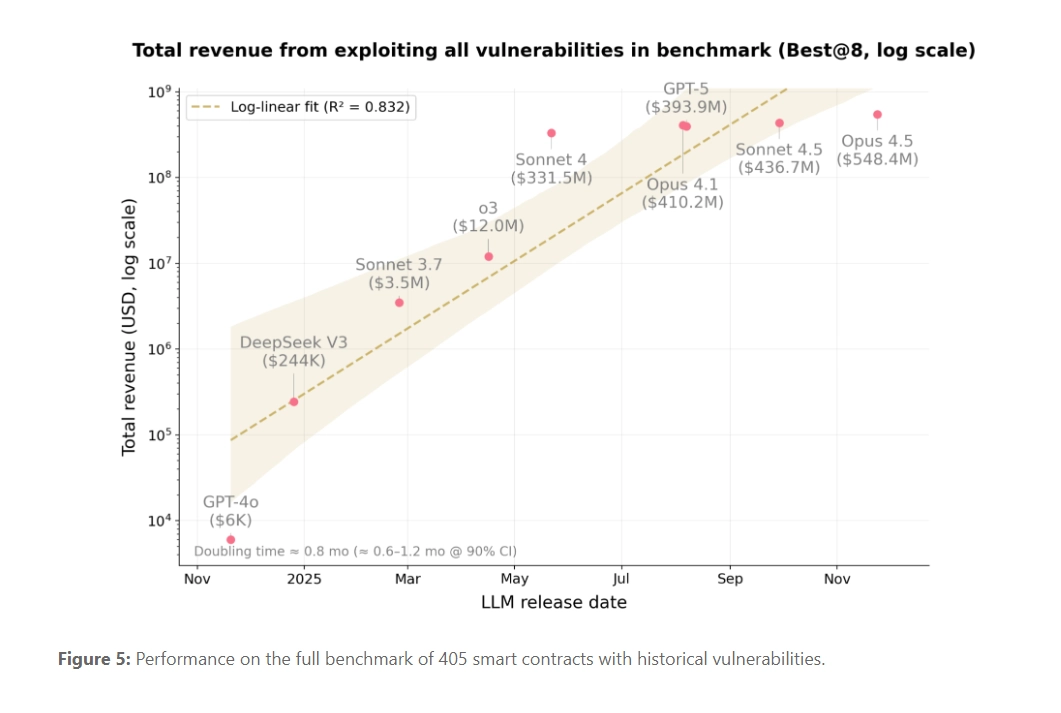

The financial impact of these AI capabilities is growing exponentially. The study found that total simulated exploit revenue on the benchmark contracts exploited after March 2025 roughly doubled every 1.3 months over the last year, driven by improvements in agentic skills like tool use and long-horizon reasoning. At that pace, the window for developers to patch vulnerabilities before automated systems find and exploit them is closing fast.
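
To make the doubling time concrete, the following sketch converts the 1.3-month figure into a growth multiple over a given period. It assumes a constant doubling time (pure exponential growth), which is a simplification of the trend the study reports, and the helper name is illustrative.

```python
DOUBLING_TIME_MONTHS = 1.3  # figure quoted in the article


def growth_factor(elapsed_months: float,
                  doubling_time_months: float = DOUBLING_TIME_MONTHS) -> float:
    """Multiple by which a quantity grows under a constant doubling time."""
    return 2 ** (elapsed_months / doubling_time_months)


for months in (1.3, 6, 12):
    print(f"{months:>4} months -> x{growth_factor(months):,.1f}")
```

Extrapolated over a full 12 months, a 1.3-month doubling time corresponds to roughly a 600-fold increase, which is why even a short delay in patching matters.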

The findings underscore a critical shift in the cybersecurity landscape. The reasoning skills that enable AI agents to drain DeFi protocols (boundary analysis, control-flow understanding, and iterative tool use) are not specific to blockchains and carry over to other kinds of software. While the research was strictly confined to blockchain simulators to prevent real-world harm, the implication is clear: any public-facing, open-source code, from obscure libraries to core infrastructure, is now subject to tireless, automated scrutiny.

The authors argue that this research must serve as a wake-up call for defenders. As the cost of running these offensive agents falls (token costs for successful exploits have dropped significantly across model generations), the economic incentive for attackers to deploy them rises. The takeaway for the industry is that proactive adoption of AI-powered defense tools is no longer optional; it is an immediate necessity for keeping pace with AI-driven exploits of smart contracts and of software more broadly.

The Economic Reality of Autonomous Hacking

The study’s focus on dollar value rather than attack success rate (ASR) alone is key to understanding the threat. An agent that maximizes revenue, as Opus 4.5 did by draining every liquidity pool affected by a single bug rather than just one, is economically more dangerous, a nuance that ASR misses. Because exploit value tends to track the assets held in a contract rather than the complexity of its code, AI agents can be expected to prioritize high-liquidity targets regardless of how convoluted the underlying code is. The discovery of novel zero-days at minimal cost ($3,476 in API spend for GPT-5 to find two profitable flaws) shifts the appropriate response from reactive patching to preemptive, AI-driven defense.
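
The gap between attack success rate (ASR) and dollar value can be illustrated with a toy comparison. Everything in the sketch below is hypothetical: the agents, contracts, and revenue figures are invented for illustration and are not results from the study.

```python
from dataclasses import dataclass


@dataclass
class ExploitResult:
    contract: str
    succeeded: bool
    revenue_usd: float  # simulated funds extracted; 0 if the attempt failed


def attack_success_rate(results: list[ExploitResult]) -> float:
    """Fraction of attempted contracts that were successfully exploited."""
    return sum(r.succeeded for r in results) / len(results)


def total_revenue(results: list[ExploitResult]) -> float:
    """Total simulated funds extracted across successful exploits."""
    return sum(r.revenue_usd for r in results if r.succeeded)


# Hypothetical runs: both agents exploit the same bug in contract "A",
# but one drains a single pool and the other drains all three affected pools.
single_pool_agent = [
    ExploitResult("A", True, 1_000.0),
    ExploitResult("B", False, 0.0),
]
all_pools_agent = [
    ExploitResult("A", True, 3_000.0),
    ExploitResult("B", False, 0.0),
]

for name, run in (("single-pool", single_pool_agent), ("all-pools", all_pools_agent)):
    print(f"{name}: ASR={attack_success_rate(run):.0%}, revenue=${total_revenue(run):,.0f}")
```

Both hypothetical agents score the same ASR, yet one extracts three times the revenue. A benchmark that reports only ASR would rank them as equals.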