Federated learning on AWS lets financial institutions train fraud detection models collaboratively, improving accuracy against evolving fraud threats while preserving privacy. The approach runs the Flower framework on Amazon SageMaker AI. Participants train a shared model securely without exchanging sensitive raw data, which addresses both the $485.6 billion global cost of fraud in 2023 and stringent privacy regulations such as GDPR.

Traditional fraud models often rely on centralized data, which raises privacy concerns and can lead to overfitting. Federated learning allows multiple institutions to jointly train a shared model while keeping their data decentralized. Because the model learns from diverse fraud patterns across many datasets, it is less prone to overfitting. The Flower framework stands out because it is framework-agnostic: it integrates with PyTorch, TensorFlow, and scikit-learn. SageMaker excels at centralized ML, while Flower is purpose-built for decentralized training. Combining the two on AWS provides scalable, privacy-preserving workflows.
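To make the idea of jointly training a shared model concrete, here is a minimal sketch of the aggregation step. It assumes FedAvg-style weighted averaging, a common choice in federated learning, though the source does not say which aggregation strategy is used. The function name `federated_average`, the three institutions, and all of their numbers are hypothetical.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client parameter vectors, weighted by local sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need exactly one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must send vectors of the same length")
    # Each coordinate of the global model is a weighted mean over clients.
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Hypothetical parameter vectors from three institutions (illustrative only).
bank_a = [0.2, -1.0]
bank_b = [0.4, -0.5]
bank_c = [1.0, 0.0]

# bank_c holds twice as many training samples, so it counts twice as much.
global_weights = federated_average([bank_a, bank_b, bank_c], [100, 100, 200])
print(global_weights)
```

Weighting by sample count keeps a small participant from pulling the shared model as strongly as a large one. Other weighting schemes are possible.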

Advancing Federated Learning Fraud Detection

To further strengthen fraud detection, organizations utilize the Synthetic Data Vault (SDV). This Python library generates realistic synthetic datasets, simulating diverse fraud scenarios without exposing sensitive information. SDV helps federated learning models generalize better and detect subtle, evolving tactics. It also addresses data imbalance by amplifying underrepresented fraud cases, improving model accuracy and robustness. Synthetic data primarily serves as a validation dataset, ensuring privacy and consistency.

Fair evaluation is critical for federated learning models. Under a structured dataset strategy, each institution trains on its own distinct dataset. Evaluation does not rely on any single institution's dataset. Instead, the model is tested on a combined dataset that covers a comprehensive distribution of real-world fraud cases. This reduces bias and improves fairness by preventing the model from over-relying on one institution's data. Standard metrics such as precision, recall, and F1-score measure performance, with particular attention to minimizing false negatives in sectors like insurance.
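The metrics named above can be computed from a confusion count over the combined evaluation set. The following is a minimal sketch with made-up labels and predictions. The helper `classification_metrics` and the two institutions' data are hypothetical, with label 1 meaning fraud.

```python
from typing import Dict, Sequence


def classification_metrics(
    y_true: Sequence[int], y_pred: Sequence[int]
) -> Dict[str, float]:
    """Compute precision, recall, and F1 for the positive (fraud) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    # False negatives are missed fraud cases, reported separately.
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_negatives": fn,
    }


# Hypothetical held-out labels and model predictions from two institutions.
labels_a, preds_a = [1, 0, 0, 1], [1, 0, 1, 0]
labels_b, preds_b = [1, 1, 0, 0], [1, 1, 0, 0]

# Evaluate on the combined set rather than on either institution alone.
metrics = classification_metrics(labels_a + labels_b, preds_a + preds_b)
print(metrics)
```

Reporting the false-negative count alongside recall makes missed fraud cases visible directly, which matters where a missed case costs more than a false alarm.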

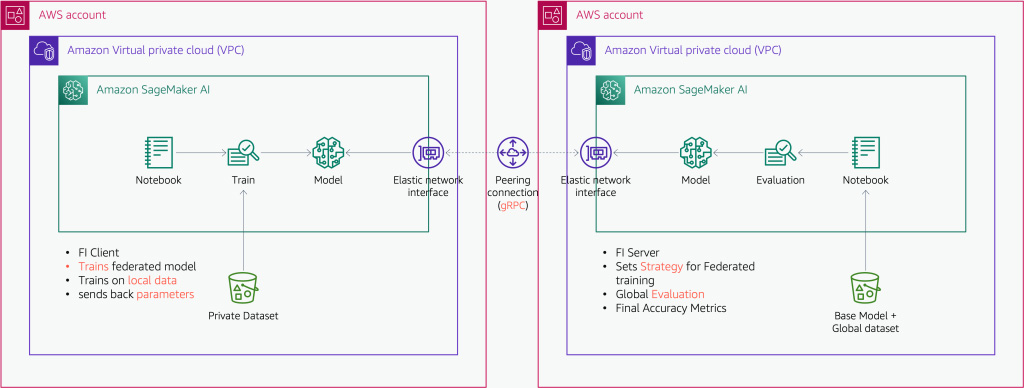

The implementation uses SageMaker AI and cross-account VPC peering. Each participant trains a local model on its own data and shares only model updates, not raw data, with a central server. SageMaker orchestrates the entire training, validation, and evaluation process securely. This integration shortens time to production, reduces engineering complexity, and supports strict data governance. The approach has demonstrated significant improvements in model performance and fraud detection accuracy. It also reduces false positives, so analysts can focus on high-risk transactions.
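The client side of this exchange can be sketched in a few lines. This is an illustration only, not the Flower or SageMaker API: it assumes a tiny logistic-regression model trained with plain stochastic gradient descent, and the names `ClientUpdate`, `local_update`, and `private_data` are hypothetical. The point is that the payload returned to the server contains parameter deltas and a sample count, never the transactions themselves.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]  # (features, label), label 1 = fraud


@dataclass
class ClientUpdate:
    """What leaves the institution: weight deltas and a sample count."""
    delta: List[float]
    num_examples: int


def predict(weights: Sequence[float], features: Sequence[float]) -> float:
    """Logistic-regression probability that a transaction is fraud."""
    z = sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(weights: Sequence[float], data: Sequence[Sample]) -> float:
    """Mean binary cross-entropy of the model on a dataset."""
    eps = 1e-12
    total = 0.0
    for features, label in data:
        p = min(max(predict(weights, features), eps), 1.0 - eps)
        total -= label * math.log(p) + (1 - label) * math.log(1.0 - p)
    return total / len(data)


def local_update(
    global_weights: Sequence[float],
    data: Sequence[Sample],
    lr: float = 0.5,
    epochs: int = 20,
) -> ClientUpdate:
    """Train locally from the global weights and return only the change."""
    weights = list(global_weights)
    for _ in range(epochs):
        for features, label in data:
            error = predict(weights, features) - label
            for i, x in enumerate(features):
                weights[i] -= lr * error * x
    delta = [w - g for w, g in zip(weights, global_weights)]
    return ClientUpdate(delta=delta, num_examples=len(data))


# Hypothetical private data: [scaled amount, bias term], label 1 = fraud.
private_data: List[Sample] = [
    ([1.0, 1.0], 1),
    ([0.9, 1.0], 1),
    ([0.1, 1.0], 0),
    ([0.2, 1.0], 0),
]
global_weights = [0.0, 0.0]

update = local_update(global_weights, private_data)
# Applying this client's delta to the global weights lowers its local loss.
patched = [g + d for g, d in zip(global_weights, update.delta)]
print(log_loss(global_weights, private_data), log_loss(patched, private_data))
```

In a multi-participant round, the server would combine the deltas from all clients before applying them. The sample count is included so that the combination can be weighted by dataset size.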

Federated learning on SageMaker is powerful, but it comes with trade-offs. Managing client heterogeneity, such as differing data schemas and compute capacities, can be complex. The approach may not suit scenarios that require real-time inference or highly synchronous updates, and it depends on stable connectivity. Organizations are exploring high-quality synthetic datasets to address these challenges and to further improve model generalization and robustness. Taken together, this methodology offers a strong template for financial security applications.