The relentless pursuit of wider, more complex neural networks just hit a significant milestone. Researchers at DeepSeek-AI have released a paper on Manifold-Constrained Hyper-Connections (mHC), a framework designed to upgrade the decade-old "residual connection" that powers nearly every modern AI model, from GPT-4 to Llama.

While a standard residual connection acts as a simple "highway" for data, mHC turns that highway into a multi-lane interchange, without the training instabilities that usually come with such complexity.

The Innovation: Stability Through Geometry

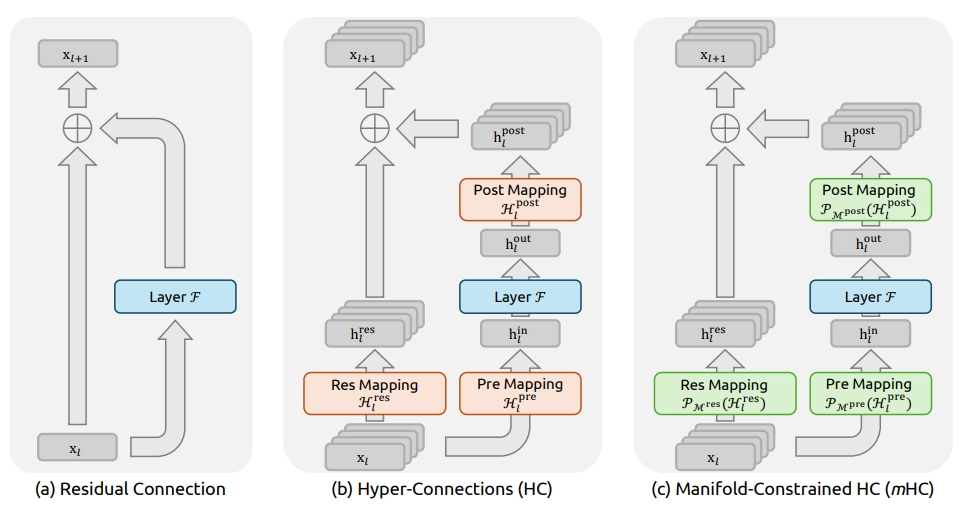

Since 2016, ResNets have relied on the identity mapping property: the idea that data should be able to pass through a layer unchanged. Recent attempts to expand this (Hyper-Connections) added "streams" of data to increase capacity but ran into a wall: the learnable gates controlling those streams would often amplify signals until they "exploded," causing the model to fail during training.
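To make the failure mode concrete, here is a minimal sketch of how a small per-layer gain compounds with depth. The 60-layer depth, the four streams, and the `uniform_mix` gate matrices are hypothetical values chosen only for illustration; they are not taken from the paper.

```python
def path_gain(mix, depth):
    """Apply the same stream-mixing matrix `depth` times to an all-ones
    signal and return the largest magnitude that comes out."""
    n = len(mix)
    x = [1.0] * n
    for _ in range(depth):
        x = [sum(mix[i][j] * x[j] for j in range(n)) for i in range(n)]
    return max(abs(v) for v in x)


def uniform_mix(n, row_sum):
    """Hypothetical gate matrix: all entries equal, each row summing to `row_sum`."""
    return [[row_sum / n] * n for _ in range(n)]


DEPTH = 60  # assumed layer count, for illustration only
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

gain_identity = path_gain(identity, DEPTH)               # plain identity path
gain_amplified = path_gain(uniform_mix(4, 1.1), DEPTH)   # gates 10% too large
gain_damped = path_gain(uniform_mix(4, 0.9), DEPTH)      # gates 10% too small

print(gain_identity, gain_amplified, gain_damped)
```

The identity path returns the signal unchanged, while gates that are only 10% too large amplify it by more than two orders of magnitude over the same depth, and gates that are 10% too small shrink it toward zero.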

DeepSeek’s solution, mHC, constrains those gates geometrically. Instead of letting the model’s "mixing gates" take any value, mHC forces them to be doubly stochastic matrices, the set of matrices known as the Birkhoff polytope.

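- The Constraint: Every entry in the mixing matrix must be non-negative, and every row and every column must sum to exactly 1.

- The Result: Mathematically, such a matrix can only take weighted averages of the streams. The signal can be shuffled and mixed between streams, but its total across streams is conserved and its overall magnitude can never grow, no matter how many layers are stacked, because a product of doubly stochastic matrices is itself doubly stochastic.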
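The constraint above can be sketched with Sinkhorn-Knopp normalization, a standard way to turn a positive matrix into a (near) doubly stochastic one by alternately rescaling rows and columns. Everything in this sketch is an assumption made for illustration: each stream is reduced to a single number, the gates are random, and the stream count and depth are arbitrary. It is not the paper's exact parameterization.

```python
import math
import random


def sinkhorn_knopp(matrix, iterations=100):
    """Alternately normalize rows and columns of a positive square matrix."""
    m = [row[:] for row in matrix]
    n = len(m)
    for _ in range(iterations):
        for i in range(n):
            row_sum = sum(m[i])
            m[i] = [v / row_sum for v in m[i]]
        for j in range(n):
            col_sum = sum(m[i][j] for i in range(n))
            for i in range(n):
                m[i][j] /= col_sum
    return m


def mix_streams(m, x):
    """Multiply the mixing matrix by the vector of stream values."""
    return [sum(m[i][j] * x[j] for j in range(len(x))) for i in range(len(m))]


def l2_norm(x):
    return math.sqrt(sum(v * v for v in x))


random.seed(0)
N_STREAMS = 4   # assumed stream count
DEPTH = 64      # assumed number of stacked layers


def random_gate():
    """Hypothetical unconstrained gate: strictly positive random entries."""
    return [[math.exp(random.uniform(-1.0, 1.0)) for _ in range(N_STREAMS)]
            for _ in range(N_STREAMS)]


streams = [3.0, -1.0, 0.5, 2.0]
start_total, start_norm = sum(streams), l2_norm(streams)

x = streams
for _ in range(DEPTH):
    x = mix_streams(sinkhorn_knopp(random_gate()), x)

print("total before/after:", start_total, sum(x))
print("norm  before/after:", start_norm, l2_norm(x))
```

After 64 different constrained mixing steps the total across streams is unchanged and the norm has not grown. The norm can shrink as the streams are averaged toward their common mean, which is why the guarantee is best read as "cannot explode" rather than "stays exactly constant".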

Engineering Feat: Breaking the "Memory Wall"

Expanding the data stream by $4\times$ (the "expansion rate" used in the paper) typically slows down training significantly because the GPU has to move much more data in and out of its memory. This is often called the Memory Wall.

DeepSeek bypassed this through a suite of high-end systems engineering:

- TileLang Kernels: They wrote custom GPU code to "fuse" operations, meaning the GPU performs multiple mathematical steps on a piece of data before sending it back to memory.

- Selective Recomputation: To save VRAM, the model doesn't store all the data for every stream. Instead, it quickly re-calculates the necessary bits on the fly during the "backward pass" of training.

- Communication Overlapping: Using their DualPipe schedule, they managed to hide the extra data-transfer time behind the model's normal calculations.
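The recomputation idea in the list above can be illustrated with a toy model. This is a generic activation-checkpointing sketch, not DeepSeek's implementation: the scalar `tanh` layers, the 16-layer depth, and the block size of 4 are all assumptions. It shows that keeping only one saved input per block, and recomputing the rest during the backward pass, yields the same gradient while holding fewer activations in memory.

```python
import math


def layer(x, w):
    return math.tanh(w * x)


def layer_grad(x, w):
    """Derivative of tanh(w * x) with respect to x."""
    y = math.tanh(w * x)
    return w * (1.0 - y * y)


def backward_store_all(x0, weights):
    """Keep every layer input from the forward pass for use in backward."""
    inputs = []
    x = x0
    for w in weights:
        inputs.append(x)
        x = layer(x, w)
    grad = 1.0
    for x_in, w in zip(reversed(inputs), reversed(weights)):
        grad *= layer_grad(x_in, w)
    return grad, len(inputs)


def backward_recompute(x0, weights, block):
    """Keep only the input of each block; recompute the rest in backward."""
    checkpoints = []
    x = x0
    for i, w in enumerate(weights):
        if i % block == 0:
            checkpoints.append(x)
        x = layer(x, w)
    grad = 1.0
    peak = len(checkpoints)
    for b in reversed(range(len(checkpoints))):
        ws = weights[b * block:(b + 1) * block]
        inputs = [checkpoints[b]]
        for w in ws[:-1]:               # recompute inputs inside this block
            inputs.append(layer(inputs[-1], w))
        # checkpoints plus the freshly recomputed values are live at once
        peak = max(peak, len(checkpoints) + len(inputs) - 1)
        for x_in, w in zip(reversed(inputs), reversed(ws)):
            grad *= layer_grad(x_in, w)
    return grad, peak


weights = [0.5 + 0.05 * i for i in range(16)]   # assumed toy weights
grad_full, stored_full = backward_store_all(0.3, weights)
grad_ckpt, stored_ckpt = backward_recompute(0.3, weights, block=4)

print(grad_full, stored_full)
print(grad_ckpt, stored_ckpt)
```

The trade is extra forward computation inside each block in exchange for a smaller peak memory footprint, which matters more when every layer carries several residual streams instead of one.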

The Bottom Line: With an expansion rate of 4, mHC makes the residual stream four times as wide (a 300% increase) while adding only about 6.7% to total training time.

Benchmarks: Reasoning Gains

The performance gains are most visible in complex reasoning tasks. In their 27B parameter tests, the mHC architecture improved on the standard baseline by roughly 4 to 7 points:

| Benchmark | Baseline (27B) | mHC (27B) | Gain |
| --- | --- | --- | --- |
| MMLU (General Knowledge) | 59.0 | 63.4 | +4.4 |
| GSM8K (Math Reasoning) | 46.7 | 53.8 | +7.1 |
| BBH (Logical Reasoning) | 43.8 | 51.0 | +7.2 |

The paper suggests that by widening the "residual stream," the model has more "working memory" to process complex thoughts, leading to better performance on hard logic and math problems.

Why It Matters

For the AI industry, this represents a shift in how we scale models. Instead of just adding more layers (making the model "taller") or more experts (making it larger but "sparser"), we can now make the internal data flow "wider" while keeping training numerically stable.

DeepSeek has signaled that mHC is a "practical extension" of Hyper-Connections. If the results hold at larger scale, it could become a standard component in the evolution of foundational models, and it may appear in future DeepSeek-V4 or V5 iterations, though that remains speculation.