DeepSeek-AI has unveiled DeepSeek-OCR, a novel vision-language model (VLM) designed to change how large language models (LLMs) process large amounts of information. Its core innovation is optical compression of textual context: rendering text as images, which could expand the effective "context window" of LLMs by up to tenfold. If that holds up, it could substantially improve the efficiency and capability of AI systems, particularly for applications that analyze long documents.

Matthew Berman recently presented a deep dive into DeepSeek-AI's latest release, DeepSeek-OCR, highlighting its potential to address a critical bottleneck in current LLM architectures. He explained that text-based LLMs face steep computational costs on long inputs because self-attention compares every token with every other token, so compute grows quadratically with sequence length. This limitation, often called the context window problem, caps how much information an LLM can take into account when generating a response.
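To make the quadratic scaling concrete, the minimal sketch below counts the query-key scores in a single full self-attention layer. It is a back-of-the-envelope model only: the function name is hypothetical, and it deliberately ignores feed-forward layers (which grow linearly) and any attention optimizations a real model might use.

```python
def attention_score_count(seq_len: int) -> int:
    """Query-key dot products in one full self-attention layer.

    Every token is scored against every token, so the count is seq_len ** 2.
    Feed-forward layers, which grow only linearly, are ignored here.
    """
    return seq_len * seq_len


# Hypothetical sequence lengths: each doubling quadruples the attention work.
for n in (1_000, 2_000, 4_000):
    print(f"{n:>5} tokens -> {attention_score_count(n):>12,} scores")
```

Doubling the input from 1,000 to 2,000 tokens quadruples the score count, which is why long documents become expensive so quickly.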

DeepSeek-OCR tackles this by transforming textual information into a visual modality, representing text as an image. This seemingly counterintuitive approach leverages the inherent efficiency of visual data representation. Berman emphasized that "a single image containing document text can represent rich information using substantially fewer tokens than the equivalent digital text, suggesting that optical compression through vision tokens could achieve much higher compression ratios." This means that instead of feeding an LLM millions of text tokens, the same information can be conveyed through a significantly smaller number of visual tokens, leading to substantial computational savings and an expanded effective context.
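The arithmetic behind this argument can be sketched in a few lines. The example below assumes a hypothetical page of 1,000 text tokens that renders into 100 vision tokens (a 10x ratio) and a hypothetical 8,000-token budget. The function names are illustrative, not part of any DeepSeek API, and the capacity figure ignores the accuracy loss that comes with compression.

```python
def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """How many text tokens each vision token stands in for."""
    return text_tokens / vision_tokens


def attention_savings(text_tokens: int, vision_tokens: int) -> float:
    """Factor by which quadratic self-attention work shrinks after compression."""
    return (text_tokens / vision_tokens) ** 2


def effective_text_capacity(token_budget: int, ratio: float) -> float:
    """Text tokens that fit in a fixed token budget if every slot is a vision token.

    Assumes lossless decoding, which overstates the real benefit.
    """
    return token_budget * ratio


# Hypothetical page: 1,000 text tokens rendered into 100 vision tokens.
r = compression_ratio(1_000, 100)
print(r)                                   # 10.0
print(attention_savings(1_000, 100))       # 100.0
print(effective_text_capacity(8_000, r))   # 80000.0
```

Under these assumptions, a 10x token reduction cuts attention work by roughly 100x and lets a fixed budget cover about ten times as much text.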

DeepSeek-OCR's architecture has two components: an encoder called DeepEncoder and a DeepSeek-3B-MoE (Mixture-of-Experts) decoder. The process begins with an image of text, such as a rendered PDF page, which is split into 16x16-pixel patches. A Segment Anything Model (SAM) with 80 million parameters processes these patches to capture local detail, such as the shapes of letters and other fine-grained visual features. This local information is then down-sampled, shrinking the number of tokens while preserving critical textual characteristics. Next, a 300-million-parameter CLIP model applies global attention, piecing together the broader context and layout of the document. Finally, the DeepSeek-3B decoder, a 3-billion-parameter MoE model with 570 million active parameters, translates the compressed visual representation back into coherent text. Because the decoder works only on the compressed vision tokens, the language-model stage handles far fewer tokens than the original text would require.
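The token bookkeeping of the patch-then-compress pipeline can be illustrated with NumPy. This is a sketch under stated assumptions, not DeepSeek's code: it assumes a 1024x1024 RGB page, skips the SAM and CLIP stages entirely, and stands in for the learned down-sampling step with simple 4x4 average pooling (a 16x token reduction) chosen here purely for illustration. `patchify` and `compress_grid` are hypothetical names.

```python
import numpy as np

PATCH = 16  # patch side in pixels, as described in the article


def patchify(image: np.ndarray, patch: int = PATCH) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch x patch tiles.

    Returns shape (H // patch, W // patch, patch * patch * C): one flattened
    vector per patch, kept on its 2-D grid so neighbours stay adjacent.
    """
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image sides must be multiples of the patch size")
    gh, gw = h // patch, w // patch
    tiles = image.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return tiles.reshape(gh, gw, patch * patch * c)


def compress_grid(grid: np.ndarray, factor: int = 4) -> np.ndarray:
    """Hypothetical stand-in for the learned down-sampler.

    Averages each factor x factor block of neighbouring patch vectors into a
    single token, cutting the token count by factor ** 2 (16x for factor=4).
    """
    gh, gw, d = grid.shape
    if gh % factor or gw % factor:
        raise ValueError("grid sides must be multiples of the pooling factor")
    pooled = grid.reshape(gh // factor, factor, gw // factor, factor, d).mean(axis=(1, 3))
    return pooled.reshape(-1, d)


page = np.zeros((1024, 1024, 3), dtype=np.float32)  # assumed 1024x1024 RGB page render
grid = patchify(page)
tokens_before = grid.shape[0] * grid.shape[1]
tokens_after = compress_grid(grid).shape[0]
print(tokens_before, tokens_after)  # 4096 256
```

In this sketch a 1024x1024 page yields 4,096 patch tokens before compression and 256 after. The real model learns its down-sampling rather than averaging, but the shrinking token count is the point.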

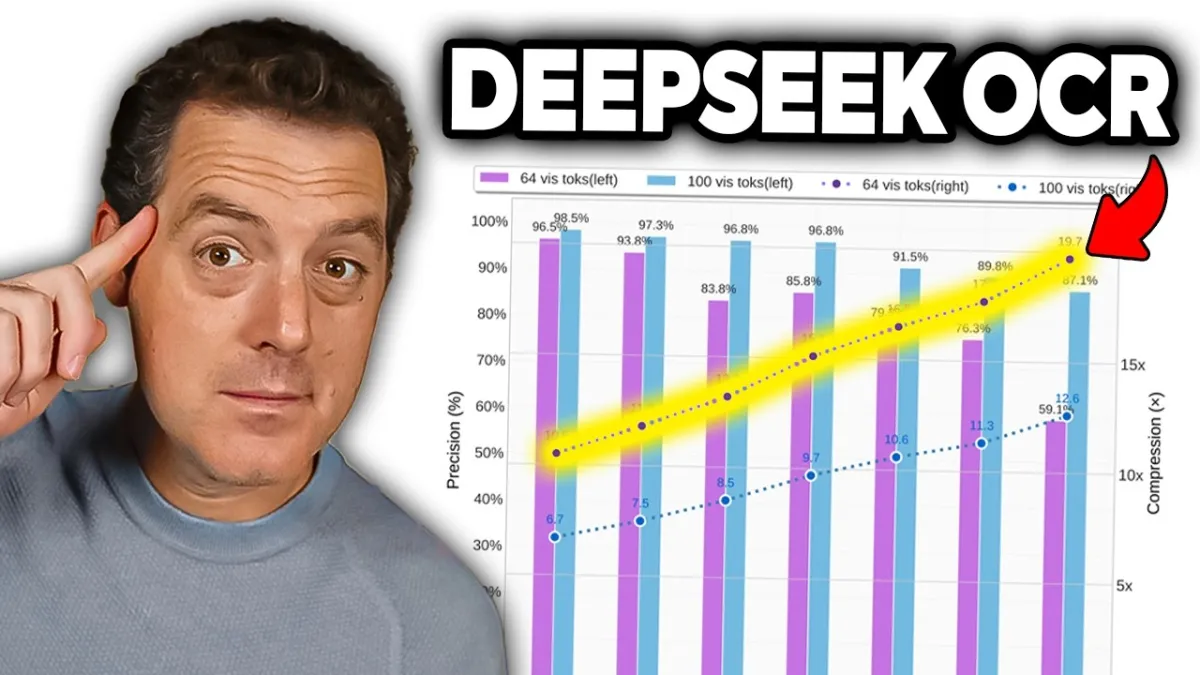

The model's reported performance is strong. According to the research paper, DeepSeek-OCR achieves "96%+ OCR decoding precision at 9-10x text compression," and "90% at 10-12x compression, and ~80% at 20x compression." Accuracy falls as the compression ratio rises, but the text remains largely recoverable even under substantial data reduction. Retaining over 96% precision at roughly 10x compression, with a gradual rather than abrupt decline beyond that, points to a robust design.
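One practical way to read these figures is as a trade-off table: choose the highest compression that still meets a precision target. The sketch below encodes only the three quoted data points, pairing each range's upper end with its reported precision. It performs no interpolation and does not model the true accuracy curve. `REPORTED_POINTS` and `max_ratio_for` are hypothetical names.

```python
from typing import Optional

# Upper end of each compression range quoted above, paired with the reported
# precision ("96%+" at 9-10x, "90%" at 10-12x, "~80%" at 20x).
REPORTED_POINTS = [(10, 0.96), (12, 0.90), (20, 0.80)]


def max_ratio_for(target_precision: float) -> Optional[int]:
    """Largest quoted compression ratio whose reported precision meets the target.

    Returns None when no quoted point is precise enough.
    """
    ok = [ratio for ratio, precision in REPORTED_POINTS if precision >= target_precision]
    return max(ok) if ok else None


print(max_ratio_for(0.95))  # 10
print(max_ratio_for(0.90))  # 12
print(max_ratio_for(0.99))  # None
```

An application that tolerates roughly 80% precision could, on these numbers, push toward 20x. Anything needing near-perfect transcription should stay at about 10x or below.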

This approach points to a broader paradigm shift. Andrej Karpathy, a prominent figure in AI, mused that "maybe it makes more sense that all inputs to LLMs should only ever be images." He highlighted the benefits: "more information compression... shorter context windows, more efficiency... significantly more general information stream => not just text, but e.g. bold text, colored text, arbitrary images." This vision extends beyond text compression, suggesting a future where LLMs process a richer, multimodal input stream without tokenizers, which Karpathy described as an "ugly, separate, not end-to-end stage." He also noted that image inputs could be processed with bidirectional attention by default, which he considers more powerful than purely autoregressive attention.
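The difference between autoregressive and bidirectional attention comes down to the attention mask. The minimal NumPy sketch below is generic transformer masking, not DeepSeek-OCR's or Karpathy's code, and its function names are hypothetical. With equal raw scores, the first position under a causal mask can see only itself, while under a bidirectional mask it spreads its attention across the whole input.

```python
import numpy as np


def causal_mask(n: int) -> np.ndarray:
    """Autoregressive mask: position i may attend only to positions 0..i."""
    return np.tril(np.ones((n, n), dtype=bool))


def bidirectional_mask(n: int) -> np.ndarray:
    """Full mask: every position may attend to every other position."""
    return np.ones((n, n), dtype=bool)


def masked_softmax(scores: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Row-wise softmax that assigns zero weight to masked-out positions."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)


n = 4
scores = np.zeros((n, n))  # equal raw scores, so the mask alone shapes the weights
print(masked_softmax(scores, causal_mask(n))[0])         # first position sees only itself
print(masked_softmax(scores, bidirectional_mask(n))[0])  # first position sees all four
```

A causal mask permits n(n+1)/2 query-key pairs, while a bidirectional one permits all n². That extra visibility is what lets every input position draw on context from the whole page.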

Another enthusiast, Brian Roemmele, captured the essence of DeepSeek-OCR's potential, exclaiming, "An entire encyclopedia compressed into a single, high-resolution image!" This hyperbolic yet illustrative statement underscores the scale of efficiency gains. The ability to condense vast textual corpora into a visually encoded format not only addresses current LLM context limitations but also opens doors for new forms of information processing and retrieval.

To achieve this, DeepSeek-AI trained DeepSeek-OCR on a massive dataset of 30 million diverse PDF pages. This corpus included approximately 25 million pages in English and Chinese, with an additional 5 million pages across about 100 other languages. The data was meticulously annotated with both coarse and fine ground truths, ensuring the model's ability to handle complex document layouts and diverse linguistic content. This extensive training is crucial for the model's high precision and compression capabilities.

DeepSeek-OCR represents a significant leap in overcoming the context window limitations that have long plagued LLMs. By leveraging optical compression, it offers a pathway to more efficient, powerful, and multimodal AI systems, potentially reshaping how we interact with and extract insights from vast digital information.