The fragmented nature of early AI interactions, often requiring users to re-establish context with each new session, has been a silent productivity drain. Anthropic’s latest update to Claude directly addresses this by introducing a persistent memory feature, fundamentally altering how users can engage with the AI for complex, multi-stage projects. This development moves large language models closer to being true digital collaborators rather than mere query engines.

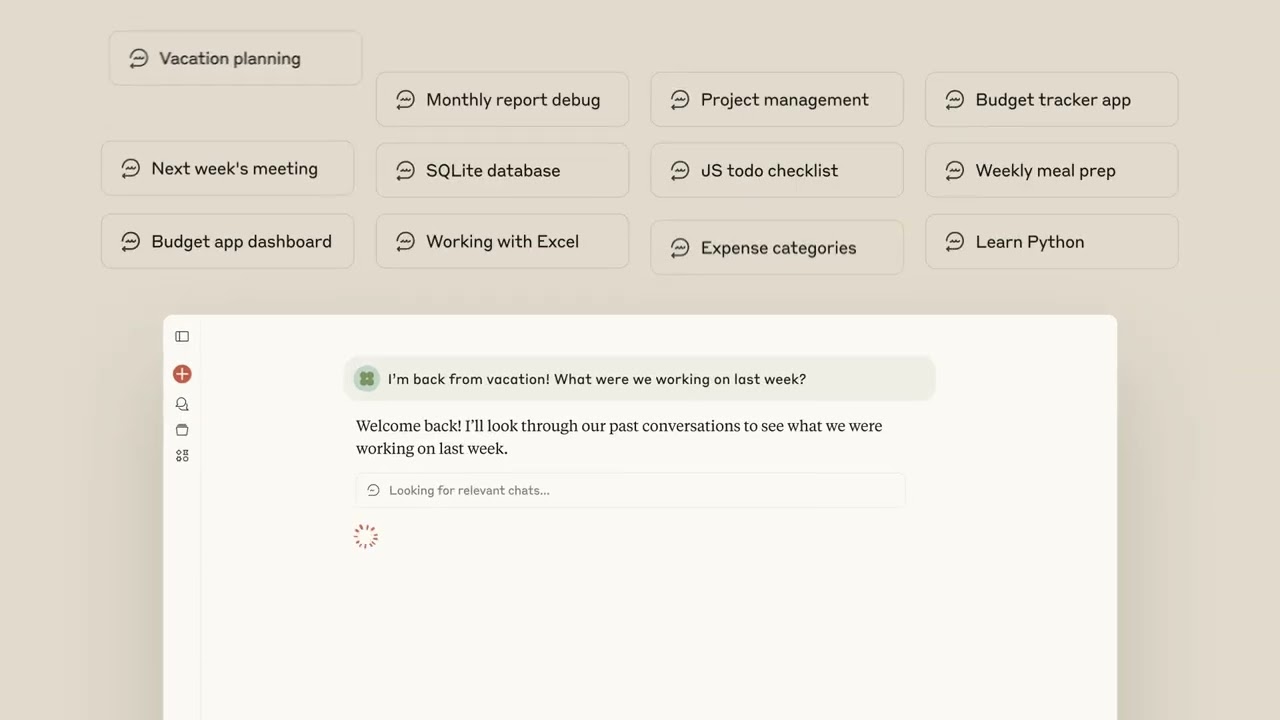

This concise product announcement showcases Claude’s new ability to recall previous conversations, allowing users to pick up exactly where they left off. The demonstration illustrates a seamless transition from a user’s vacation back into an ongoing development project, highlighting the practical application of this memory function.

The core benefit here is a substantial reduction in cognitive load. Users no longer need to reconstruct the history of a project for their AI assistant, because the assistant keeps the thread itself. When the user in the demo asks, "I'm back from vacation! What were we working on last week?", Claude retrieves the relevant past interactions and presents a clear summary of the work in progress. This continuity matters most for intricate, multi-session tasks such as software development or strategic planning.

Claude's response exemplifies this advanced recall: "You were working on building a Python budget tracker app! Before vacation, you'd set up the SQLite database, implemented expense categories, and started the monthly reporting feature. We were debugging an issue where monthly totals weren't calculating correctly across categories - it was a problem with the date range filtering in your SQL queries. Would you like to continue debugging that issue, or move on to polishing the dashboard UI?" This granular recall, including specific technical details and outstanding issues, transforms the AI from a tool into a genuine project partner, capable of maintaining context across days or weeks.
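The announcement does not say how Claude's memory is implemented, so the sketch below is only a hypothetical toy illustration of the general idea of cross-session recall: store a short summary at the end of each session, then load the most recent summaries when a new session starts. The schema and the function names (`open_store`, `save_note`, `recall`, `build_context`) are invented for this example and are not Anthropic's API or design.

```python
import sqlite3
from datetime import datetime, timezone


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a hypothetical store of per-session summaries."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS session_notes (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               project TEXT NOT NULL,
               created_at TEXT NOT NULL,
               summary TEXT NOT NULL
           )"""
    )
    return conn


def save_note(conn: sqlite3.Connection, project: str, summary: str) -> None:
    """Persist a summary at the end of a session, timestamped in UTC."""
    conn.execute(
        "INSERT INTO session_notes (project, created_at, summary) VALUES (?, ?, ?)",
        (project, datetime.now(timezone.utc).isoformat(), summary),
    )
    conn.commit()


def recall(conn: sqlite3.Connection, project: str, limit: int = 3) -> list[str]:
    """Fetch the most recent summaries for a project, returned oldest first."""
    rows = conn.execute(
        # Ordering by the autoincrement id keeps insertion order even if
        # two notes share the same timestamp.
        "SELECT summary FROM session_notes WHERE project = ? ORDER BY id DESC LIMIT ?",
        (project, limit),
    ).fetchall()
    return [row[0] for row in reversed(rows)]


def build_context(conn: sqlite3.Connection, project: str) -> str:
    """Format recalled summaries as a preamble for a new session."""
    notes = recall(conn, project)
    if not notes:
        return "No prior sessions recorded."
    return "Previously on this project:\n" + "\n".join(f"- {note}" for note in notes)


if __name__ == "__main__":
    conn = open_store()
    save_note(conn, "budget-tracker", "Set up the SQLite database and expense categories.")
    save_note(conn, "budget-tracker", "Monthly totals wrong across categories; suspect date range filter.")
    print(build_context(conn, "budget-tracker"))
```

A production assistant would need far more than this (deciding what is worth remembering, summarizing long sessions, respecting user privacy controls), but the basic loop of "write a summary, read it back next time" is what lets a new session open with context instead of a blank slate.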

This feature marks a significant step toward more natural and efficient human-AI collaboration. By removing the need to restart each conversation from scratch, it makes the AI a more reliable and integrated part of daily workflows. For founders, VCs, and AI professionals, it signals a maturing LLM space in which the focus is shifting from raw computational power to sophisticated, user-centric functionality. The ability to retain and draw on long-term memory is likely to unlock new applications and increase the overall utility of AI systems, particularly for tasks that require sustained effort and iterative development.