Clarifai, an AI platform company with roots stretching back to 2013, is making a bold new claim: its software can make standard GPUs run large language models faster than specialized, non-GPU hardware. The company today launched the Clarifai Reasoning Engine, an inference optimization layer designed specifically for the demanding, token-hungry workloads of AI agents.

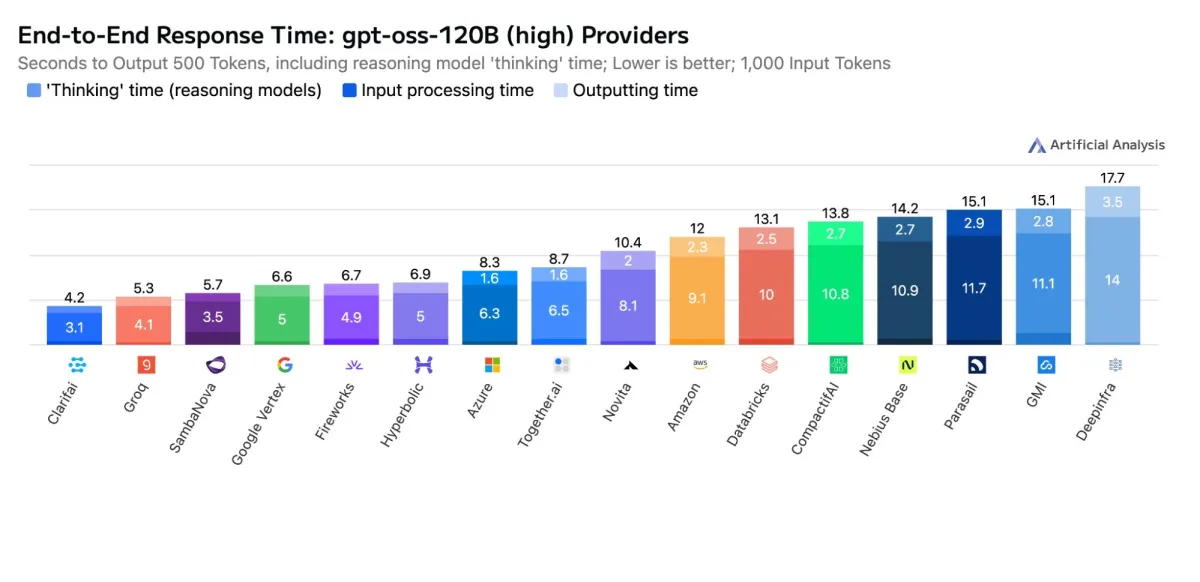

The announcement comes on the heels of new benchmarks from the independent firm Artificial Analysis. According to Clarifai, when running OpenAI’s 120-billion-parameter `gpt-oss` model, its new engine hit speeds of over 500 tokens per second with a time-to-first-token of just 0.3 seconds. These figures, Clarifai states, not only set new records for GPU-based inference but also surpassed the performance of some dedicated ASIC accelerators from other providers.
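To put the reported figures in perspective, here is a back-of-the-envelope sketch. It is not Clarifai's or Artificial Analysis's methodology. It simply assumes that, after the first token arrives (0.3 seconds), output streams at a constant 500 tokens per second, and it ignores network overhead, queuing, and the effect of prompt length on time-to-first-token. The function names are hypothetical, for illustration only.

```python
# Rough latency estimate based on the figures Clarifai reports for gpt-oss-120b.
# Assumption: constant output speed after the first token; network overhead,
# queuing, and prompt-length effects are ignored.

REPORTED_TTFT_S = 0.3          # seconds until the first token arrives
REPORTED_TOKENS_PER_S = 500.0  # reported output speed, tokens per second


def response_time_s(output_tokens: int,
                    ttft_s: float = REPORTED_TTFT_S,
                    tokens_per_s: float = REPORTED_TOKENS_PER_S) -> float:
    """Approximate wall-clock seconds for one response: wait for the first token, then stream the rest."""
    if output_tokens < 0 or tokens_per_s <= 0:
        raise ValueError("need output_tokens >= 0 and tokens_per_s > 0")
    return ttft_s + output_tokens / tokens_per_s


def agent_chain_time_s(tokens_per_call: list[int], **kwargs) -> float:
    """Approximate seconds for sequential agent calls: each call waits on the previous one, so the times add up."""
    return sum(response_time_s(n, **kwargs) for n in tokens_per_call)


if __name__ == "__main__":
    print(f"{response_time_s(1000):.1f} s")          # one 1,000-token answer
    print(f"{agent_chain_time_s([400] * 5):.1f} s")  # five sequential 400-token steps
```

Under these assumptions, a single 1,000-token answer takes about 2.3 seconds, and an agent that chains five 400-token steps waits roughly 5.5 seconds. Because the per-call time-to-first-token is paid again on every step, it matters more as agent workflows grow longer.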

A Shot Across the Bow for Specialized Hardware

For years, the narrative in high-performance AI has been a race toward custom silicon. Companies have poured billions into developing application-specific integrated circuits (ASICs) to squeeze every last drop of performance out of AI workloads. Clarifai's results, if they hold up to wider scrutiny, suggest that the software stack is just as critical, and that there is still plenty of untapped potential in the GPUs that already dominate data centers.

This development is a direct challenge to the idea that enterprises need to invest in exotic hardware to scale intelligent applications. By wringing more performance out of commodity GPUs, the Clarifai Reasoning Engine could offer a path to high performance without vendor lock-in, giving developers more flexibility and potentially lowering the astronomical costs of running large-scale AI.

“Agentic AI and reasoning workloads burn through tokens rapidly,” said Clarifai CEO Matthew Zeiler in a statement. “They require high throughput, low latency and low prices to drive viable customer use-cases.” He argues that this level of software optimization makes inference more affordable and can help custom model builders achieve performance on standard GPUs that was previously the exclusive domain of specialized hardware.

The engine is tuned specifically for agentic workflows, in which an AI model may make numerous sequential calls to reason through a problem. Clarifai says its platform offers "adaptive performance": it learns from the repetitive tasks common in these workflows and gets faster over time without sacrificing accuracy.

The company is offering to work with customers to apply these software optimizations to their own custom models, promising a significant boost to both performance and operational economics.