Apple has quietly unveiled a machine learning initiative, FastVLM, aimed at improving the efficiency of vision language models (VLMs). In a new post on its Machine Learning Research blog, the company detailed its work on "Efficient Vision Encoding for Vision Language Models," signaling a strategic focus on optimizing how AI processes visual information. The move underscores Apple's ongoing commitment to advancing on-device AI capabilities, particularly in areas where visual understanding is paramount.

Vision language models represent a critical frontier in artificial intelligence, enabling systems to understand and generate content based on both visual inputs (like images or video) and textual prompts. These models are the backbone of features ranging from advanced image search and content moderation to sophisticated AI assistants that can describe what they "see." However, their power comes at a significant computational cost. Processing high-resolution visual data and integrating it with linguistic understanding are resource-intensive tasks that often require powerful cloud-based servers.

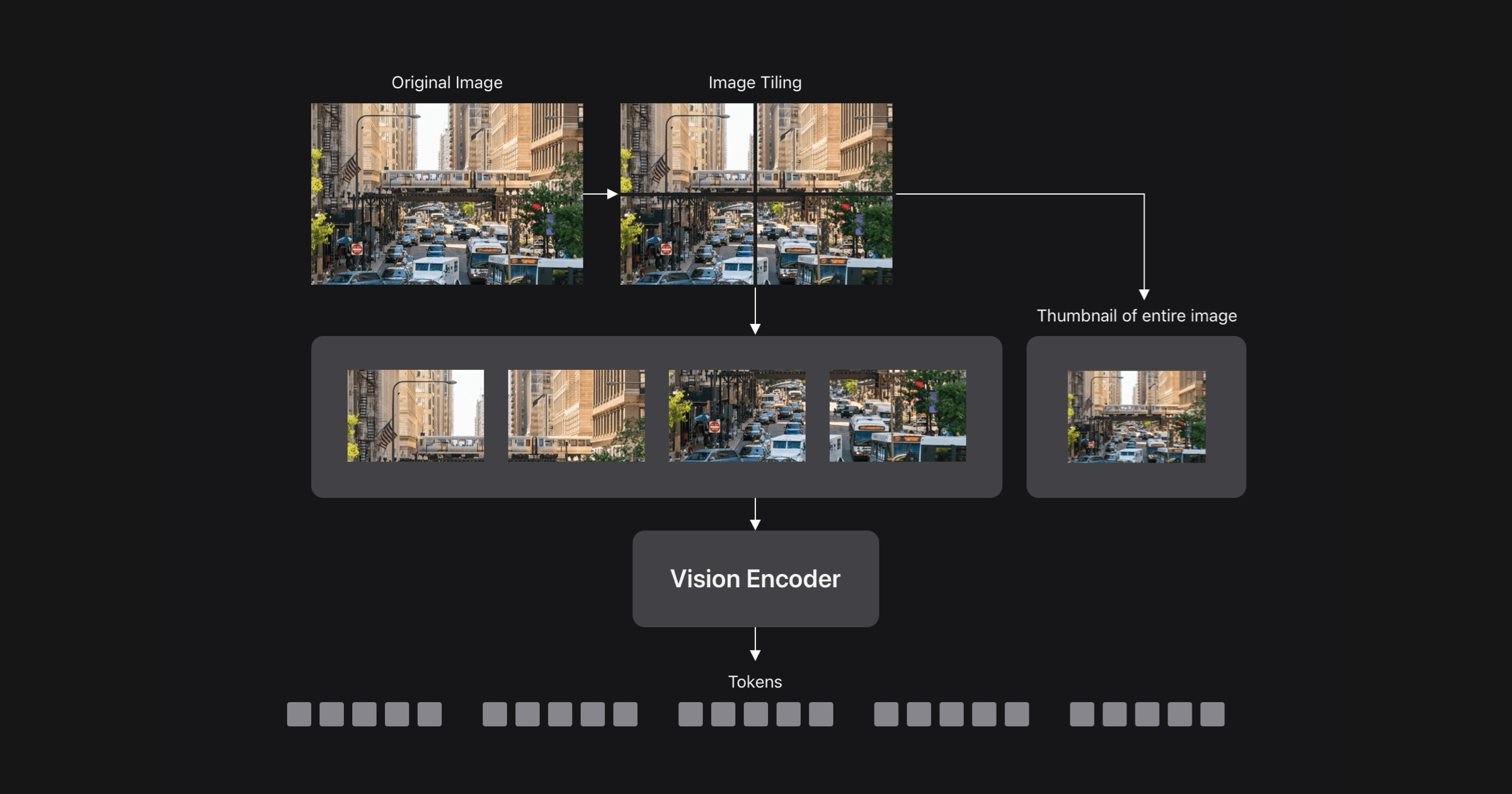

The core challenge FastVLM appears to address lies in "vision encoding." This is the initial, computationally heavy step where raw pixel data is transformed into a compact, meaningful representation that the language model can then interpret. Inefficient encoding can lead to slow inference times, high power consumption, and a reliance on remote data centers, limiting the potential for real-time, on-device AI applications. Apple's focus on efficiency here suggests a push to overcome these bottlenecks.
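To make the cost of vision encoding concrete, here is a minimal sketch of how the number of visual tokens grows with image resolution. It assumes a generic patch-based (ViT-style) encoder, which is a common design but is not confirmed here as FastVLM's architecture. The function names, the patch size of 14, and the two resolutions are illustrative assumptions, not figures from Apple's post.

```python
def visual_token_count(height: int, width: int, patch_size: int = 14) -> int:
    """Count patch tokens for a hypothetical ViT-style encoder.

    Assumes the image is split into non-overlapping square patches,
    each of which becomes one token passed on to the language model.
    """
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    return (height // patch_size) * (width // patch_size)


def relative_attention_cost(tokens: int, baseline_tokens: int) -> float:
    """Rough relative cost of self-attention, which scales with tokens squared."""
    return (tokens / baseline_tokens) ** 2


# Illustrative resolutions (assumptions, not taken from the source).
low_res_tokens = visual_token_count(224, 224)
high_res_tokens = visual_token_count(448, 448)

print(f"224x224 image: {low_res_tokens} tokens")
print(f"448x448 image: {high_res_tokens} tokens")
print(
    "Relative self-attention cost: "
    f"{relative_attention_cost(high_res_tokens, low_res_tokens):.0f}x"
)
```

Under these assumptions, doubling the resolution on each side quadruples the token count, and the quadratic term in self-attention grows faster still. This is one reason an encoder that emits fewer tokens, or produces them more cheaply, matters for on-device inference.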

The Push for On-Device Intelligence

For Apple, the implications of more efficient VLMs are profound. The company has consistently emphasized the importance of on-device processing for privacy, speed, and reliability. Technologies like FastVLM could enable more sophisticated AI features to run directly on iPhones, iPads, Macs, and even the Vision Pro, without needing to send sensitive visual data to the cloud. Imagine an AI assistant that can instantly understand and respond to complex visual queries about your surroundings, or a photo app that can perform highly nuanced searches based on visual content, all processed locally.

This research aligns with a broader industry trend towards making large AI models more compact and performant for edge devices. While many companies are still grappling with the sheer scale of foundation models, Apple's announcement highlights a strategic investment in optimizing a specific, crucial component of the VLM pipeline. By making vision encoding more efficient, FastVLM could unlock a new generation of responsive, privacy-preserving AI experiences that are deeply integrated into Apple's hardware ecosystem. It is a clear signal that the company is not just adopting AI, but actively reworking the underlying model architecture to fit its on-device product philosophy.