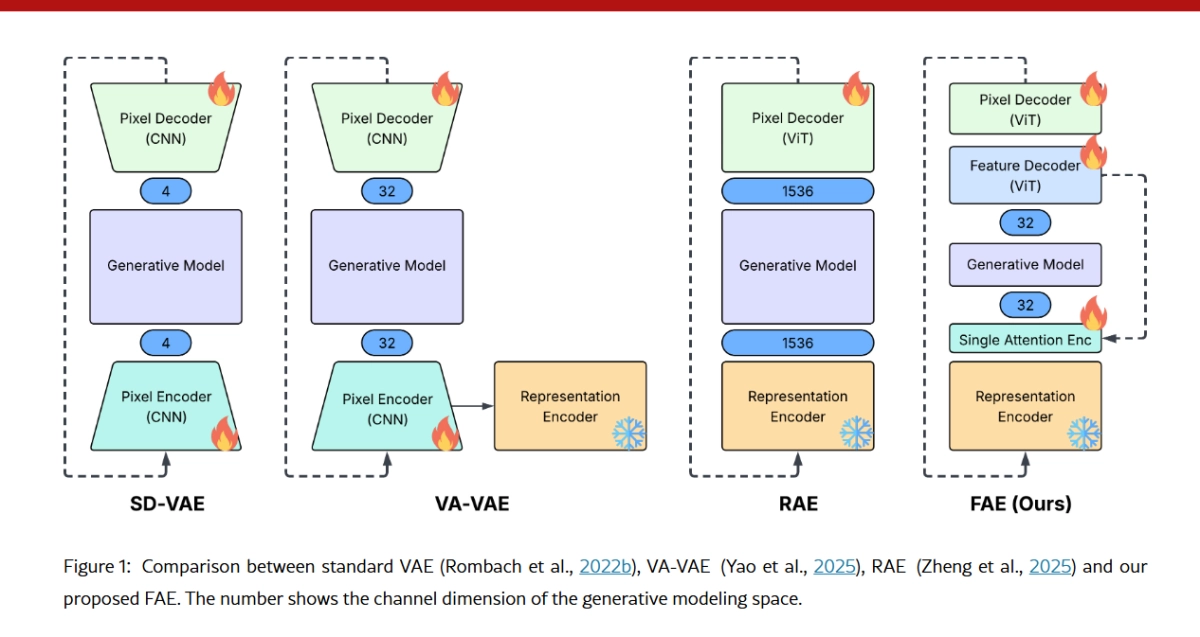

Generative AI is getting a major speed and efficiency boost, thanks to a surprisingly simple new framework from Apple researchers. The paper, "One Layer Is Enough: Adapting Pretrained Visual Encoders for Image Generation," introduces the Feature Auto-Encoder (FAE), a novel approach that dramatically slashes the complexity required to integrate massive, pre-trained visual encoders (like DINOv2 or SigLIP) into cutting-edge generative models (like Diffusion Models and Normalizing Flows).

The key takeaway? Apple's FAE can take the high-dimensional, semantically rich "language" of a large, pre-trained vision model and compress it into a tiny, generation-friendly latent space using little more than a single attention layer.

The Big Problem: Feature Mismatch

Visual generative models, which include popular frameworks like Stable Diffusion, rely on a compressed "latent space" to work efficiently. But there's a fundamental conflict when integrating the latest and greatest visual encoders:

- Understanding Models (Encoders): Models like DINOv2 use high-dimensional features (sometimes over 1,500 channels) to capture the diverse possibilities of a masked image region and perform well on complex "understanding" tasks.

- Generative Models (Decoders): Models like Diffusion Transformers (DiT) prefer low-dimensional latent spaces (often 32 to 64 channels). A smaller latent makes the iterative denoising process more stable, faster to converge, and less resource-intensive.
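To put rough numbers on that mismatch, here is a small back-of-the-envelope sketch. The 1,536-channel width and the 16 x 16 token grid are illustrative assumptions (the article only says "sometimes over 1,500 channels"), and the helper names are made up for this example.

```python
# Illustrative arithmetic only. ENCODER_CHANNELS and GRID are assumed values;
# LATENT_CHANNELS is the low end of the 32-to-64 range quoted above.
ENCODER_CHANNELS = 1536
LATENT_CHANNELS = 32
GRID = 16  # assumed 16 x 16 grid of patch tokens


def tensor_size(grid: int, channels: int) -> int:
    """Number of scalars in a grid x grid x channels feature map."""
    return grid * grid * channels


def channel_ratio(encoder_channels: int, latent_channels: int) -> float:
    """How many times narrower each latent token is than an encoder token."""
    return encoder_channels / latent_channels


encoder_scalars = tensor_size(GRID, ENCODER_CHANNELS)
latent_scalars = tensor_size(GRID, LATENT_CHANNELS)
ratio = channel_ratio(ENCODER_CHANNELS, LATENT_CHANNELS)

print(encoder_scalars)  # 393216
print(latent_scalars)   # 8192
print(ratio)            # 48.0
```

Under these assumptions, the generative model would work with roughly 48 times fewer numbers per image than the encoder produces.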

Prior attempts to bridge this gap required complex alignment losses, auxiliary architectures, or major overhauls to the generative model itself.

FAE: A Minimalist Solution with Maximum Impact

FAE's genius lies in its minimalism and its two-stage decoding process:

1. The Single-Attention Encoder

Instead of a deep network, the FAE encoder uses just one self-attention layer followed by a linear projection. This layer's job is to compress the massive, high-dimensional features from a pre-trained encoder (which is kept frozen) into a compact latent $\mathbf{z}$ (e.g., a $16 \times 16 \times 32$ grid: 256 tokens with 32 channels each).

The researchers found that keeping the encoder minimal is critical. Overly complex encoders tend to "overfit" the simple task of feature reconstruction, discarding the valuable, subtle semantic information learned by the original, pre-trained model. The single attention layer efficiently removes redundant global information across patch embeddings while preserving core semantics.
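As a rough illustration of what "one self-attention layer followed by a linear projection" could look like, here is a minimal NumPy sketch. It is not the paper's implementation: the single head, the residual connection, the random weights, and the toy feature width of 192 (standing in for a real encoder's ~1,500 channels) are all assumptions, and `SingleAttentionEncoder` is a hypothetical name.

```python
import numpy as np


def softmax(scores, axis=-1):
    """Numerically stable softmax along one axis."""
    shifted = scores - scores.max(axis=axis, keepdims=True)
    weights = np.exp(shifted)
    return weights / weights.sum(axis=axis, keepdims=True)


class SingleAttentionEncoder:
    """One single-head self-attention layer, then a linear projection.

    Weights are random here; in practice they would be learned.
    """

    def __init__(self, feat_dim, latent_dim, seed=0):
        rng = np.random.default_rng(seed)
        std = feat_dim ** -0.5
        self.w_q = rng.normal(0.0, std, (feat_dim, feat_dim))
        self.w_k = rng.normal(0.0, std, (feat_dim, feat_dim))
        self.w_v = rng.normal(0.0, std, (feat_dim, feat_dim))
        self.w_proj = rng.normal(0.0, std, (feat_dim, latent_dim))

    def __call__(self, feats):
        # feats: (num_tokens, feat_dim) patch features from the frozen encoder
        q, k, v = feats @ self.w_q, feats @ self.w_k, feats @ self.w_v
        attn = softmax(q @ k.T / np.sqrt(feats.shape[-1]))  # (tokens, tokens)
        mixed = feats + attn @ v  # residual connection (an assumption)
        return mixed @ self.w_proj  # (num_tokens, latent_dim)


GRID, FEAT_DIM, LATENT_DIM = 16, 192, 32  # toy feature width for speed
frozen_feats = np.random.default_rng(1).normal(size=(GRID * GRID, FEAT_DIM))

encoder = SingleAttentionEncoder(FEAT_DIM, LATENT_DIM)
z = encoder(frozen_feats).reshape(GRID, GRID, LATENT_DIM)
print(z.shape)  # (16, 16, 32)
```

The point of the sketch is the parameter budget: the only trainable pieces are the attention weights and one projection matrix, which leaves little capacity to "overfit" the reconstruction task.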

2. The Double-Decoder Design

This is the central innovation. FAE separates the tasks of feature preservation and image synthesis:

- Feature Decoder: A lightweight Transformer takes the compact latent $\mathbf{z}$ and is trained to reconstruct the original, high-dimensional features ($\mathbf{\hat{x}}$) of the large encoder. This ensures the low-dimensional latent space retains the rich semantics of the frozen, pre-trained model.

- Pixel Decoder: A second decoder then takes these reconstructed features ($\mathbf{\hat{x}}$) as input to generate the final RGB image.

This decoupling allows the model to keep the generative process simple and stable (operating on the low-dim $\mathbf{z}$), while still leveraging the full semantic power of the massive vision model through the reconstructed features ($\mathbf{\hat{x}}$).
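The data flow through the two decoders can be sketched with placeholder linear maps. This only traces tensor shapes: the real decoders are Transformer-based, and the toy feature width, the 16-pixel patch size, the mean-squared-error objective, and all function names below are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
GRID, LATENT_DIM, FEAT_DIM, PATCH = 16, 32, 192, 16  # toy sizes (assumed)

# Random linear stand-ins for the two learned decoders.
w_feat = rng.normal(0.0, LATENT_DIM ** -0.5, (LATENT_DIM, FEAT_DIM))
w_pix = rng.normal(0.0, FEAT_DIM ** -0.5, (FEAT_DIM, PATCH * PATCH * 3))


def feature_decoder(z):
    """Latent tokens (N, LATENT_DIM) -> reconstructed features (N, FEAT_DIM)."""
    return z @ w_feat


def pixel_decoder(x_hat):
    """Reconstructed features (N, FEAT_DIM) -> RGB image (H, W, 3)."""
    patches = (x_hat @ w_pix).reshape(GRID, GRID, PATCH, PATCH, 3)
    # Reorder (grid_y, grid_x, py, px, c) -> (grid_y, py, grid_x, px, c)
    # so that each token's patch lands at its grid position.
    return patches.transpose(0, 2, 1, 3, 4).reshape(GRID * PATCH, GRID * PATCH, 3)


def feature_reconstruction_loss(x_hat, x):
    """Mean squared error against the frozen encoder's features (assumed loss)."""
    return float(np.mean((x_hat - x) ** 2))


x = rng.normal(size=(GRID * GRID, FEAT_DIM))    # stand-in for frozen features
z = rng.normal(size=(GRID * GRID, LATENT_DIM))  # stand-in for the compact latent

x_hat = feature_decoder(z)   # stage 1: latent -> features
image = pixel_decoder(x_hat)  # stage 2: features -> pixels
loss = feature_reconstruction_loss(x_hat, x)

print(x_hat.shape, image.shape)  # (256, 192) (256, 256, 3)
```

At generation time, a diffusion model or normalizing flow would supply `z`, and the two decoders would run in sequence exactly as in the last three lines.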

Performance: Faster Training, Near State-of-the-Art Quality

The FAE framework is generic, working with different visual encoders (DINOv2, SigLIP) and different generative model families (Diffusion Models and Normalizing Flows). Its performance is compelling:

- State-of-the-Art (SOTA) Convergence: On the ImageNet 256x256 benchmark, a diffusion model using FAE achieved an impressive FID score of 2.08 in just 80 training epochs. This is a dramatic acceleration compared to prior methods.

- Top Generation Quality: With more training (800 epochs), FAE reaches a near-SOTA FID of 1.29 with classifier-free guidance (CFG), and a SOTA FID of 1.48 in the separate no-CFG setting (lower FID is better; the two settings are ranked independently).

- Text-to-Image: Even when trained on a relatively small dataset like CC12M, FAE-based text-to-image models achieved competitive FID scores (6.90 on MS-COCO with CFG), rivaling models trained on vastly larger, web-scale datasets.

- Preserved Semantics: The compact latent space retains the understanding capabilities of the original large encoders. For example, the paper shows that the FAE-compressed latents can still accurately match semantically similar parts (like an animal's head) across different images, which suggests that fine-grained information is preserved.
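The part-matching test in the last bullet can be approximated with a nearest-neighbor search over patch latents. The sketch below uses cosine similarity, which is an assumption (the article does not say which metric the paper uses), and it runs on synthetic data: image B's latents are a shuffled, slightly noisy copy of image A's. `match_patches` is a hypothetical helper.

```python
import numpy as np


def match_patches(latents_a, latents_b, eps=1e-8):
    """For each patch in A, find the most cosine-similar patch in B.

    latents_a, latents_b: arrays of shape (num_patches, channels).
    Returns (best_index_in_b, best_similarity), each of shape (num_patches,).
    """
    a = latents_a / (np.linalg.norm(latents_a, axis=1, keepdims=True) + eps)
    b = latents_b / (np.linalg.norm(latents_b, axis=1, keepdims=True) + eps)
    sim = a @ b.T  # (patches_a, patches_b) cosine similarities
    return sim.argmax(axis=1), sim.max(axis=1)


rng = np.random.default_rng(0)
latents_a = rng.normal(size=(256, 32))  # synthetic 16 x 16 x 32 latent, flattened

# Synthetic "second image": the same patches in a different order, plus noise.
perm = rng.permutation(256)
latents_b = latents_a[perm] + 0.01 * rng.normal(size=(256, 32))

matches, scores = match_patches(latents_a, latents_b)
recovered = np.array_equal(matches, np.argsort(perm))
print(recovered)
```

With real latents, patch `i` of one image would be paired with patch `matches[i]` of the other, and a visualization would draw lines between the matched locations.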

The team also successfully applied FAE to Normalizing Flows (STARFlow), a different family of generative models. This shows the approach is not tied to diffusion models, and it yielded faster convergence and better quality than the baseline.

The Verdict

Apple's Feature Auto-Encoder (FAE) presents a pragmatic and effective solution to a long-standing problem in visual AI. By replacing complex alignment techniques with a single attention layer and a double-decoder design, the researchers have created a modular, efficient "interface" that plugs a powerful pre-trained vision encoder into a latent generative model, demonstrated here with DINOv2 and SigLIP encoders and with diffusion and normalizing-flow generators. This work points toward generative AI that is not only higher quality but also significantly cheaper and faster to train.