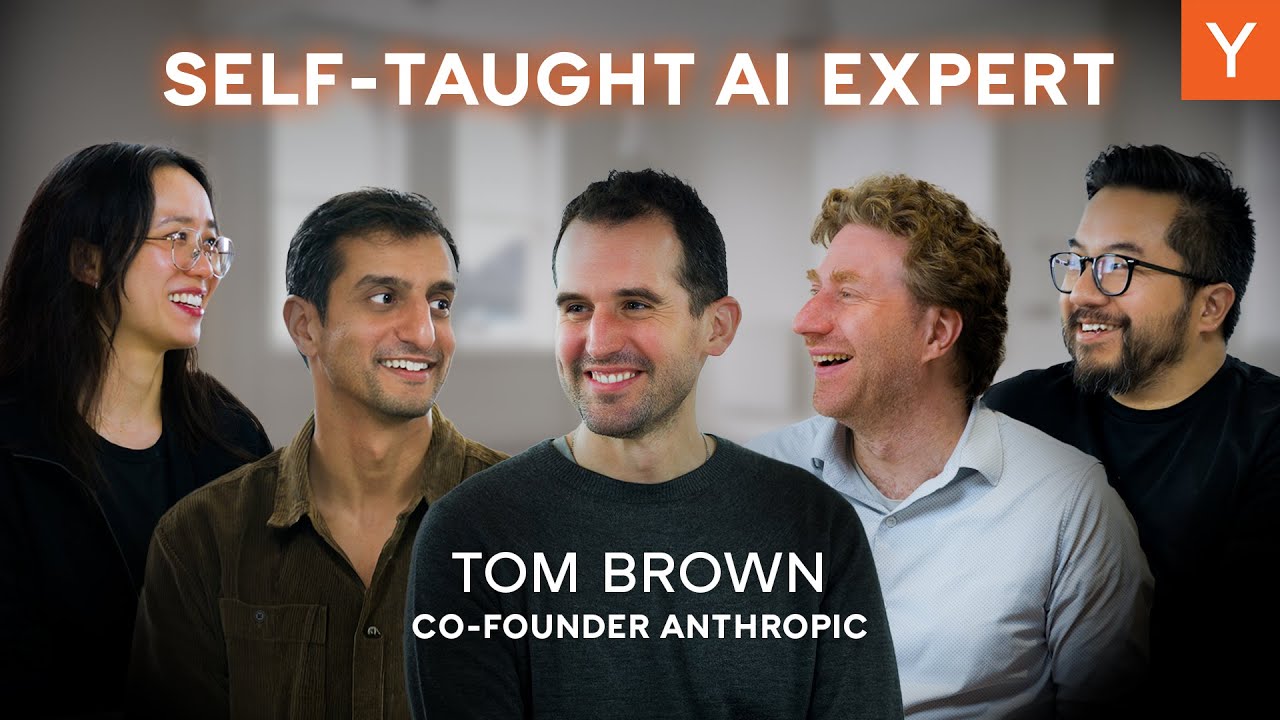

"Humanity is on track for like the largest infrastructure buildout of all time," observed Tom Brown, co-founder of Anthropic, during a recent episode of Y Combinator's Lightcone podcast. Speaking with Garry Tan, Harj Taggar, Diana Hu, and Jared Friedman, Brown gave a candid account of his unconventional path from self-taught engineer to central figure in AI. He also stressed the enormous scale at which AI is now being built.

Brown's journey began not in big tech but in the lean, high-stakes world of YC startups such as Linked Language and Grouper. That experience, he argues, instilled a "wolf" mindset. At a startup you must "hunt for food" or face oblivion, which is a stark contrast to the structured, task-assigned roles at large corporations. He believes this entrepreneurial grit mattered more than conventional software engineering skills once he entered AI research, a young and unpredictable field.

His transition into AI was catalyzed by the rise of deep learning and, above all, by the discovery of scaling laws. Brown played a key role in building GPT-3 at OpenAI, and he pointed to the "very straight line" showing that more compute reliably produced more intelligent models. To him, this was a strong signal that the "stupid thing that works" was delivering unprecedented results. That approach was simply throwing more compute at models. The insight became a founding principle of Anthropic, which he co-founded with other OpenAI alumni.

Anthropic's Claude 3.5 Sonnet illustrates this philosophy, particularly through its strong coding performance. Brown admitted the team did not fully anticipate how well it would do. Enthusiastic adoption, especially among YC founders, nonetheless provided clear validation. He suggests this "X-factor," which benchmarks alone did not capture, reflects genuine real-world usefulness. Anthropic's focus has shifted toward empowering the model itself. The company now treats Claude as a user that needs the right tools and context to do its job well, rather than as a black box to be optimized.

The compute this requires is staggering. Brown said spending on AI infrastructure is on track to exceed the combined cost of the Apollo program and the Manhattan Project, possibly as early as next year. Growth at this pace creates real constraints, chiefly in securing power and data center capacity. This is especially true in the US, where Anthropic aims to prioritize its buildout. The company's multi-chip strategy spans GPUs, TPUs, and Trainium chips. That mix gives it flexibility and lets it match hardware to different tasks, at the cost of managing several distinct hardware ecosystems.

Anthropic's internal culture emphasizes open communication and a strong sense of mission. Brown noted that nearly all early hires joined for the mission. This helped create a transparent environment where "everything is on Slack, 100% of things on Slack, and within that, all public channels." He believes this transparency is key to keeping the organization free of internal politics, even as it grows rapidly to thousands of employees. Meanwhile, the steady and frequently surprising improvements in models like Claude show how fast the field is moving. Progress has regularly outpaced even ambitious predictions.