The Allen Institute for AI (AI2) has released Molmo2 VLM, a powerful new family of open-weight vision-language models that directly challenge proprietary systems like Gemini 3 Pro by mastering precise video grounding and tracking.

The strongest vision-language models (VLMs) have long been locked behind corporate walls. While Google, OpenAI, and Anthropic compete with closed weights and proprietary data, the open-source community has struggled to build competitive foundations, often relying on synthetic data distilled from those very closed systems.

That dynamic just changed. AI2's Molmo2 VLM is a new family of fully open models that not only match the state of the art among open VLMs but also demonstrate exceptional video-grounding capabilities that, on specific benchmarks, surpass even proprietary giants.

Molmo2 VLM is a direct shot across the bow of models like Gemini, focusing on a critical missing piece of multimodal AI: precise spatio-temporal grounding.

Grounding is a model's ability not just to understand a video conceptually, but to pinpoint exactly *where* and *when* an object or event occurs, in pixels and in time. For applications ranging from robotics and industrial automation to advanced video search and accessibility, this capability is non-negotiable.

AI2’s research paper notes that while image grounding is becoming standard, video grounding remains limited even in proprietary systems. Molmo2 VLM bridges this gap, allowing users to ask complex queries like "How many times does the robot grasp the red block?" and receive not just a number, but precise point coordinates for each event across the video timeline.

The benchmark results bear this out. On video grounding tasks, Molmo2 VLM significantly outperforms existing open-weight models such as Qwen3-VL. More notably, the best-in-class 8B Molmo2 model surpasses Google’s proprietary Gemini 3 Pro on key grounding benchmarks: it scores 38.4 F1 on video pointing versus Gemini’s 20.0, and 56.2 J&F on video tracking versus Gemini’s 41.1. (J&F, a standard video-segmentation metric, averages region similarity J and contour accuracy F.)
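The article does not say exactly how the pointing F1 is computed. As a rough illustration only, the sketch below scores predicted `(frame, x, y)` points against ground truth by greedily matching each prediction to the nearest unmatched ground-truth point on the same frame within a pixel radius. The function name `pointing_f1`, the matching rule, and the `radius` threshold are all assumptions, not Molmo2's evaluation protocol; a reported score like 38.4 would presumably be this quantity on a 0 to 100 scale.

```python
import math
from typing import List, Tuple

# A point is (frame_index, x, y) in pixel coordinates.
Point = Tuple[int, float, float]


def pointing_f1(pred: List[Point], gold: List[Point], radius: float = 10.0) -> float:
    """Hypothetical point-matching F1.

    A prediction is a true positive if it lies within `radius` pixels of a
    not-yet-matched gold point on the same frame (greedy, in prediction order).
    """
    if not pred and not gold:
        return 1.0  # nothing to find and nothing predicted
    matched = [False] * len(gold)
    true_pos = 0
    for frame, x, y in pred:
        best, best_dist = None, radius
        for i, (g_frame, gx, gy) in enumerate(gold):
            if matched[i] or g_frame != frame:
                continue
            dist = math.hypot(x - gx, y - gy)
            if dist <= best_dist:
                best, best_dist = i, dist
        if best is not None:
            matched[best] = True
            true_pos += 1
    precision = true_pos / len(pred) if pred else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


gold = [(0, 100.0, 100.0), (5, 200.0, 50.0)]
pred = [(0, 103.0, 98.0), (5, 400.0, 400.0), (9, 10.0, 10.0)]
print(pointing_f1(pred, gold))  # one hit out of 3 predictions and 2 targets
```

Greedy matching keeps the sketch short; an optimal one-to-one assignment (for example, the Hungarian algorithm) can give slightly higher scores when points are crowded.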

This is not a marginal improvement; it is a demonstrable leap in a capability that defines the utility of VLMs in real-world, high-precision environments.

The Data Advantage and Open Transparency

The reason Molmo2 VLM is so significant for the open-source ecosystem lies in its foundation: the data.

Many successful open models achieve high scores by training on synthetic data generated by proprietary models, a form of distillation that creates a dependency on closed systems and raises transparency concerns. AI2 explicitly avoided this approach.

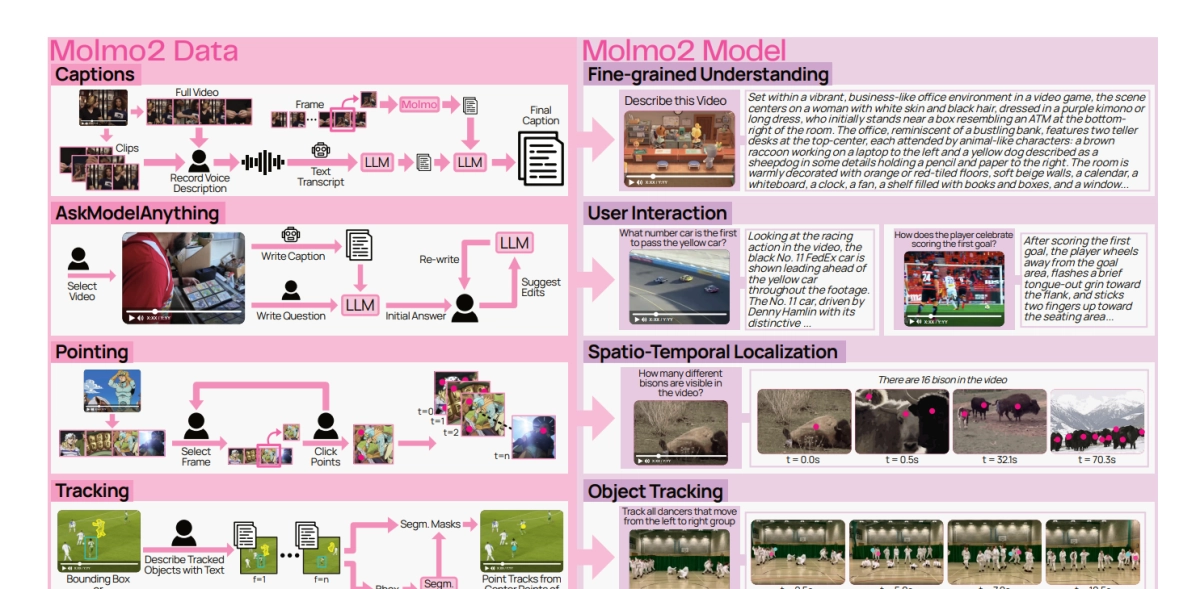

The core contribution of the Molmo2 project is a suite of nine novel datasets, all collected without relying on closed VLMs. These datasets target skills that are severely underrepresented in existing open data, particularly long-form video understanding and grounding.

These new datasets include:

- Dense Video Captioning: A corpus of 104,000 videos with captions averaging 924 words per video. This is substantially denser and more detailed than captions used in prior work such as LLaVA-Video (547 words) or ShareGPT4-Video (280 words), roughly 1.7 and 3.3 times as long, respectively. AI2 achieved this density with a multi-stage pipeline: human annotators first narrated descriptions aloud (speaking allows for more detail than typing), and the narrations were then transcribed and enriched with frame-level visual details.

- Video Pointing and Tracking: Dedicated large-scale datasets (over 520,000 instances) that teach the model to pinpoint specific moments and locations in space and time and to track objects continuously across complex video sequences.

- Long-Form QA: Human-authored question-answer pairs (212,000 instances) for multi-image and video inputs, created using a human-LLM collaboration loop that avoided proprietary model distillation.

By releasing the full training data, model weights (including 4B, 8B, and an OLMo-based 7B variant), and the training code, AI2 has provided the community with a transparent, high-quality foundation for future VLM research. This level of openness is crucial for accelerating innovation outside the control of major tech companies.

The training pipeline itself incorporates several innovations to handle the complexity of video and multi-image inputs efficiently. Molmo2 VLM uses a three-stage training process: image pre-training, joint video/image supervised fine-tuning (SFT), and a brief final long-context SFT stage.

To manage the wide variability in input lengths, from short text answers to videos requiring more than 16,000 tokens, AI2 developed an on-the-fly sequence packing algorithm and a message-tree encoding scheme. This system dramatically increased training throughput, packing an average of 3.8 examples into each sequence during SFT and yielding a 15x efficiency gain.
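AI2's actual packing algorithm and message-tree encoding are not detailed here, so the following is only a generic illustration of on-the-fly packing. It buffers a few incoming examples and fills each fixed-length sequence longest-first with whatever still fits. The function name `pack_on_the_fly`, the `(id, length)` representation, the buffer size, and the greedy policy are all assumptions.

```python
from typing import Iterable, Iterator, List, Tuple

Example = Tuple[str, int]  # hypothetical (example_id, token_length)


def pack_on_the_fly(
    stream: Iterable[Example], max_len: int, buffer_size: int = 8
) -> Iterator[List[Example]]:
    """Greedy buffered packing: yield lists of examples whose lengths sum to <= max_len."""
    buffer: List[Example] = []

    def emit() -> List[Example]:
        # Fill one sequence from the buffer, longest examples first.
        pack: List[Example] = []
        remaining = max_len
        for ex in sorted(buffer, key=lambda e: e[1], reverse=True):
            if ex[1] <= remaining:
                pack.append(ex)
                remaining -= ex[1]
        for ex in pack:
            buffer.remove(ex)
        return pack

    for ex in stream:
        if ex[1] > max_len:
            raise ValueError(f"example {ex[0]} ({ex[1]} tokens) exceeds max_len={max_len}")
        buffer.append(ex)
        if len(buffer) >= buffer_size:
            yield emit()
    # Flush whatever is left once the stream ends.
    while buffer:
        yield emit()


stream = [("a", 900), ("b", 300), ("c", 100), ("d", 600), ("e", 50)]
for pack in pack_on_the_fly(stream, max_len=1024, buffer_size=3):
    print([ex_id for ex_id, _ in pack], sum(n for _, n in pack))
```

Here five examples fit into two 1,024-token sequences instead of five padded ones. Because every buffered example fits on its own, each `emit()` call packs at least one example, so the flush loop always terminates. A real trainer would also need to keep packed examples from attending to one another, typically with a block-diagonal attention mask; that part is omitted.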

Furthermore, AI2 introduced a novel token-weighting scheme during fine-tuning. It prevents long outputs, such as dense video captions, from dominating the loss function and degrading performance on short-answer tasks like multiple-choice QA. The team also found that enabling bidirectional attention among visual tokens, even those from different frames, yielded notable performance gains.
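The exact weighting formula and masking rules are not given here. The sketch below illustrates both ideas under stated assumptions. Each token of an n-token target gets a hypothetical weight of 1/sqrt(n), so an example's total weight grows like sqrt(n) rather than n. A boolean attention mask keeps text tokens causal while letting every visual token attend to every other visual token. The mask assumes all frame tokens come before any supervised text; if supervised text were interleaved between frames, this mask could let earlier positions see later answer tokens, so a real implementation would need tighter rules. All function names are illustrative.

```python
import math
from typing import List


def token_weights(output_lengths: List[int]) -> List[List[float]]:
    """Assumed weighting: each token of an n-token target gets weight 1/sqrt(n)."""
    return [[1.0 / math.sqrt(n)] * n if n > 0 else [] for n in output_lengths]


def weighted_mean_loss(per_token_losses: List[List[float]]) -> float:
    """Weighted average of per-token losses over a batch of examples."""
    weights = token_weights([len(losses) for losses in per_token_losses])
    total = sum(
        w * loss
        for ws, losses in zip(weights, per_token_losses)
        for w, loss in zip(ws, losses)
    )
    norm = sum(sum(ws) for ws in weights)
    if norm == 0:
        raise ValueError("batch contains no target tokens")
    return total / norm


def visual_bidirectional_mask(token_types: str) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j.

    'v' marks a visual token (from any frame) and 't' a text token. Text stays
    causal; visual tokens additionally see all other visual tokens, including
    tokens from later frames.
    """
    n = len(token_types)
    return [
        [j <= i or (token_types[i] == "v" and token_types[j] == "v") for j in range(n)]
        for i in range(n)
    ]


# A 4-token multiple-choice answer (loss 1.0) next to a 900-token caption (loss 0.0).
batch = [[1.0] * 4, [0.0] * 900]
print(weighted_mean_loss(batch))  # short answer holds 2 of 32 units of weight
print(visual_bidirectional_mask("vvtt"))
```

With plain per-token averaging, the short answer would carry only 4/904 of the loss weight. Under the assumed 1/sqrt(n) weights it carries 2/32, so it is no longer drowned out by the caption.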

Molmo2 VLM is not just a high-performing model; it is a strategic open-source intervention. It proves that foundational video understanding, including the critical capability of pixel-level grounding, can be achieved transparently and openly, without relying on the black boxes of proprietary systems. For developers building next-generation applications in robotics, surveillance, or complex data analysis, Molmo2 VLM provides the necessary open infrastructure to move beyond high-level descriptions and into precise, actionable spatio-temporal intelligence.