Frontier AI models have become excellent at generating boilerplate functions, but a new, rigorous test of their debugging capabilities suggests they are nowhere near ready to handle production outages.

A benchmark released today, OTelBench, tested 14 leading large language models on their ability to perform a fundamental Site Reliability Engineering (SRE) task: adding distributed tracing to microservices using the industry standard, OpenTelemetry (OTel). The results are a stark reality check for the AI SRE hype cycle.

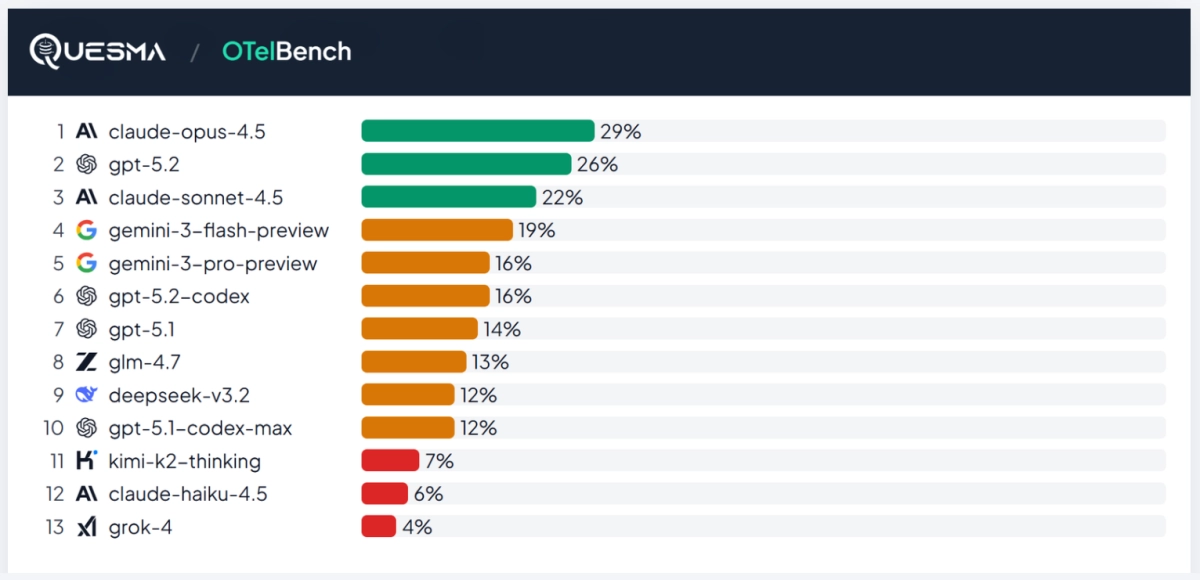

The overall pass rate across 23 tasks spanning 11 programming languages was a dismal 14%. Even the best performing model, Anthropic’s Claude Opus 4.5, succeeded only 29% of the time, while GPT 5.2 managed 26%.

Distributed tracing is essential for modern microservices architectures. When a user clicks "Login," that single action might hop across dozens of services. OTel provides the necessary instrumentation—code added to the application—to link these scattered events into a single, coherent timeline, allowing engineers to pinpoint where a request failed.

The OTelBench researchers, including Przemek Delewski and Jacek Migdał, designed tasks that would be trivial for a human SRE, involving short, clean microservices of around 300 lines of code. If the models cannot handle this, they certainly cannot handle the massive, legacy-ridden systems found in the real world.

The Context Gap and Silent Failures

The most critical failure mode identified by the benchmark was not compilation errors, but a profound lack of business context.

In one example, models were presented with a web service simulating two distinct user actions: a successful search and a failed token retrieval attempt. A human engineer would immediately recognize these as two separate user journeys, requiring two unique traces with different TraceIDs.

The LLMs failed this test consistently. Instead of generating two distinct traces, they mechanically instrumented every HTTP call and merged both user actions into a single, confusing timeline. The low-level HTTP calls were instrumented, but context was not propagated correctly, so the whole sequence appeared as one flat list of events rather than two hierarchical trees.
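To make the difference concrete, here is a minimal sketch in plain Python. It is a toy model of trace context, not the OpenTelemetry API and not code from the benchmark: the `Span` class, `start_span`, and the endpoint names are all hypothetical. A span started with no active parent opens a new trace; a span started inside another inherits its parent's trace ID.

```python
import contextvars
import secrets
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]


# Holds the span that is currently active, if any (toy stand-in for trace context).
_current = contextvars.ContextVar("current_span", default=None)
recorded = []  # finished spans, in the order they ended


@contextmanager
def start_span(name):
    parent = _current.get()
    span = Span(
        name=name,
        # No active parent means a new user journey, so mint a new trace ID.
        trace_id=parent.trace_id if parent else secrets.token_hex(16),
        span_id=secrets.token_hex(8),
        parent_id=parent.span_id if parent else None,
    )
    token = _current.set(span)
    try:
        yield span
    finally:
        _current.reset(token)
        recorded.append(span)


def http_call(name):
    # Hypothetical outgoing request; only the span bookkeeping matters here.
    with start_span(name):
        pass


def run_journeys_separately():
    # Two user actions, two root spans, two trace IDs.
    with start_span("user: search"):
        http_call("GET /search")
    with start_span("user: get token"):
        http_call("POST /token")


def run_journeys_conflated():
    # One wrapper span around everything: both actions share a single trace.
    with start_span("main"):
        http_call("GET /search")
        http_call("POST /token")


if __name__ == "__main__":
    run_journeys_separately()
    print(len({s.trace_id for s in recorded}), "trace(s) when separated")
    recorded.clear()
    run_journeys_conflated()
    print(len({s.trace_id for s in recorded}), "trace(s) when conflated")
```

Both versions run without error and both emit spans for every HTTP call. Only the placement of the root spans decides whether an engineer sees two journeys or one.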

This finding is crucial: many models produced code that compiled correctly but generated malformed or useless traces. For SRE work, "it builds" is not nearly enough. A malformed trace is arguably worse than no trace at all, as it provides misleading data during an outage.
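One way to catch this kind of silent failure is to assert on the shape of the emitted trace data rather than on the build. The sketch below is illustrative only. The article does not describe how OTelBench grades submissions, so `check_traces`, the `Span` tuple, and the sample data are assumptions made for this example.

```python
from collections import defaultdict
from typing import NamedTuple, Optional


class Span(NamedTuple):
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]


def check_traces(spans, expected_traces):
    """Return a list of problems; an empty list means the shape looks right."""
    problems = []
    by_trace = defaultdict(list)
    for span in spans:
        by_trace[span.trace_id].append(span)

    if len(by_trace) != expected_traces:
        problems.append(f"expected {expected_traces} traces, found {len(by_trace)}")

    for trace_id, members in by_trace.items():
        span_ids = {s.span_id for s in members}
        roots = [s for s in members if s.parent_id is None]
        # A well-formed trace is a tree: exactly one root span.
        if len(roots) != 1:
            problems.append(f"trace {trace_id}: expected 1 root span, found {len(roots)}")
        # Every non-root span must point at a span in the same trace.
        for s in members:
            if s.parent_id is not None and s.parent_id not in span_ids:
                problems.append(f"trace {trace_id}: span {s.name!r} has an unknown parent")
    return problems


# Hypothetical data for the two-journey scenario described above.
good = [
    Span("user: search", "t1", "a", None),
    Span("GET /search", "t1", "b", "a"),
    Span("user: get token", "t2", "c", None),
    Span("POST /token", "t2", "d", "c"),
]
conflated = [
    Span("GET /search", "t1", "a", None),
    Span("POST /token", "t1", "b", None),
]

if __name__ == "__main__":
    print(check_traces(good, expected_traces=2))
    print(check_traces(conflated, expected_traces=2))
```

The conflated data would pass any compile step, yet the check reports both a missing trace and a trace with two roots.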

The polyglot nature of modern systems also proved to be a major hurdle. The benchmark required models to work across 11 languages, reflecting the reality of cloud environments. While Go and C++ saw moderate success, models failed completely on several key languages. None of the 14 models solved a single task in Java, Ruby, or Swift, often struggling with dependency management and build systems, skills that extend far beyond simple code generation.

The researchers note that the tasks require polyglot backend development skills, including knowledge of CMake for C++ or module systems for Go. That knowledge is often newer than the models' training cut-off dates or outside the core focus of current AI development.

Cost and Efficiency Tradeoffs

While performance was low across the board, the benchmark did reveal significant differences in cost efficiency.

The best-performing model, Claude Opus 4.5, was also the most expensive. However, the budget-conscious winner was Gemini 3 Flash. Despite being 11 times cheaper and twice as fast as Claude Opus 4.5, Gemini 3 Flash achieved a 19% pass rate, three percentage points ahead of the more expensive Gemini 3 Pro, which scored 16%. This suggests that for low-level SRE assistance, speed and cost efficiency currently matter more than marginal gains in generalized intelligence.
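A rough back-of-the-envelope comparison shows how lopsided the tradeoff is. The sketch below assumes that "11 times cheaper" means a cost ratio of 11 to 1, and it uses pass rate divided by relative cost as the yardstick. That metric is an assumption for illustration, not one reported by the benchmark.

```python
# Figures quoted above. Cost is relative, with Gemini 3 Flash as the unit
# (assumption: "11 times cheaper" is read as a cost ratio of 11 to 1).
models = {
    "Claude Opus 4.5": {"pass_rate": 0.29, "relative_cost": 11.0},
    "Gemini 3 Flash": {"pass_rate": 0.19, "relative_cost": 1.0},
}


def passes_per_cost(pass_rate, relative_cost):
    # Illustrative metric: passes bought per unit of spend.
    return pass_rate / relative_cost


scores = {name: passes_per_cost(**m) for name, m in models.items()}
ratio = scores["Gemini 3 Flash"] / scores["Claude Opus 4.5"]

if __name__ == "__main__":
    for name, score in scores.items():
        print(f"{name}: {score:.3f} passes per unit cost")
    print(f"Flash advantage: {ratio:.1f}x")
```

Under these assumptions Gemini 3 Flash delivers roughly seven times as many passes per unit of spend. The figure ignores the cost of reviewing the failed attempts, which dominate for both models.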

The OTelBench results, released by QuesmaOrg, confirm that the promise of AI SRE remains largely marketing hype in early 2026. While LLMs can assist in writing functions, they lack the long-horizon reasoning and contextual awareness required to reliably instrument and debug complex distributed systems.