A heated Facebook post by Yann LeCun — Meta's Chief AI Scientist and one of the three "Godfathers of Deep Learning" — ignited a surprisingly rich public debate about one of the most consequential questions in AI development: should AI systems have explicit goals?

The exchange, sparked by remarks Yoshua Bengio made at the AI Impact Summit in Delhi, drew hundreds of comments from researchers, engineers, and AI practitioners — and ultimately revealed that the two Turing Award winners may not be as far apart as LeCun's initial post suggested.

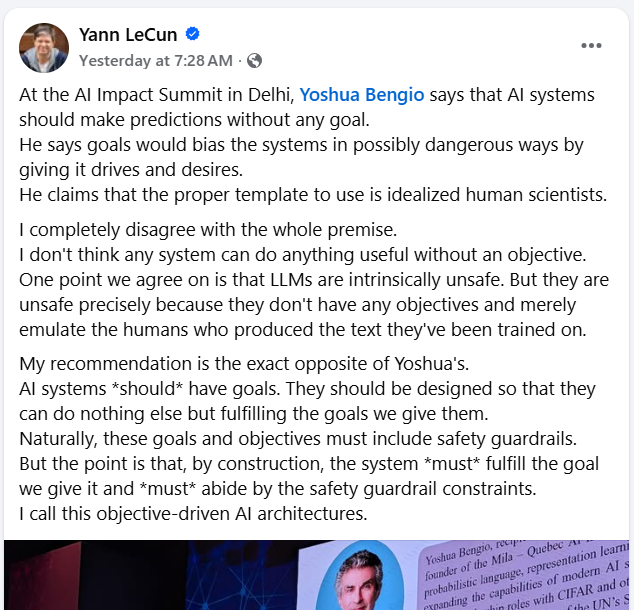

What LeCun Said

LeCun opened by taking sharp issue with what he took to be Bengio's position: that AI systems should make predictions without any goal. Goal-free AI modeled on an "idealized human scientist," he argued, would be both impractical and counterproductive.

"I don't think any system can do anything useful without an objective," LeCun wrote, adding that LLMs are "intrinsically unsafe precisely because they don't have any objectives and merely emulate the humans who produced the text they've been trained on."

His prescription — what he calls "objective-driven AI architectures" — holds that AI systems should be explicitly engineered with goals and safety guardrails baked in by construction, such that the system literally cannot deviate from them.
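To make "guardrails baked in by construction" concrete, here is a minimal Python sketch. It is an illustration, not LeCun's actual architecture: the names `choose_action`, `task_cost`, and `no_destructive` are hypothetical, and the toy costs are made up. The idea it shows is that guardrails act as hard constraints that remove actions before the objective is ever optimized, rather than as penalties the system can trade off against.

```python
from typing import Callable, Iterable, Optional

Action = str
Guardrail = Callable[[Action], bool]  # returns True if the action is permitted


def choose_action(
    candidates: Iterable[Action],
    task_cost: Callable[[Action], float],
    guardrails: Iterable[Guardrail],
) -> Optional[Action]:
    """Pick the lowest-cost action among those that pass every guardrail."""
    guardrails = list(guardrails)
    # Hard constraint: forbidden actions never reach the optimizer.
    permitted = [a for a in candidates if all(g(a) for g in guardrails)]
    if not permitted:
        return None  # no safe action exists, so do nothing
    return min(permitted, key=task_cost)


# Toy example (hypothetical actions and costs).
costs = {"delete_all_logs": 0.1, "archive_logs": 0.4, "do_nothing": 1.0}


def no_destructive(action: Action) -> bool:
    return not action.startswith("delete")


best = choose_action(costs, costs.__getitem__, [no_destructive])
print(best)  # archive_logs
```

The cheapest action overall is `delete_all_logs`, but it is filtered out before the cost is compared, so the system settles on the cheapest permitted one.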

Bengio Fires Back — Calmly

Bengio's reply was measured but pointed. He clarified that he never argued against AI having goals at all — only against AI having implicit or uncontrolled goals that are misaligned with human intentions, the kind that silently emerge from training without designers even realizing it.

"Once you have a non-agentic conditional probability estimator, you can of course use it to build an agent," Bengio wrote, describing his "Scientist AI" concept: a system whose training objective is to approximate Bayesian posterior predictions without developing the kind of hidden agenda that, in his account, frontier LLMs can acquire.

The distinction is subtle but important: Bengio isn't arguing for goal-free AI. He's arguing for making the difference between a prediction system and an agentic decision-maker explicit and controllable — separating the cognitive from the executive layer.
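A toy Python sketch of that distinction follows. It is not Bengio's Scientist AI, only a small stand-in: the `Predictor` class is a textbook Beta-Bernoulli posterior predictive, and the `act` function, the `gain`/`loss` parameters, and the action names are assumptions made for illustration. The point is that the predictor holds no actions or utilities; the goal lives entirely in the separate wrapper that consumes its probabilities.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Predictor:
    """Non-agentic estimator: a Beta-Bernoulli posterior over a success rate."""

    alpha: float = 1.0  # prior pseudo-count of successes
    beta: float = 1.0   # prior pseudo-count of failures

    def update(self, outcome: bool) -> "Predictor":
        # Bayesian update: returns a new predictor, mutates nothing.
        return Predictor(self.alpha + outcome, self.beta + (not outcome))

    def prob_success(self) -> float:
        # Posterior predictive probability that the next outcome is a success.
        return self.alpha / (self.alpha + self.beta)


def act(predictor: Predictor, gain: float, loss: float) -> str:
    """Agent wrapper: the explicit goal (gain vs. loss) is defined here only."""
    p = predictor.prob_success()
    expected_utility = p * gain - (1 - p) * loss
    return "proceed" if expected_utility > 0 else "abstain"


predictor = Predictor()
for outcome in [True, True, False, True]:
    predictor = predictor.update(outcome)

print(round(predictor.prob_success(), 3))    # 0.667
print(act(predictor, gain=1.0, loss=1.0))    # proceed
print(act(predictor, gain=1.0, loss=3.0))    # abstain
```

The same predictor yields different decisions under different utilities, which is what makes the goal explicit and swappable rather than something absorbed during training.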

Where They Actually Agree

Buried beneath the sparring is a striking amount of common ground. Both researchers agree that current LLMs are unsafe. Both agree that implicit goals are dangerous. And both ultimately advocate for some form of explicit goal specification combined with harm-avoidance mechanisms.

The sharpest synthesis came from Balázs Kégl, who noted that "there are no predictions without goals — the question is whether the goals are explicit or implicit." Bengio immediately endorsed this framing. LeCun's entire architecture proposal rests on making goals explicit and controllable — which is structurally consistent with what Bengio described.

Why This Debate Matters

The exchange isn't just academic sparring. It maps directly onto competing design philosophies currently playing out across the AI industry.

LeCun's JEPA (Joint Embedding Predictive Architecture) research at Meta explicitly pursues objective-driven architectures. Bengio's work leans toward probabilistic reasoning systems with careful agentic constraints layered on top. Both approaches are live research bets with real downstream implications for whether frontier AI systems are auditable, aligned, and safe at scale.

Contributor Kenneth C. Zufelt III offered what may be the most pragmatic synthesis in the comments: a clean architectural separation between a non-agentic cognition layer — free to model, predict, and simulate — and a strictly governed executive layer that controls what actions actually get committed to the world.

That framing happens to describe what both LeCun and Bengio are reaching toward. The disagreement may be more about emphasis and risk prioritization than fundamental philosophy.
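The cognition/executive split Zufelt describes can be sketched in a few lines of Python. Everything here is hypothetical (the `Proposal` type, the `cognition_layer` function, the `ExecutiveLayer` class, and the allow-list policy); it is one possible reading of the comment, not a design taken from the thread. The cognition layer only returns proposals, and nothing is committed unless the executive layer approves and logs it.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Proposal:
    action: str
    predicted_outcome: str


def cognition_layer(goal: str) -> list[Proposal]:
    """Free to model and simulate, but has no side effects of its own."""
    return [
        Proposal("send_email", f"stakeholders informed about {goal}"),
        Proposal("wire_funds", f"vendor paid for {goal}"),
    ]


@dataclass
class ExecutiveLayer:
    """Strictly governed gate between proposals and the outside world."""

    allowed_actions: frozenset[str]
    audit_log: list[str] = field(default_factory=list)

    def commit(self, proposal: Proposal) -> bool:
        approved = proposal.action in self.allowed_actions
        verdict = "COMMIT" if approved else "REJECT"
        # Every decision is recorded, approved or not, so it can be audited.
        self.audit_log.append(f"{verdict} {proposal.action}")
        return approved


executive = ExecutiveLayer(allowed_actions=frozenset({"send_email"}))
results = [executive.commit(p) for p in cognition_layer("Q3 report")]

print(results)              # [True, False]
print(executive.audit_log)  # ['COMMIT send_email', 'REJECT wire_funds']
```

Keeping the policy in one small, inspectable component is the design choice that matters here: the cognition layer can grow arbitrarily capable without widening what the system is allowed to do.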

The Bottom Line

If you strip away the Facebook post format and the initial mischaracterization, what you have is two of the field's most credible thinkers converging on the same core problem: how do you build AI systems that are genuinely useful while ensuring their objectives remain transparent, auditable, and aligned with human intent?

The answer, it seems, requires both of their frameworks — explicit goal specification and a clean separation between prediction and agency. The industry would do well to treat this not as a fight to win, but as a design spec to build from.