Perplexity AI today launched the Deep Research Accuracy, Completeness, and Objectivity (DRACO) Benchmark, an open-source tool designed to evaluate AI agents based on how users actually conduct complex research. The release aims to close the gap between AI models that excel at synthetic tasks and agents that can serve authentic user needs.

DRACO is model-agnostic, so it can be rerun as more powerful AI agents emerge, and Perplexity has committed to publishing updated results. This also means that performance gains in products like Perplexity.ai's Deep Research feature will be directly reflected in the published scores.

Evaluating AI's Research Prowess

Perplexity AI describes its Deep Research capabilities as state-of-the-art, citing strong performance on existing benchmarks such as Google DeepMind's DeepSearchQA and Scale AI's ResearchRubrics. The company attributes this performance to a combination of leading AI models and its proprietary tools, including its search infrastructure and code execution capabilities.

However, the company found that existing benchmarks often fail to capture the nuanced demands of real-world research. To address this limitation, it built DRACO from millions of production tasks spanning ten domains, including Academic, Finance, Law, Medicine, and Technology.

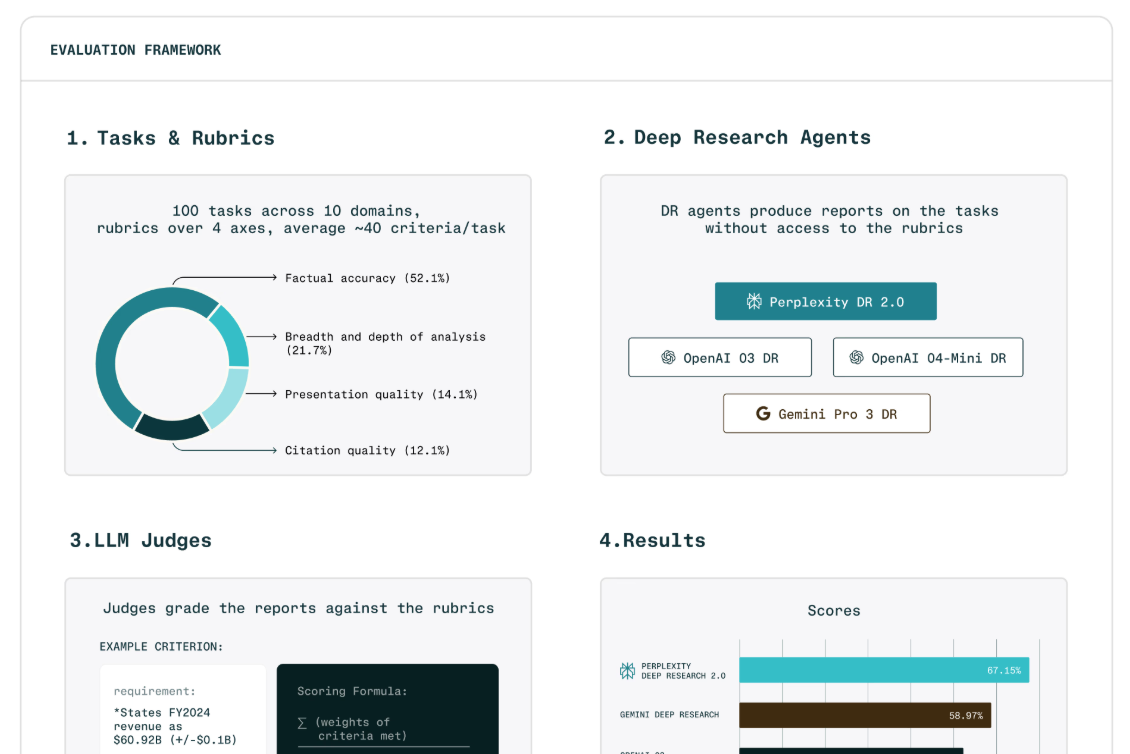

Unlike benchmarks that test narrow skills, DRACO focuses on synthesis across sources, nuanced analysis, and actionable guidance, all while prioritizing accuracy and proper citation. The benchmark features 100 curated tasks, each with detailed evaluation rubrics developed by subject matter experts.

Production-Grounded Evaluation

The tasks in the DRACO Benchmark originate from actual user requests on Perplexity Deep Research. These requests pass through a rigorous five-stage pipeline covering personal information removal, context and scope augmentation, objectivity and difficulty filtering, and final expert review. This process keeps the benchmark grounded in authentic user needs.
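
The pipeline can be pictured as a chain of stages, each of which either rewrites a task or drops it. The Python sketch here is purely illustrative and is not Perplexity's implementation: the `Task` type and all stage functions are hypothetical, each stage is a toy stand-in (email masking for personal information removal, a domain tag for scope augmentation, a word-count threshold for filtering), and the final expert review, a human step, is not modeled.

```python
import re
from dataclasses import dataclass, replace
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Task:
    """Hypothetical task record: a user prompt and its research domain."""
    prompt: str
    domain: str


# A stage returns a (possibly rewritten) task, or None to drop the task.
Stage = Callable[[Task], Optional[Task]]

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def redact_emails(task: Task) -> Optional[Task]:
    # Toy stand-in for personal information removal: masks email addresses only.
    return replace(task, prompt=EMAIL.sub("[EMAIL]", task.prompt))


def add_scope(task: Task) -> Optional[Task]:
    # Toy stand-in for context and scope augmentation: prefixes the domain.
    return replace(task, prompt=f"[{task.domain}] {task.prompt}")


def keep_if_substantial(task: Task) -> Optional[Task]:
    # Toy stand-in for objectivity and difficulty filtering: drops very short prompts.
    return task if len(task.prompt.split()) >= 8 else None


def run_pipeline(tasks: Iterable[Task], stages: list[Stage]) -> list[Task]:
    """Pass each task through the stages in order; keep only tasks no stage dropped."""
    kept: list[Task] = []
    for task in tasks:
        current: Optional[Task] = task
        for stage in stages:
            current = stage(current)
            if current is None:
                break
        if current is not None:
            kept.append(current)
    return kept
```

Usage: `run_pipeline(raw_tasks, [redact_emails, add_scope, keep_if_substantial])`. Keeping stages as plain functions makes it easy to reorder them or swap a toy stage for a real one.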

Rubrics were developed with a data generation partner and subject matter experts, and approximately 45% of them were revised during the process. The rubrics assess factual accuracy, breadth and depth of analysis, presentation quality, and source citation, using weighted criteria and penalties for errors such as hallucinations.
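
To make weighted criteria and penalties concrete, here is a minimal scoring sketch under stated assumptions: each criterion carries a weight, positive for desirable properties and negative for penalized errors such as hallucinations, and a response's score is the weight it earns divided by the maximum attainable positive weight, floored at zero. The `Criterion` type, the normalization, and the floor are all assumptions for illustration; the article does not specify DRACO's exact formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """Hypothetical rubric item. Positive weight: reward if met.
    Negative weight: penalty if the described error is present."""
    description: str
    dimension: str  # e.g. "factual_accuracy", "breadth_depth", "citation"
    weight: float


def rubric_score(criteria: list[Criterion], verdicts: list[bool]) -> float:
    """Normalized score for one response, given one binary verdict per criterion.

    For penalty criteria, a True verdict means the error (e.g. a hallucination)
    was found, so its negative weight is added. The total is divided by the
    sum of positive weights and floored at 0.0 (both choices are assumptions).
    """
    if len(criteria) != len(verdicts):
        raise ValueError("expected exactly one verdict per criterion")
    max_points = sum(c.weight for c in criteria if c.weight > 0)
    if max_points <= 0:
        raise ValueError("rubric needs at least one positively weighted criterion")
    earned = sum(c.weight for c, hit in zip(criteria, verdicts) if hit)
    return max(0.0, earned / max_points)
```

For example, a response that meets a 3-point criterion, misses a 1-point criterion, and triggers a 2-point hallucination penalty earns (3 - 2) / 4 = 0.25.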

Responses are evaluated with an LLM-as-judge protocol in which each rubric criterion receives a binary verdict. Grounded in real-world search data, this method turns subjective assessment into verifiable fact-checking. Reliability was confirmed across multiple judge models, which produced consistent relative rankings of the systems.
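
The judge protocol and the reliability check can be sketched in a few lines. This assumes a hypothetical `Judge` callable that returns one yes/no verdict per criterion; a real one would send the released judge prompt to an LLM, and the keyword matcher here is only a stand-in. Ranking consistency is measured as the fraction of system pairs that two judges order the same way, a simple concordance measure in the spirit of Kendall's tau. The article does not say which agreement statistic Perplexity used.

```python
from itertools import combinations
from typing import Callable

# Hypothetical judge interface: (response, criterion) -> binary verdict.
Judge = Callable[[str, str], bool]


def keyword_judge(response: str, criterion: str) -> bool:
    # Toy stand-in for an LLM judge: passes if the criterion text appears verbatim.
    return criterion.lower() in response.lower()


def judge_response(judge: Judge, response: str, criteria: list[str]) -> list[bool]:
    """One independent yes/no verdict per rubric criterion."""
    return [bool(judge(response, c)) for c in criteria]


def pairwise_rank_agreement(scores_a: dict[str, float], scores_b: dict[str, float]) -> float:
    """Fraction of system pairs that two judges order the same way.

    Tied pairs count as disagreements; 1.0 means identical relative rankings.
    """
    if set(scores_a) != set(scores_b):
        raise ValueError("both judges must score the same systems")
    pairs = list(combinations(sorted(scores_a), 2))
    if not pairs:
        raise ValueError("need at least two systems to compare rankings")
    agree = sum(
        1
        for x, y in pairs
        if (scores_a[x] - scores_a[y]) * (scores_b[x] - scores_b[y]) > 0
    )
    return agree / len(pairs)
```

Two judges can disagree on absolute scores yet still agree perfectly on ordering, which is the property the article reports.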

Tooling and Findings

The DRACO benchmark is designed to evaluate agents equipped with a full research toolset, including code execution and browser capabilities. This allows for a comprehensive assessment of advanced AI research agents.

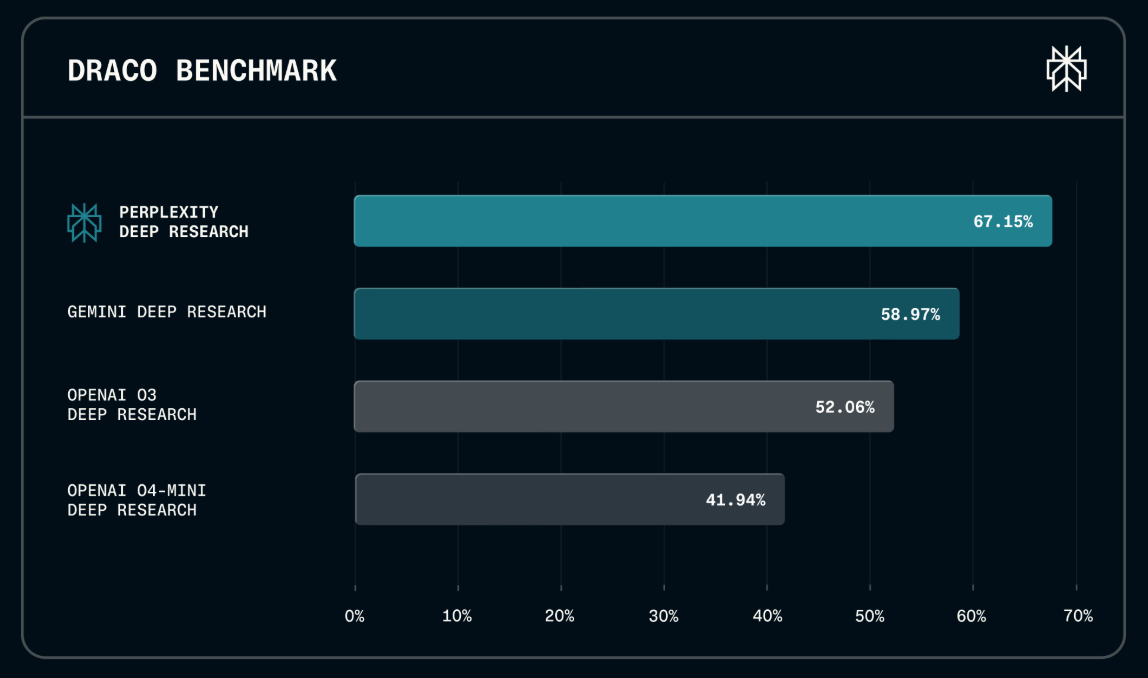

In an evaluation of four deep research systems, Perplexity's Deep Research achieved the highest pass rates in all ten domains, with particularly strong results in Law (89.4%) and Academic (82.4%). According to the company, this translates into more reliable AI-generated answers for users in critical fields.

Performance gaps widened significantly on complex reasoning tasks, such as Personalized Assistant and "Needle in a Haystack" scenarios, where Perplexity outperformed the lowest-scoring system by more than 20 percentage points. These areas mirror common user needs: personalized recommendations and fact retrieval from long documents.
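
The headline numbers are percentages, and the gaps between systems are differences in percentage points, which is not the same as a relative percentage improvement. The sketch here assumes, for illustration only, that a domain's pass rate is the share of rubric criteria passed across all of its tasks; the function names and toy data are hypothetical and not taken from DRACO.

```python
from collections import defaultdict


def pass_rates_by_domain(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Percentage of criteria passed per domain, from (domain, passed) records."""
    totals: dict[str, int] = defaultdict(int)
    passed: dict[str, int] = defaultdict(int)
    for domain, ok in records:
        totals[domain] += 1
        passed[domain] += int(ok)
    return {d: 100.0 * passed[d] / totals[d] for d in totals}


def gap_in_points(rate_a: float, rate_b: float) -> float:
    """Signed difference between two percentages, in percentage points."""
    return rate_a - rate_b


def relative_gain(rate_a: float, rate_b: float) -> float:
    """Relative improvement of rate_a over rate_b, in percent."""
    return 100.0 * (rate_a - rate_b) / rate_b
```

With toy rates of 75% and 50%, the gap is 25 percentage points, while the relative improvement is 50%.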

Perplexity's Deep Research also led in Factual Accuracy, Breadth and Depth of Analysis, and Citation Quality, underscoring its strength in dimensions crucial for trustworthy decision-making. Presentation Quality was the only area where another system showed comparable performance, indicating that core research challenges lie in factual correctness and analytical depth.

Notably, the top-performing system also had the lowest latency, completing tasks significantly faster than its competitors. Perplexity attributes this combination of speed and accuracy to its vertically integrated infrastructure, which lets it optimize both its search and execution environments.

The Road Ahead

Perplexity plans to continuously update DRACO benchmark evaluations as stronger AI models emerge. Improvements to Perplexity.ai's Deep Research will also be reflected in benchmark scores.

Future iterations of the benchmark will expand linguistic diversity, incorporate multi-turn interactions, and broaden domain coverage beyond the current English-only, single-turn scope.

The company is releasing the full benchmark, rubrics, and judge prompt as open source, empowering other teams to build better research agents grounded in real-world tasks. Detailed methodology and results are available in a technical report and on Hugging Face.