Gartner’s latest Emerging Market Quadrant (eMQ) for Generative AI Specialized Cloud Infrastructure arrives at a pivotal moment, as enterprises shift from early-stage model experimentation to globally distributed deployment strategies. The result is a rapidly changing ecosystem that no longer resembles the cloud market of the past decade.

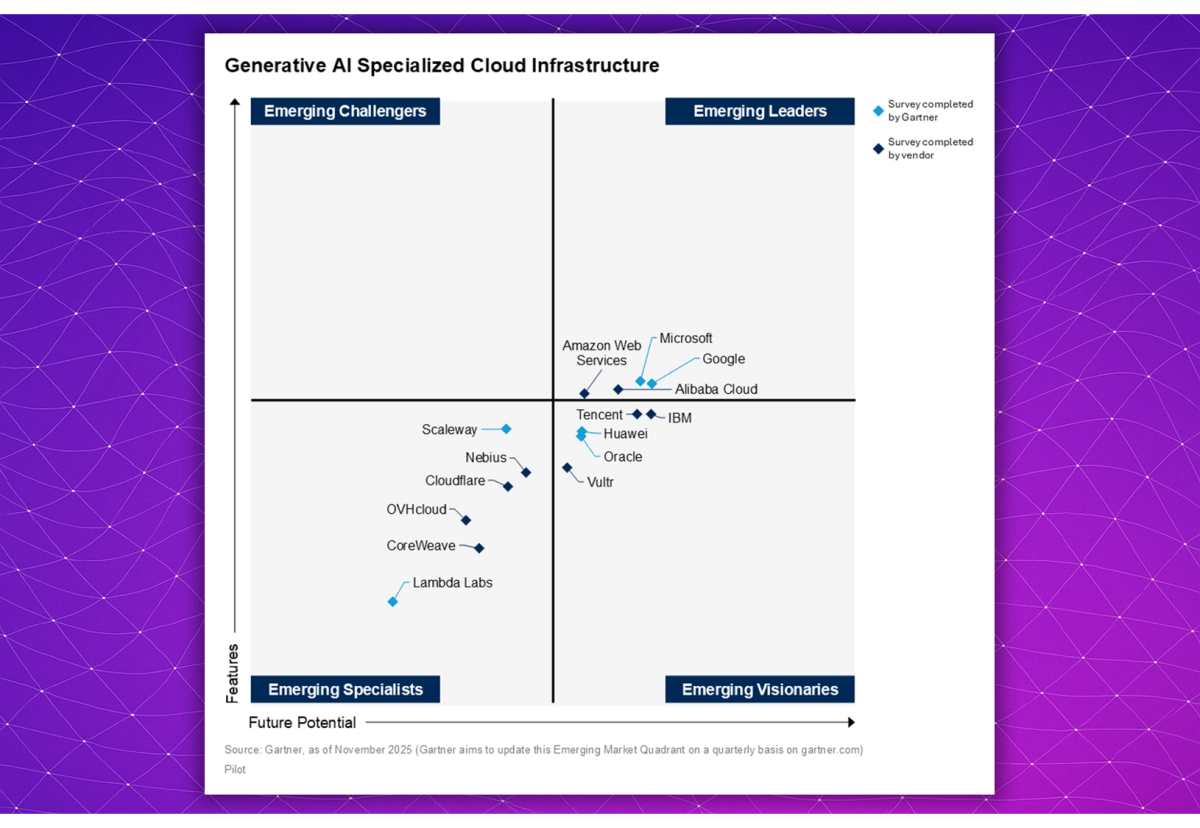

In the 2025 edition, the Emerging Leaders quadrant is unsurprisingly dominated by the four hyperscalers that have built the largest GPU and AI-optimized compute footprints: Microsoft, Google, AWS, and Alibaba Cloud. Their positioning reflects large-scale procurement pipelines for accelerators, mature orchestration stacks, global networking fabrics, and tightly integrated enterprise ecosystems that make them the default choice for many large organizations.

But the real story of this year’s quadrant lies outside the top-right corner. The spread of companies across the Visionaries and Specialists categories shows how generative AI infrastructure is decentralizing - and how an increasingly diverse set of providers is competing for different slices of the market.

The Emerging Visionaries quadrant features a mix of global enterprise vendors and fast-moving alternative clouds. IBM, Tencent, Huawei, and Oracle appear here, each attempting to expand their AI infrastructure portfolios to compete more directly with the market leaders. Their presence reflects investments in high-density GPU clusters, sovereign cloud regions, and new AI-specific networking stacks.

Independent cloud providers also factor into this quadrant, signaling a shift in how organizations evaluate AI deployment strategies. Among them, Vultr stands out for its ability to bring new accelerator generations - such as AMD Instinct MI355X, MI325X, and MI300X - to market earlier than many larger competitors. This pattern of rapid hardware availability, combined with globally distributed regions and transparent pricing, is contributing to Vultr’s growing credibility as an alternative hyperscaler for generative AI workloads. As enterprises push into production workloads and face availability constraints, cost complexity, and lock-in pressures on the hyperscalers, they are increasingly exploring alternative platforms with more flexible pricing and deployment models. This trend highlights the growing relevance of independent clouds in the broader AI infrastructure conversation.

The Emerging Specialists quadrant, meanwhile, includes players such as CoreWeave, Lambda Labs, Cloudflare, OVHcloud, Scaleway, and Nebius. These companies tend to operate with narrower focus areas - whether that’s GPU-dense clusters for training, developer-centric ML stacks, edge inference networks, or strong regional sovereignty guarantees. Their placement underscores how the generative AI market is fragmenting into specialized deployment needs rather than converging toward a single architectural model.

In parallel with these market dynamics, new hardware availability is also influencing infrastructure decisions. The latest accelerator generations deliver strong throughput, improved power efficiency, and advanced acceleration features, while integrating with full cloud networking and storage stacks - a combination that is beginning to reshape cost-performance expectations for large-scale training and AI inference.

Taken together, this year’s eMQ paints a picture of a sector in transition. The hyperscalers still lead in end-to-end capability and scale, but they no longer define the entire trajectory of AI infrastructure. Instead, a multi-layered ecosystem is emerging: established enterprise vendors upgrading their stacks, alternative clouds gaining traction, and specialist providers carving out high-value niches.

For organizations planning their 2026 and 2027 AI roadmaps, the message is clear. The infrastructure market is diversifying, not consolidating. And as generative AI workloads evolve from isolated model execution to globally distributed, latency-sensitive pipelines, the range of credible providers - and the range of architectural choices - is poised to grow even further.