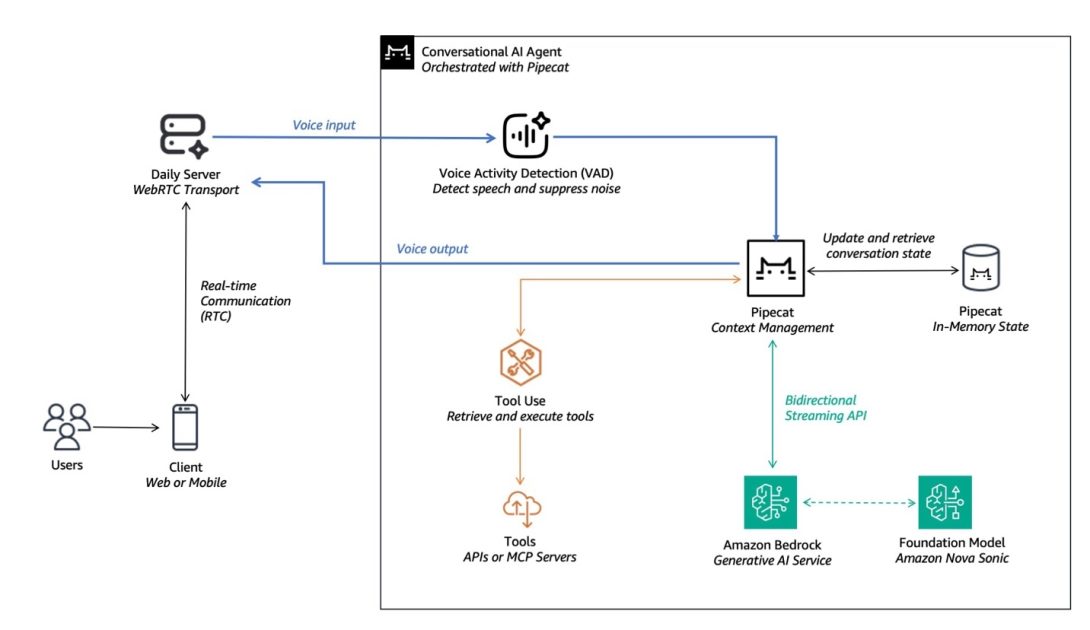

AWS and Pipecat have announced new capabilities for building intelligent AI voice agents. The collaboration integrates Amazon Nova Sonic, a new speech-to-speech foundation model, directly into the open-source Pipecat framework (v0.0.67). Nova Sonic simplifies voice AI development by delivering real-time, human-like conversations from a single model.

Previously, building voice AI agents often required orchestrating multiple cascaded models for Automatic Speech Recognition (ASR), Natural Language Understanding (NLU), and Text-to-Speech (TTS). Each handoff between models added latency, and conversational nuances could be lost along the way. Amazon Nova Sonic unifies these components into a single model that processes audio in real time in one forward pass, significantly reducing latency. The model dynamically adjusts its responses based on acoustic characteristics and conversational context, recognizing subtleties such as pauses and turn-taking cues, which makes dialogue more fluid and contextually appropriate. Nova Sonic also supports tool use and agentic RAG with Amazon Bedrock Knowledge Bases, enabling agents to retrieve information and perform actions.

Streamlining Intelligent AI Voice Agent Development

The integration of Nova Sonic into Pipecat gives developers a straightforward path to implementing state-of-the-art speech capabilities. Kwindla Hultman Kramer, CEO of Daily.co and creator of Pipecat, describes Nova Sonic as a "leap forward" for real-time voice AI, noting its bidirectional streaming API, natural voices, and robust tool-calling capabilities. Together, these let developers build agents that understand, respond, and take meaningful actions such as scheduling appointments. AWS and Pipecat also plan future integrations with Amazon Connect and the Strands agentic framework, aiming to transform contact centers and enable sophisticated multi-agent workflows. A comprehensive code example is available to help developers get started with Amazon Nova Sonic and Pipecat; its conversation logic and model selection can be customized for specific needs.

To further extend agentic capabilities, the integration supports delegating complex queries to external agents, such as Strands Agents. This "agent as tools" pattern lets the voice agent offload multi-step tasks and complex reasoning. For instance, a Strands agent can take a query like "What is the weather near the Seattle Aquarium?", execute multiple tool calls (e.g., search places, get weather), and return a summarized response for the voice agent to speak. The result is that intelligent AI voice agents become both easier to build and more capable.
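
The delegation flow described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the Pipecat, Nova Sonic, or Strands API: the names `VoiceAgent`, `SubAgent`, `search_places`, `get_weather`, and `delegate_query` are hypothetical, and the tools return canned placeholder data rather than calling real services.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical tools that return canned placeholder data (no network calls).
def search_places(name: str) -> dict:
    return {"name": name, "city": "Seattle"}


def get_weather(city: str) -> str:
    return f"placeholder forecast for {city}"


@dataclass
class SubAgent:
    """Hypothetical delegate agent that chains several tool calls."""

    calls: List[str] = field(default_factory=list)

    def __call__(self, query: str) -> str:
        # Naive parsing, for illustration only: take the text after "near the".
        place_name = query.split("near the ")[-1].rstrip("?")
        place = search_places(place_name)
        self.calls.append("search_places")
        weather = get_weather(place["city"])
        self.calls.append("get_weather")
        # Summarize the intermediate results into one speakable answer.
        return f"Near {place['name']}: {weather}"


class VoiceAgent:
    """Hypothetical voice agent that exposes a sub-agent as a single tool."""

    def __init__(self) -> None:
        self.tools: Dict[str, Callable[[str], str]] = {}

    def register_tool(self, name: str, fn: Callable[[str], str]) -> None:
        self.tools[name] = fn

    def handle_tool_call(self, name: str, query: str) -> str:
        # The voice agent sees one tool; the sub-agent hides the multi-step work.
        return self.tools[name](query)


if __name__ == "__main__":
    agent = VoiceAgent()
    agent.register_tool("delegate_query", SubAgent())
    print(agent.handle_tool_call(
        "delegate_query", "What is the weather near the Seattle Aquarium?"
    ))
```

The design point is that the voice agent only needs to know about one tool, which keeps the real-time conversation loop simple while the delegated agent decides which tools to call and in what order.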