The video demonstration of Anthropic’s Claude, which highlights its new interactive tool integration, shows more than a minor feature update; it illustrates a fundamental shift from generative AI as a static content engine to an interactive, multi-modal workflow agent. This capability moves the large language model (LLM) from the role of a powerful assistant to that of a central operating layer for complex business tasks. That shift challenges the established paradigm of enterprise software integration and merits attention from VCs and technology leaders focused on operational efficiency. The product showcase centers on a user managing a dashboard redesign rollout, a task that requires rapid coordination across design, data validation, project management, and communication tools.

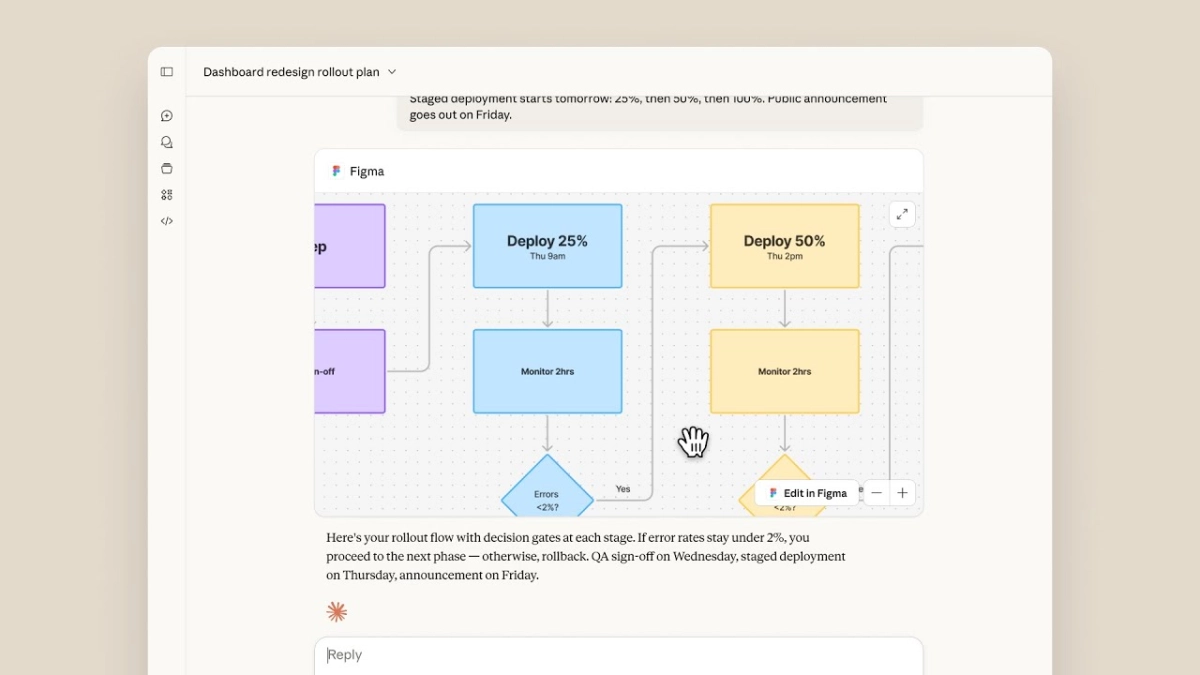

The user, identified as Jared, initiates a complex, multi-stage request entirely within the Claude interface, asking the LLM to map out a rollout plan for a dashboard redesign and specifying the stages: "25%, then 50%, [and] public announcement goes out on Friday." Claude, using its Opus 4.5 model, immediately translates this prompt into a structured, visual deliverable. Rather than simply describing the process, the LLM integrates with Figma to generate a complete, editable flowchart. This diagram is not a static image but a fully realized workflow, complete with defined stages, required monitoring times ("Monitor 2hrs"), and critical decision gates, such as checking whether "Errors < 2%?" holds before either proceeding to the next deployment phase or triggering a rollback. This initial step underscores a core insight: the AI demonstrates operational agency not just by generating content, but by structuring and visualizing complex conditional logic within an external application.
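The conditional logic in that flowchart can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the logic Claude or Figma actually produced: the function names, the `StageResult` type, and the `observe_error_rate` callback are all hypothetical, and the callback is assumed to return the error rate measured over the two-hour monitoring window. Only the stage percentages and the 2% threshold come from the demo.

```python
from dataclasses import dataclass
from typing import Callable, List

# Threshold taken from the "Errors < 2%?" decision gate in the flowchart.
ERROR_THRESHOLD = 0.02


@dataclass
class StageResult:
    percent: int        # share of users receiving the new dashboard
    error_rate: float   # error rate observed during the monitoring window
    passed: bool        # True if the gate allows the rollout to continue


def run_rollout(
    stages: List[int],
    observe_error_rate: Callable[[int], float],  # hypothetical monitoring hook
) -> List[StageResult]:
    """Walk the stages in order and stop at the first failing gate."""
    results: List[StageResult] = []
    for percent in stages:
        error_rate = observe_error_rate(percent)
        passed = error_rate < ERROR_THRESHOLD
        results.append(StageResult(percent, error_rate, passed))
        if not passed:
            break  # a failed gate halts the rollout
    return results


def next_action(results: List[StageResult], stages: List[int]) -> str:
    """Map the rollout state to the flowchart's outcomes."""
    if results and not results[-1].passed:
        return "rollback"
    if len(results) == len(stages):
        return "announce"   # every gate passed; the announcement can go out
    return "continue"
```

Calling `run_rollout([25, 50], observer)` with an observer that always reports a 1% error rate passes both gates, so `next_action` returns `"announce"`; an observer that reports 5% at the 50% stage yields `"rollback"` instead.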

The workflow continues interactively, mimicking the natural, iterative thought process of a product manager. After the flowchart is presented, the user prompts the system for data validation, stating: "Nice! Pull up the engagement data so I can validate we're ready." Claude instantly shifts context, integrating with Amplitude to pull and visualize key product metrics. The resulting line chart compares the staging group (new widgets) against the control group (current), showing the "Avg. widget interaction per user" over the last seven days. The system not only retrieves the data but analyzes it, concluding that the staging group's engagement "is up about 40% compared to control." This instantaneous, cross-platform data validation is critical, allowing the user to make a high-stakes deployment decision without the friction of switching tabs, logging into separate dashboards, and manually synthesizing the findings.
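To make the "up about 40%" comparison concrete, the following sketch computes a relative uplift between two groups. The daily values are made-up placeholders chosen only to yield a 40% difference; they are not the data shown in the demo, and `relative_uplift` is a hypothetical helper rather than anything provided by Amplitude or Claude.

```python
from statistics import mean
from typing import Sequence


def relative_uplift(staging: Sequence[float], control: Sequence[float]) -> float:
    """Relative change of the staging group's mean versus the control group's mean."""
    control_mean = mean(control)
    if control_mean == 0:
        raise ValueError("control mean is zero; relative uplift is undefined")
    return (mean(staging) - control_mean) / control_mean


# Placeholder values for "Avg. widget interaction per user" over seven days.
# These numbers are illustrative assumptions, not figures from the demo.
control_daily = [5.0, 5.0, 5.0, 5.0, 5.0, 5.0, 5.0]
staging_daily = [7.0, 7.0, 7.0, 7.0, 7.0, 7.0, 7.0]

uplift = relative_uplift(staging_daily, control_daily)
print(f"Staging vs. control: {uplift:+.0%}")
```

A real validation step would presumably also check sample sizes and variance before treating the difference as meaningful; the sketch covers only the arithmetic behind the headline number.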

This seamless, real-time movement between diagramming (Figma) and analytics (Amplitude) without leaving the conversational interface is the primary value proposition for the enterprise. For founders and operational leaders, the elimination of context-switching friction translates directly into accelerated decision cycles and reduced cognitive load. The LLM is effectively serving as the unified command center for the entire operational stack.

Once validation is complete, the workflow moves to execution. The user instructs Claude: "Numbers look good! Go ahead and add the tasks to the Dashboard Redesign project." Claude then integrates with Asana, operationalizing the staged rollout plan. It creates a dedicated "Rollout" section in the project and populates it with detailed tasks, ranging from "QA Sign off" to "Monitor 50% rollout," while assigning owners (Priya for technical steps, David for the announcement) and setting priority levels and deadlines. This is a notable demonstration of an LLM moving from ideation and analysis to direct task management and execution within core enterprise systems. The AI is no longer just providing answers; at the user's instruction, it is directly updating the project schedule.
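The shape of that task list can be pictured as plain data. The sketch below is an assumption-laden illustration: `RolloutTask`, `build_rollout_tasks`, and `group_by_owner` are hypothetical names, the "Public announcement" task title is invented for the example, and none of this reflects Asana's actual API or schema. Priorities and deadlines are omitted because the demo description does not state them.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RolloutTask:
    name: str
    owner: str
    section: str = "Rollout"  # section name taken from the demo


def build_rollout_tasks(technical_owner: str, announcement_owner: str) -> List[RolloutTask]:
    """Assemble the tasks named in the demo, plus one hypothetical announcement task."""
    return [
        RolloutTask("QA Sign off", technical_owner),
        RolloutTask("Monitor 50% rollout", technical_owner),
        # Task title below is an assumption; the demo only says David owns the announcement.
        RolloutTask("Public announcement", announcement_owner),
    ]


def group_by_owner(tasks: List[RolloutTask]) -> Dict[str, List[str]]:
    """Group task names by owner, preserving the original task order."""
    grouped: Dict[str, List[str]] = {}
    for task in tasks:
        grouped.setdefault(task.owner, []).append(task.name)
    return grouped


tasks = build_rollout_tasks("Priya", "David")
```

Grouping by owner shows the split described above: the technical steps land with Priya, and the announcement lands with David.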

The final stage of the demonstration involves communication synthesis. The user asks Claude to draft a quick summary for a meeting attendee, Conor, via Slack. Claude synthesizes the entire preceding conversation and actions—the validated readiness to ship, the positive engagement data, and the confirmed deployment schedule—into a concise, actionable message draft. The user makes a minor edit to the closing, changing "Let me know if you have any concerns" to the more urgent "Please flag anything before 9 am tomorrow!" before sending the message directly through the interface. This capability closes the loop, showing that Claude can manage the entire lifecycle of a project phase: planning, visualization, data validation, execution, and communication. The key takeaway for the insider audience is the strategic positioning: Anthropic is not just selling a model, but selling an enterprise orchestration layer that coordinates the actions of existing SaaS tools, fundamentally altering the user’s relationship with their digital workplace.