OpenAI has released gpt-oss, a suite of "state-of-the-art open-weight language models" that marks a notable shift in its strategy and gives the AI community powerful new tools. AI commentator Matt Wolfe detailed the release, highlighting that OpenAI has delivered on a long-anticipated promise and that the models perform impressively. The decision to release the actual model weights under a flexible license has immediate implications for startups, venture capitalists, and AI professionals navigating the rapidly evolving generative AI space.

The gpt-oss models come in two sizes: a 120-billion-parameter version and a more compact 20-billion-parameter version. Wolfe emphasized that these are "open-weight" models, meaning OpenAI is "actually releasing the weights to these models," rather than only publishing code or offering access through an API. That matters to developers who want full control over a model, including the ability to fine-tune it and deploy it on their own infrastructure or even on consumer hardware.

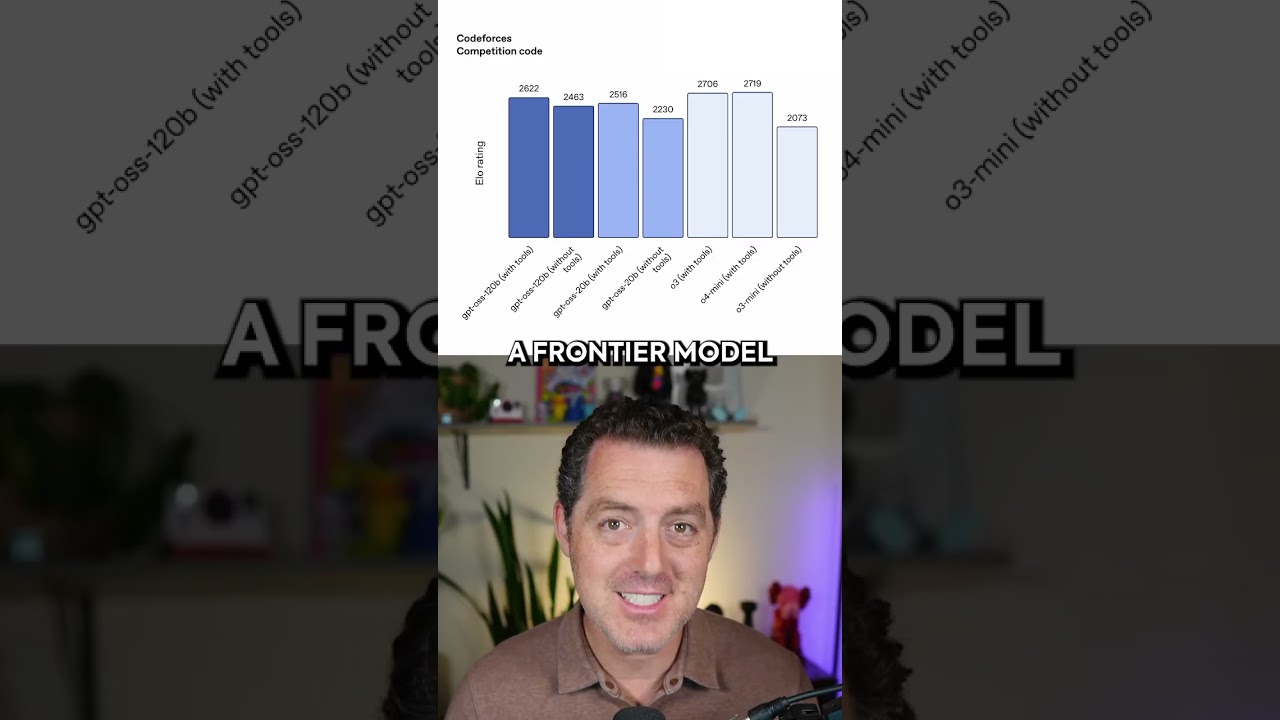

One of the most compelling aspects of gpt-oss is its performance relative to established frontier models. Results on Codeforces, a rigorous competitive-programming benchmark, illustrate this. The gpt-oss-120b model, when equipped with tools, achieved an Elo rating of 2622. Wolfe noted that this is "extremely comparable" to OpenAI's own "frontier model," o3, which scored 2706 with tools.

Even more striking is the performance of the smaller gpt-oss-20b model. This 20-billion-parameter version, also with tools, scored 2516, a result Wolfe described as "just as comparable given its size." Such strong performance from a smaller open-weight model suggests that high-level AI capabilities are becoming more accessible and less resource-intensive. The models are optimized for efficient deployment on consumer hardware, a key factor for startups and researchers with limited access to enterprise-grade compute.
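To put the three reported ratings side by side, the sketch below computes each model's point gap to o3 and the head-to-head expected score implied by the textbook Elo formula. It assumes the Codeforces numbers behave like standard Elo ratings on a 400-point logistic scale, which is an approximation rather than anything stated in the release; the helper names `expected_score` and `compare_to` are hypothetical and exist only for this illustration.

```python
# Illustrative only: treats the reported Codeforces ratings as standard Elo
# ratings on a 400-point logistic scale (an assumption, not from the release).

def expected_score(rating_a: float, rating_b: float) -> float:
    """Textbook Elo expected score of player A against player B."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


# Ratings as quoted in the article, all measured "with tools".
RATINGS = {"o3": 2706, "gpt-oss-120b": 2622, "gpt-oss-20b": 2516}


def compare_to(baseline: str, ratings: dict) -> dict:
    """Return {model: (point gap to baseline, expected score vs. baseline)}."""
    base = ratings[baseline]
    return {
        name: (base - rating, expected_score(rating, base))
        for name, rating in ratings.items()
        if name != baseline
    }


if __name__ == "__main__":
    for name, (gap, score) in compare_to("o3", RATINGS).items():
        print(f"{name}: {gap} points behind o3, expected score {score:.2f}")
```

An expected score of 0.5 would mean two equally rated competitors, so values below that indicate how often the open-weight model would be expected to come out ahead of o3 in a hypothetical head-to-head under this simplified model.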

This release signals a strategic pivot for OpenAI, which has traditionally been known for closed, API-driven models. By offering open-weight models under the permissive Apache 2.0 license, OpenAI is engaging directly with the growing open-source AI ecosystem, where Meta's Llama series and other initiatives have gained significant traction. The move can be read as a response to market demand for more transparent, customizable, and deployable AI, and as an attempt to broaden OpenAI's influence by establishing its models as a foundational layer for diverse applications.

The models were trained using advanced reinforcement learning techniques informed by OpenAI's most sophisticated internal models, with a particular focus on reasoning and tasks that require strong tool use. This positions gpt-oss as a powerful, cost-effective alternative for real-world applications, capable of beating "most humans on the entire planet at coding."