The idea of AI moving beyond conversational interfaces to act directly inside our digital workspaces has long been a subject of speculation. With the introduction of Claude for Chrome, that idea moves closer to reality: the browser becomes a surface on which an agent can execute complex, multi-step workflows. The development signals a shift in how we interact with software, from human-driven commands to AI-orchestrated operations.

The video, titled "Let Claude handle work in your browser," presents a compelling demonstration of Claude for Chrome, a new browser extension from Anthropic. It showcases the AI's ability to "see, click, type, and navigate web pages," executing intricate workflows across various web applications without explicit, step-by-step human intervention for each action. This represents a critical evolution from AI as a co-pilot to AI as an active, independent executor of tasks, fundamentally altering the calculus of digital productivity.

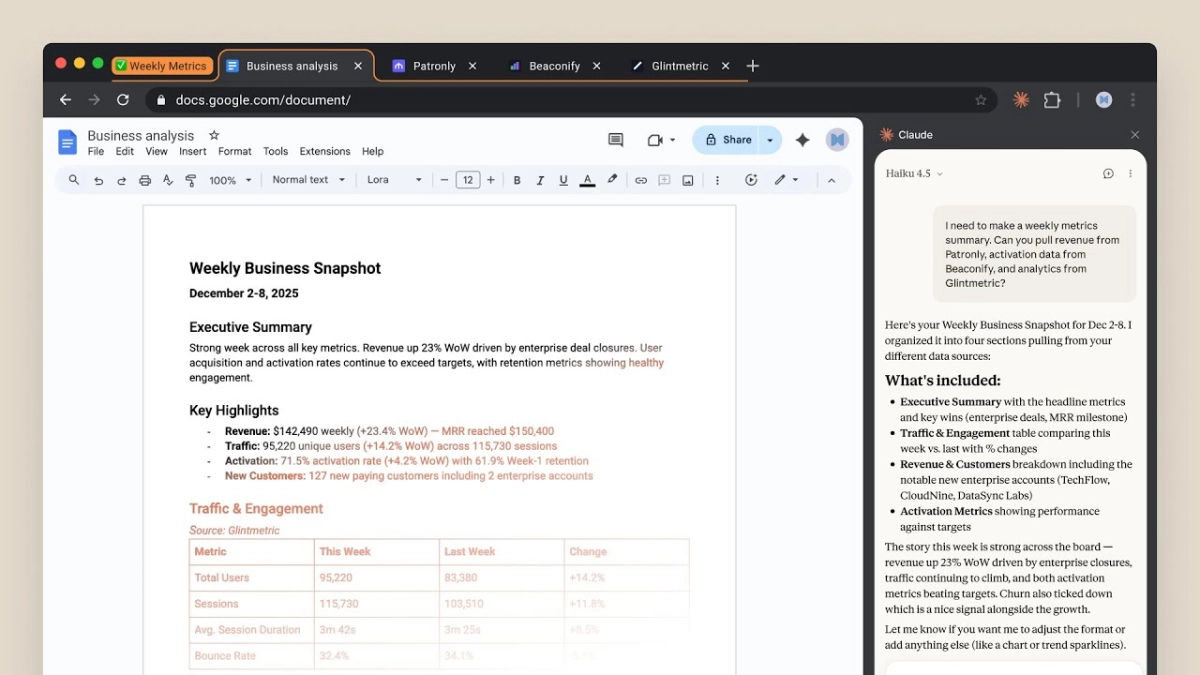

One of the primary demonstrations highlights Claude's capability in data aggregation and analysis. Faced with the prompt, "I need to make a weekly metrics summary. Can you pull revenue from Patronly, activation data from Beaconify, and analytics from Glintmetric?", Claude for Chrome springs into action. It autonomously opens multiple browser tabs, navigates to a specific dashboard within each application (Patronly's "Revenue Overview," Beaconify's "Subscriber Analytics," and Glintmetric's "Traffic Analytics"), and extracts the pertinent data. The system then synthesizes this information into a Google Doc, generating a "Weekly Business Snapshot" complete with an executive summary, key highlights, and detailed metrics, all formatted coherently.

This workflow underscores a core insight: the AI can take a high-level, abstract goal and decompose it into a sequence of actionable steps across disparate web environments. It is not merely parsing text but interpreting UI elements and data points from complex dashboards. Pulling data together from several SaaS platforms is often a laborious manual process, so automating it reduces the overhead of business intelligence and reporting, giving founders and VCs consolidated, up-to-date views with far less manual effort.
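To make the idea of decomposition concrete, here is a minimal sketch of how the weekly-summary request could be written out as an ordered plan. It is purely illustrative: the `Step` type, the action names, and `plan_weekly_summary` are hypothetical, and nothing here describes how Claude for Chrome actually plans or acts internally. Only the application, dashboard, and document names come from the demo.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One hypothetical unit of work in a browser workflow."""
    app: str     # web application the step targets
    action: str  # illustrative action name: "open", "navigate", "extract", "write"
    target: str  # tab, dashboard, or document the action applies to


def plan_weekly_summary(sources: dict[str, str], output_doc: str) -> list[Step]:
    """Expand a high-level goal into an ordered list of steps.

    For each source app: open a tab, go to its dashboard, extract the data.
    Finish with a single step that writes the combined report.
    """
    steps: list[Step] = []
    for app, dashboard in sources.items():
        steps.append(Step(app, "open", app))
        steps.append(Step(app, "navigate", dashboard))
        steps.append(Step(app, "extract", dashboard))
    steps.append(Step("Google Docs", "write", output_doc))
    return steps


# Application and dashboard names as shown in the demo.
SOURCES = {
    "Patronly": "Revenue Overview",
    "Beaconify": "Subscriber Analytics",
    "Glintmetric": "Traffic Analytics",
}

plan = plan_weekly_summary(SOURCES, "Weekly Business Snapshot")
for number, step in enumerate(plan, start=1):
    print(f"{number}. [{step.app}] {step.action}: {step.target}")
```

Even in this toy form, a one-sentence request expands into ten discrete steps across four applications, which is the manual overhead the demo is meant to remove.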

The second demonstration delves into iterative document editing and revision, showcasing Claude’s contextual understanding and adaptability. The user issues a command: "Hey! Can you go through the comments in this deck and make the changes? Resolve each one when done." What follows is a rapid-fire sequence of edits to a Google Slides presentation, addressing comments from multiple stakeholders. Claude meticulously adds authors to the title slide, changes the company name to lowercase to match a style guide, incorporates a customer quote and Forrester Research citation on a problem slide, and simplifies text while adding a competitive comparison table to a solution slide. It further updates Q2 numbers to Q3 actuals, integrates customer logos, adds team bios, and refines a Go-to-Market Strategy slide with an 18-month revenue projection.

The breadth of these changes, many of which require interpreting feedback rather than applying it literally, suggests an AI that goes beyond superficial text manipulation. In the demo, it handles edits that touch layout, data accuracy, and brand guidelines, and completes a comprehensive document overhaul quickly. For AI professionals, this highlights the model's reasoning and its ability to maintain context across a complex, multi-slide document. For founders, it means faster iteration cycles for critical presentations, leaving more time for strategic thought rather than tedious revisions.

Perhaps the most compelling demonstration shifts from the browser extension to Claude Code, showing its ability to bridge design concepts and live code implementation. Presented with the instruction, "update our homepage to match this design [Image #1]," along with an attached image, Claude operates within a terminal environment. The system first states, "I'll explore the current codebase first to understand what we're working with, then implement the new design," indicating a deliberate, analytical approach. It then modifies the `index.html` file, and the video shows the local homepage updating in the browser in real time to match the provided visual design.

This capability represents a significant leap in development workflows. It combines multimodal understanding (interpreting a visual design as input) with direct code generation and a live local preview of the result. The implications for product development cycles are significant: less friction between design and engineering, faster prototyping, and a way for non-technical stakeholders to directly influence front-end changes. This agentic approach to development could reshape how software is built, offering greater speed and responsiveness to market demands.

The overarching insight from Claude for Chrome's capabilities is the shift towards genuinely autonomous AI agents. This is not merely an incremental improvement in AI assistance; it redefines the human-computer interface, with the AI becoming an active participant in problem-solving and task execution within the browser environment. For startup ecosystem leaders, this technology suggests a future where operational bottlenecks are significantly alleviated, enabling leaner teams to achieve disproportionate output. For defense and AI analysts, it signals the emergence of highly capable digital assistants that can navigate and synthesize information from vast and varied web sources, potentially transforming intelligence gathering and analysis. This new paradigm of AI interaction promises substantial gains in productivity and innovation across many industries.