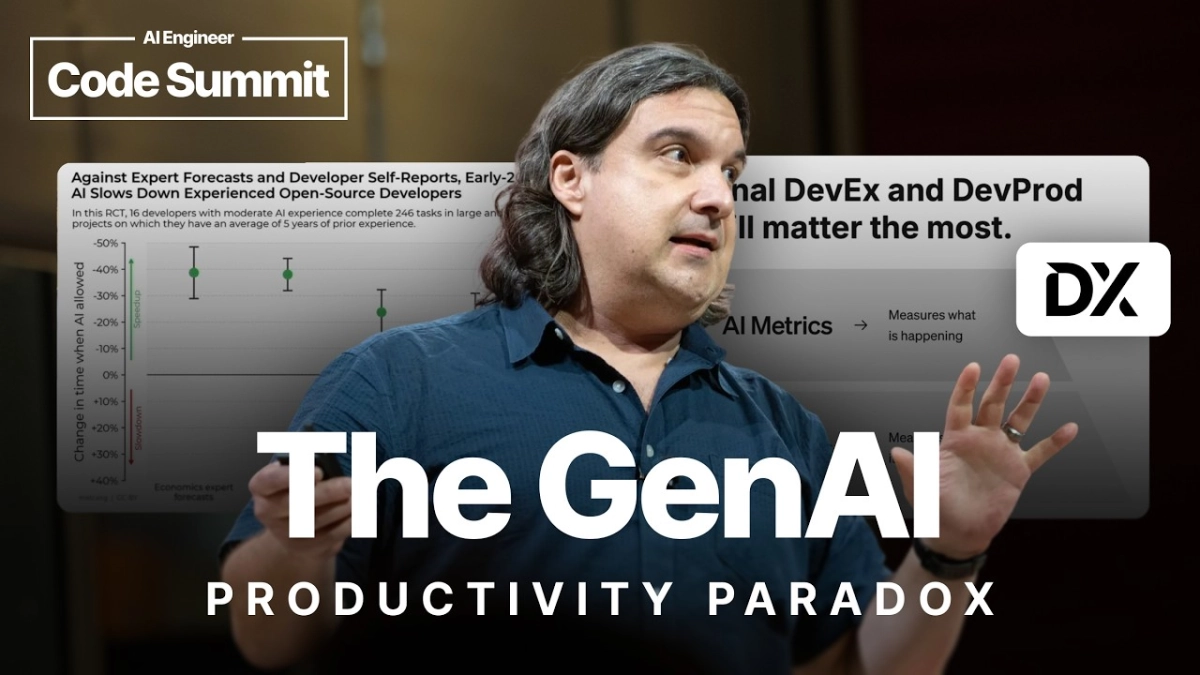

The pervasive narrative surrounding generative AI often paints a picture of universal productivity gains, a tide lifting all engineering boats. Yet, as Justin Reock, Deputy CTO at DX (acquired by Atlassian), argued at the AI Engineer Code Summit, this simplistic view obscures a far more complex reality. While some organizations use AI to make significant advances, many struggle with its adoption, and some experience detrimental effects.

Reock's presentation, "Leadership in AI Assisted Engineering," delivered to an audience of founders, VCs, and AI professionals, made the case that the true impact of AI is not evenly distributed. He argued that to realize meaningful returns on AI investments, leadership must take accountability for establishing best practices, enabling engineers, measuring impact accurately, and ensuring proper guardrails are in place.

The early hype suggested straightforward productivity boosts. Google, for instance, reported a 10% increase in engineer productivity. However, other studies, like the now "infamous METR study," showed a 19% decrease in productivity for experienced open-source developers using code assistance, even as they felt more productive. This disconnect between perception and reality is critical. Reock underscored it with DX's own aggregated data, which, when averaged, showed modest gains in change confidence and code maintainability, and a slight reduction in change failure rates.

However, "industry averages hide the big picture of quality impact." When DX broke down the same metrics by individual company, the picture changed dramatically. Some companies saw a 20% increase in change confidence, while others experienced a 20% decrease. Code maintainability and change failure rates swung just as widely, with some organizations shipping "as much as 50% more defects" than before AI adoption. With variability this large, leaders need to look past the averages at what is happening inside their own organization.
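The way an average can mask this kind of spread is easy to reproduce with made-up numbers. The sketch below uses hypothetical per-company changes in change confidence (the company names and values are invented for illustration and are not DX's data) to show a small positive mean sitting on top of swings of 20 points in either direction.

```python
from statistics import mean, pstdev

# Hypothetical per-company change in change confidence, in percentage points.
# These values are illustrative only; they are not taken from DX's dataset.
change_confidence_delta = {
    "company_a": 20.0,
    "company_b": -20.0,
    "company_c": 8.0,
    "company_d": -5.0,
    "company_e": 7.0,
}


def summarize(deltas):
    """Return the average alongside the spread that the average hides."""
    values = list(deltas.values())
    return {
        "mean": mean(values),
        "min": min(values),
        "max": max(values),
        "spread": max(values) - min(values),
        "stdev": pstdev(values),
    }


summary = summarize(change_confidence_delta)
for key, value in summary.items():
    print(f"{key}: {value:.1f}")
```

With these invented inputs the mean is a modest +2 points, while the gap between the best and worst company is 40 points. Reporting the spread next to the mean is one simple way to keep the average from telling the whole story.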

A core reason for this uneven distribution lies in human factors, particularly psychological safety. Reock referenced Google's seminal "Project Aristotle," a 2012 study that aimed to identify characteristics of high-performing teams. Google initially assumed the recipe involved a combination of high performers, experienced managers, and unlimited resources. "They were wrong. The high performing teams were the ones with greatest psychological safety." This insight is paramount for AI adoption. Leaders must proactively address the fear of AI, framing it as a "force multiplier for performance" that augments capabilities rather than one that replaces jobs. As Reock succinctly put it, "AI's not coming for your job, but somebody really good at AI might take your job."

Effective AI leadership also necessitates a holistic approach to integration. For most organizations, the bottleneck in the SDLC has never been the act of writing code itself. Focusing AI solely on code generation may yield some productivity gains, but it often overlooks larger inefficiencies elsewhere in the lifecycle. As Eli Goldratt famously stated, "An hour saved on something that isn't the bottleneck is worthless." Reock emphasized that the biggest payoffs go to companies that integrate AI across the entire SDLC, including code reviews, incident management, refactoring, and documentation.
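The bottleneck argument can be made concrete with some back-of-the-envelope arithmetic. The sketch below borrows the Amdahl's law formula as a framing (this is an assumption for illustration, not something Reock presented), and the stage shares and speedup factors are hypothetical.

```python
def overall_speedup(stage_share: float, stage_speedup: float) -> float:
    """Amdahl-style estimate of end-to-end speedup.

    stage_share:   fraction of total cycle time spent in the improved stage (0..1)
    stage_speedup: factor by which that stage alone gets faster (2.0 = twice as fast)
    """
    if not 0.0 <= stage_share <= 1.0:
        raise ValueError("stage_share must be between 0 and 1")
    if stage_speedup <= 0.0:
        raise ValueError("stage_speedup must be positive")
    # Time left after the improvement, as a fraction of the original cycle time.
    new_time = (1.0 - stage_share) + stage_share / stage_speedup
    return 1.0 / new_time


# Hypothetical scenario 1: writing code is 20% of cycle time and AI doubles its speed.
coding_only = overall_speedup(stage_share=0.2, stage_speedup=2.0)

# Hypothetical scenario 2: review and waiting are 50% of cycle time and AI doubles their speed.
review_and_wait = overall_speedup(stage_share=0.5, stage_speedup=2.0)

print(f"coding only:     {coding_only:.2f}x")
print(f"review and wait: {review_and_wait:.2f}x")
```

Under these assumed numbers, doubling the speed of coding yields roughly a 1.11x end-to-end improvement, while the same doubling applied to a stage that consumes half the cycle time yields roughly 1.33x. The real shares will differ per organization, which is why measuring where time actually goes comes before deciding where to apply AI.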

Measuring the true impact of AI requires a robust framework. Reock highlighted three types of metrics: telemetry (what's happening with the tech), experience sampling (identifying best use cases and quantifying ROI), and self-reported data (measuring adoption and satisfaction). DX's AI Measurement Framework normalizes these into three dimensions: Utilization, Impact, and Cost. Transparency is key here; organizations must have "open metrics discussions" and clearly communicate what's being tracked and why, fostering trust rather than "black box" surveillance.
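One way to keep such a framework transparent is to write the tracked metrics down as data, each with its source, its dimension, and the reason it is collected. The sketch below is a hypothetical illustration: the metric names, the stated purposes, and the pairing of each metric with a source and dimension are assumptions, not the definitions used in DX's AI Measurement Framework.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    TELEMETRY = "telemetry"
    EXPERIENCE_SAMPLING = "experience_sampling"
    SELF_REPORTED = "self_reported"


class Dimension(Enum):
    UTILIZATION = "utilization"
    IMPACT = "impact"
    COST = "cost"


@dataclass(frozen=True)
class Metric:
    name: str
    source: Source
    dimension: Dimension
    purpose: str  # Published to engineers so tracking is not a black box.


# Hypothetical catalog; names and purposes are invented for illustration.
METRICS = [
    Metric("weekly_active_ai_users", Source.TELEMETRY, Dimension.UTILIZATION,
           "See how widely the tools are actually used."),
    Metric("time_saved_per_task", Source.EXPERIENCE_SAMPLING, Dimension.IMPACT,
           "Find the use cases where AI helps most."),
    Metric("developer_satisfaction", Source.SELF_REPORTED, Dimension.IMPACT,
           "Check whether engineers find the tools worthwhile."),
    Metric("license_spend_per_engineer", Source.TELEMETRY, Dimension.COST,
           "Relate spend to the observed impact."),
]


def group_by_dimension(metrics):
    """Group metric names under the dimension they roll up to."""
    grouped = defaultdict(list)
    for metric in metrics:
        grouped[metric.dimension].append(metric.name)
    return dict(grouped)


for dimension, names in group_by_dimension(METRICS).items():
    print(f"{dimension.value}: {', '.join(names)}")
```

Keeping the `purpose` field next to each metric makes it straightforward to publish the full list for the kind of open metrics discussion described above.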

To unblock usage and foster a culture of trust, leaders should consider self-hosted and private models that keep sensitive data within their trust boundaries. Partnering with compliance teams early lets them co-design workflows that meet regulatory obligations without stifling innovation. Thinking creatively about barriers also helps: synthetic datasets or anonymization, for example, can get around privacy blockers.

Ultimately, AI adoption must be tied to employee success. Providing both education and adequate time for engineers to learn and experiment with new techniques is crucial. Developers who embrace and leverage AI will outperform those who resist. This proactive investment in skill development ensures that engineers view AI as an opportunity to enhance their careers, not a threat.

Reock's insights underscore that successful leadership in AI-assisted engineering transcends simply deploying cutting-edge technology. It demands a strategic, human-centric approach that prioritizes psychological safety, transparent measurement, and thoughtful integration across the entire development lifecycle. By focusing on these foundational elements, organizations can navigate the complexities of AI adoption, transforming potential volatility into sustained, meaningful impact.