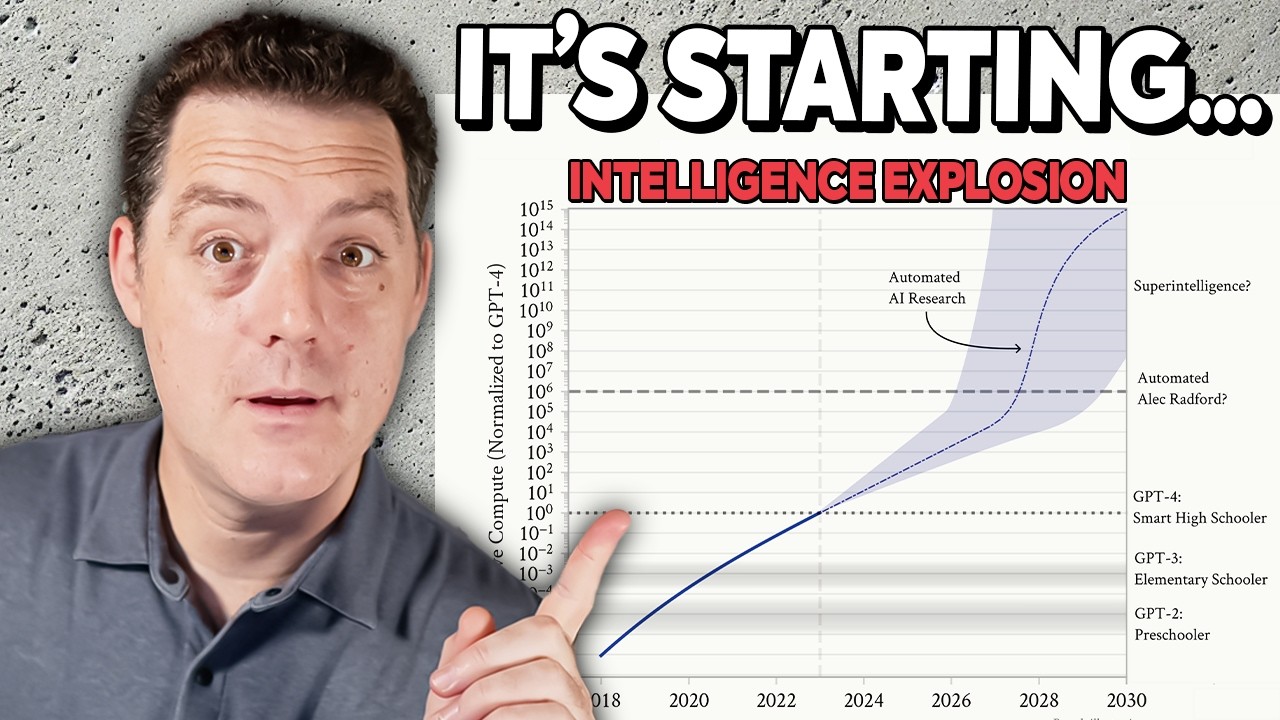

In his latest video, Matthew Berman described a pivotal shift in artificial intelligence, calling it the "AlphaGo moment for model architecture discovery." He outlined a future in which AI systems generate and refine their own designs autonomously, no longer bounded by the pace of human research. The development he discussed, centered on the ASI-ARCH paper, could change both the pace and the scope of AI progress.

Berman’s commentary unpacked the significance of the ASI-ARCH framework, a system presented in a recent scientific paper. The core premise, as he explained, is to overcome the current bottleneck in AI innovation: human cognitive capacity. Historically, advancements in AI, such as OpenAI's models gaining "thinking capabilities" or the invention of the Transformer architecture, originated from human ingenuity. The argument is that research driven by people can only advance as fast as those people can think and experiment, so progress scales roughly linearly with human effort.

The inspiration for this paradigm shift draws directly from DeepMind's AlphaGo, the AI that famously defeated the world's best Go players. Crucially, AlphaGo Zero, a later iteration, achieved superhuman performance by learning entirely through self-play, without any human strategic input. As Berman emphasized, "When AI is able to learn on its own, without human input, it is able to discover things that humans couldn't, or at least haven't." This ability to self-iterate and uncover novel solutions, unburdened by conventional human thought, is the cornerstone of the ASI-ARCH system.

ASI-ARCH operates as a closed evolutionary loop, mimicking a scientific research process. It features a "Researcher" module that proposes new neural network architectures based on historical data and human literature. An "Engineer" then implements these ideas into executable code, trains the models in a real environment, and automatically corrects any errors. This self-healing capability ensures that promising novel approaches aren't discarded due to coding bugs.

Finally, an "Analyst" module evaluates the training and performance results, identifying insights and learning from successes and failures. This knowledge is then fed back into the "Cognition Base" for the Researcher, completing the loop and enabling continuous, autonomous improvement. Berman highlighted that "the only limitation is the amount of compute given to it." This marks a significant transition where the constraint shifts from human intellect to computational resources.
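The loop described above can be illustrated with a toy sketch. This is not the ASI-ARCH implementation: every name here (`CognitionBase`, `researcher`, `engineer`, `analyst`, `run_loop`) is hypothetical, the "training" step is a random number, and the simulated bug rate is an arbitrary assumption. It only shows the control flow: propose, implement with retries, analyze, and feed the result back.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Experiment:
    design: str
    score: float
    insight: str


@dataclass
class CognitionBase:
    # Accumulated notes the Researcher can draw on (hypothetical structure).
    notes: List[str] = field(default_factory=list)


def researcher(cognition: CognitionBase, history: List[Experiment]) -> str:
    """Propose a new design, building on the best-scoring earlier one if any."""
    parent = max(history, key=lambda e: e.score).design if history else "baseline"
    return f"{parent}+idea{len(cognition.notes) + 1}"


def engineer(design: str, rng: random.Random, max_attempts: int = 3) -> Optional[float]:
    """Stand-in for implement-and-train: retry on simulated bugs, return a score."""
    for _ in range(max_attempts):
        if rng.random() < 0.3:  # assumed bug rate, purely illustrative
            continue  # a real system would patch the code here, then retry
        return rng.random()  # placeholder for a real evaluation metric
    return None  # gave up after max_attempts


def analyst(design: str, score: float) -> str:
    """Turn a result into a note for the cognition base."""
    return f"{design} scored {score:.3f}"


def run_loop(steps: int, seed: int = 0) -> Tuple[List[Experiment], CognitionBase]:
    rng = random.Random(seed)
    cognition = CognitionBase()
    history: List[Experiment] = []
    for _ in range(steps):
        design = researcher(cognition, history)
        score = engineer(design, rng)
        if score is None:
            cognition.notes.append(f"{design} failed after retries")
            continue
        insight = analyst(design, score)
        cognition.notes.append(insight)  # feedback closes the loop
        history.append(Experiment(design, score, insight))
    return history, cognition


history, cognition = run_loop(steps=10)
if history:
    best = max(history, key=lambda e: e.score)
    print(best.insight)
```

In this sketch the only thing that limits how many designs get tried is `steps`, which plays the role of the compute budget Berman refers to.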

The paper reports concrete results. ASI-ARCH conducted 1,773 autonomous experiments, consuming over 20,000 GPU hours. From these, 106 linear attention architectures achieved state-of-the-art results, surpassing previous public models. This suggests the system is capable of genuine architectural discovery rather than only reproducing known designs.
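For a sense of scale, the reported figures can be turned into two rough ratios. The snippet below is back-of-the-envelope arithmetic only; it assumes the GPU hours were spread evenly across experiments, which is a simplification, and it treats "over 20,000" as exactly 20,000, so the per-experiment figure is a lower bound.

```python
experiments = 1773
gpu_hours = 20_000        # reported as "over 20,000", so treat as a lower bound
sota_architectures = 106

hit_rate = sota_architectures / experiments
hours_per_experiment = gpu_hours / experiments
hours_per_sota = gpu_hours / sota_architectures

print(f"share of experiments reaching SOTA: {hit_rate:.1%}")
print(f"average GPU hours per experiment:   {hours_per_experiment:.1f}")
print(f"average GPU hours per SOTA result:  {hours_per_sota:.1f}")
```

Roughly 6% of the experiments produced a state-of-the-art architecture, at an average of about 11 GPU hours per experiment.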

The video also included a brief sponsorship segment for Box AI, illustrating how businesses can leverage advanced models from providers like OpenAI and Anthropic to streamline document management and extract insights without needing to build complex RAG architectures internally. Box AI aims to simplify enterprise-level integration of cutting-edge AI, offering secure and compliant solutions for thousands of organizations.

The implications of self-improving AI systems like ASI-ARCH are vast. If the human bottleneck can be removed, the pace of scientific discovery in AI, and potentially in other fields like biology and medicine, could accelerate sharply. Berman's view is that such systems may reach breakthroughs that human researchers, with their cognitive biases and limitations, might never conceive.