H Company has unveiled Holo2, a new family of large-scale Vision-Language Models (VLMs) engineered to power multi-domain GUI agents. These agents are designed to interpret, reason over, and act within real digital environments, including web, desktop, and mobile interfaces. Moving beyond static perception, Holo2 emphasizes navigation and multi-step task execution, building on the UI localization and screen understanding capabilities of its predecessor, Holo1.5. H Company reports significant advances in policy learning, action grounding, and cross-environment generalization.

Holo2 Models and Capabilities

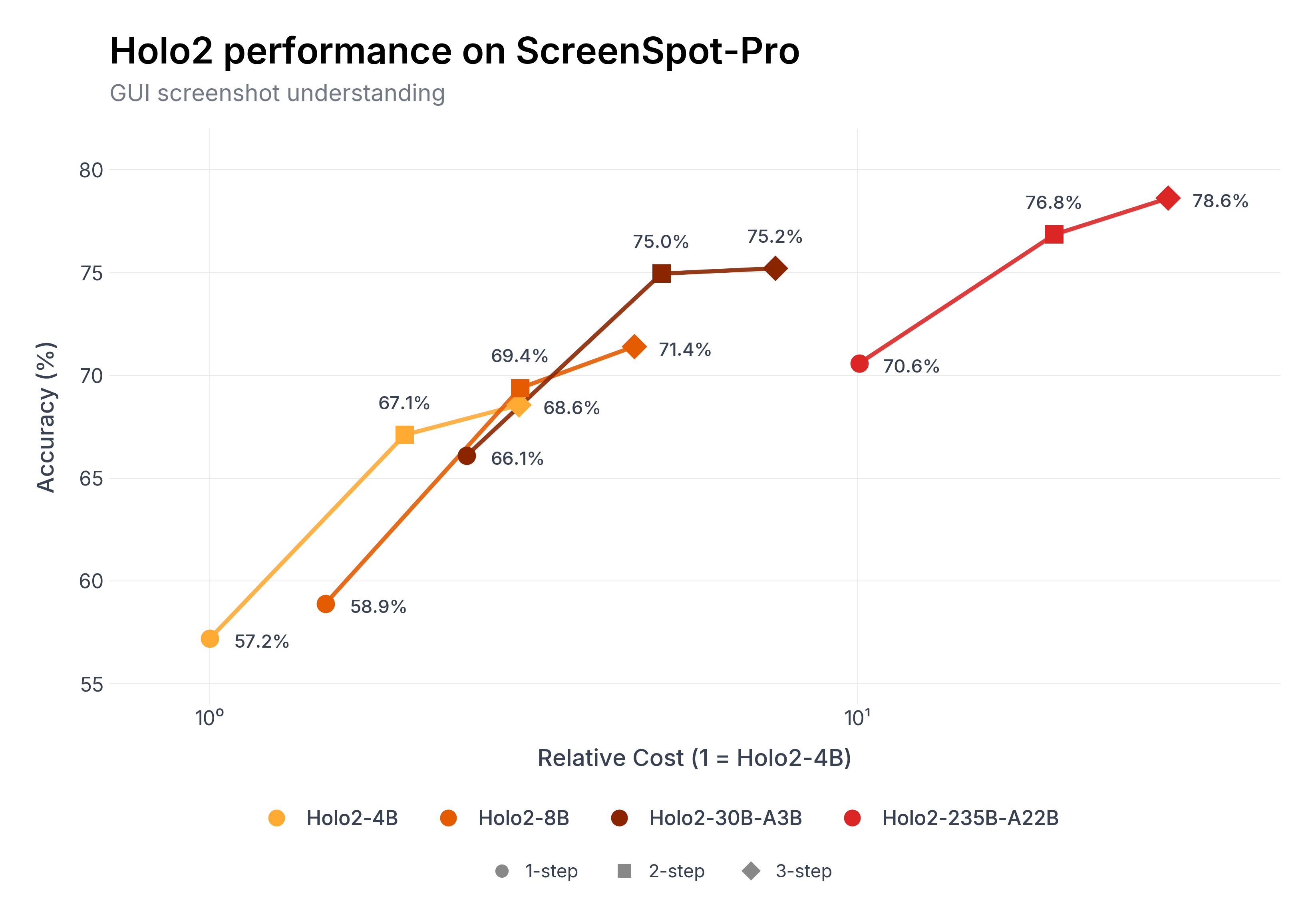

The Holo2 series comprises four model sizes. Holo2-4B and Holo2-8B are fully open-sourced under the Apache 2.0 license, while Holo2-30B-A3B and Holo2-235B-A22B are released under a research-only license; commercial use of the two larger models requires contacting H Company directly. H Company positions the models as reliable and efficient foundations for next-generation computer-use agents such as its Surfer-H agent. The models are fine-tuned from the Qwen3-VL family; Holo2-235B-A22B, for example, is fine-tuned from Qwen/Qwen3-VL-235B-A22B-Thinking. Training follows a multi-stage pipeline that draws on proprietary data for UI understanding and action prediction together with open-source datasets, synthetic data, and human annotations, and combines supervised fine-tuning with online reinforcement learning using GRPO (Group Relative Policy Optimization) to reach state-of-the-art performance.
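GRPO, introduced with DeepSeekMath, replaces a learned value function with group-relative advantages: for each prompt, several responses are sampled, and each response's reward is normalized by the mean and standard deviation of its group. The minimal sketch below shows only that advantage computation for a GUI grounding task. The binary "click lands inside the target box" reward, the `BBox` format, and all function names are hypothetical assumptions for illustration; the source does not describe H Company's reward design or training code.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class BBox:
    """Hypothetical target-element box in pixel coordinates (x0, y0)-(x1, y1)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def click_reward(point: tuple[float, float], target: BBox) -> float:
    """Assumed binary reward: 1.0 if the predicted click falls inside the target box."""
    return 1.0 if target.contains(*point) else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: (r - group mean) / (group std + eps)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std over the sampled group
    return [(r - mu) / (sigma + eps) for r in rewards]


# One prompt (screenshot + instruction) with G = 4 sampled click predictions.
target = BBox(100, 200, 140, 220)
samples = [(120, 210), (90, 205), (130, 215), (300, 50)]
rewards = [click_reward(p, target) for p in samples]
advantages = group_relative_advantages(rewards)

if __name__ == "__main__":
    print(rewards)
    print(advantages)
```

Because rewards are normalized within the group, a sample is reinforced only for doing better than its siblings; if every sample in a group earns the same reward, all advantages are zero and that group contributes no policy-gradient signal.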

A key innovation in Holo2 is "agentic localization," which targets the difficulty of pinpointing small UI elements in high-resolution (4K) interfaces. Rather than committing to a single prediction, the model refines its localization iteratively, yielding relative accuracy gains of 10-20% across all Holo2 sizes. On the demanding ScreenSpot-Pro benchmark, Holo2-235B-A22B reaches 70.6% accuracy in a single step and 78.5% within three steps (a relative gain of about 11%), setting a new state of the art for GUI grounding. More broadly, the Holo2 models outperform previous models across a range of UI localization benchmarks.
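The source does not say how agentic localization works internally. One common way to realize iterative refinement is crop-and-zoom: predict a point, crop a smaller window around it, and predict again so the same model effectively sees the region at higher resolution. The sketch below assumes that approach. `refine_click`, the `(left, top, width, height)` crop format, and the toy `make_coarse_predictor` (a stand-in for a real VLM call that can only resolve a 10x10 grid of each crop) are all hypothetical.

```python
from __future__ import annotations

import math
from typing import Callable

# A crop is (left, top, width, height) in full-screen pixel coordinates.
Crop = tuple[float, float, float, float]
# Hypothetical model interface: given a crop, return the predicted point in
# that crop's normalized [0, 1] coordinates. A real VLM call would go here.
PredictFn = Callable[[Crop], tuple[float, float]]


def refine_click(predict: PredictFn, screen_w: float, screen_h: float,
                 steps: int = 3, zoom: float = 0.5) -> tuple[float, float]:
    """Iteratively re-localize a target by zooming into the previous guess."""
    crop: Crop = (0.0, 0.0, screen_w, screen_h)
    x = y = 0.0
    for _ in range(steps):
        left, top, w, h = crop
        nx, ny = predict(crop)
        # Map crop-local normalized coordinates back to full-screen pixels.
        x, y = left + nx * w, top + ny * h
        # Next crop: a smaller window centred on the guess, clamped to the screen.
        new_w, new_h = w * zoom, h * zoom
        new_left = min(max(x - new_w / 2, 0.0), screen_w - new_w)
        new_top = min(max(y - new_h / 2, 0.0), screen_h - new_h)
        crop = (new_left, new_top, new_w, new_h)
    return x, y


def make_coarse_predictor(target: tuple[float, float], grid: int = 10) -> PredictFn:
    """Toy predictor: it knows where the target is but snaps to the centre of a
    grid x grid cell of the current crop, so its pixel error shrinks with the crop."""
    tx, ty = target

    def snap(rel: float) -> float:
        cell = min(max(math.floor(rel * grid), 0), grid - 1)
        return (cell + 0.5) / grid

    def predict(crop: Crop) -> tuple[float, float]:
        left, top, w, h = crop
        return snap((tx - left) / w), snap((ty - top) / h)

    return predict


if __name__ == "__main__":
    target = (3000.0, 1500.0)  # hypothetical small element on a 3840x2160 screen
    predict = make_coarse_predictor(target)
    for steps in (1, 2, 3):
        x, y = refine_click(predict, 3840, 2160, steps=steps)
        print(steps, round(math.hypot(x - target[0], y - target[1]), 1))
```

With this toy predictor, the three-step estimate lands much closer to the target than the single-step one, because each step halves the window and the fixed grid then resolves a finer region. How much a real model gains depends on how its localization error scales with on-screen element size.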