Perplexity just kicked down one of the biggest doors in artificial intelligence. The AI search company announced today that it has developed and open-sourced a method for running massive, trillion-parameter models on standard Amazon Web Services (AWS) cloud infrastructure, a feat that was previously considered impractical.

This breakthrough effectively dismantles a critical barrier that has kept the most powerful AI models locked away inside elite research labs with bespoke, high-end hardware.

For years, a frustrating paradox has governed the cutting edge of AI. The most capable models, such as Mixture-of-Experts (MoE) architectures with a trillion or more parameters, are so enormous that they need the coordinated power of multiple computers just to run. Solutions to this multi-node problem did emerge, but they were almost exclusively tailored to specialized, expensive networking hardware like NVIDIA’s ConnectX-7 with InfiniBand.

This created a two-tiered AI world. If you had access to a custom-built supercomputer, you could run the giants. If you were like most researchers, startups, or even large companies relying on the general-purpose infrastructure of a cloud provider such as AWS, with its Elastic Fabric Adapter (EFA) networking, you were out of luck. The techniques simply didn't translate, making cloud deployments prohibitively slow and costly. As Perplexity noted in its announcement, this lack of cloud portability has been a major bottleneck for the entire industry.

Bridging the Cloud Divide

Perplexity’s solution, detailed in a new research paper, is a set of highly optimized software components called kernels. These kernels handle the complex task of routing data between GPUs across multiple machines: sending each token to the GPUs that host the experts selected for it, then gathering the results back. This exchange is central to making MoE models work. According to the company, its kernels are the first to achieve “viable performance” for this task on AWS EFA.
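To make the routing problem concrete, the sketch below shows, in plain Python, what the dispatch and combine steps of expert-parallel MoE inference compute. It is a toy, single-process illustration and not Perplexity’s kernel code: the function names `plan_dispatch` and `combine`, the contiguous expert-to-rank layout, and the scalar stand-ins for activation vectors are all assumptions made for this example. Real kernels move activation tensors between GPUs over NVLink and the network rather than building Python dictionaries.

```python
from collections import defaultdict


def plan_dispatch(topk_experts, experts_per_rank):
    """Group (token, expert) pairs by the rank (GPU) that hosts each expert.

    topk_experts[t] lists the expert ids the router picked for token t.
    Assumed layout: rank r hosts experts r*experts_per_rank .. (r+1)*experts_per_rank - 1.
    """
    sends = defaultdict(list)  # destination rank -> [(token, expert), ...]
    for token, experts in enumerate(topk_experts):
        for expert in experts:
            sends[expert // experts_per_rank].append((token, expert))
    return dict(sends)


def combine(expert_outputs, gate_weights, num_tokens):
    """Gather expert results back to their tokens as a gate-weighted sum.

    expert_outputs and gate_weights are keyed by (token, expert); outputs are
    scalars here to stand in for activation vectors.
    """
    out = [0.0] * num_tokens
    for (token, expert), y in expert_outputs.items():
        out[token] += gate_weights[(token, expert)] * y
    return out


if __name__ == "__main__":
    # 4 tokens, top-2 routing, 8 experts spread over 4 ranks (2 experts per rank).
    plan = plan_dispatch([[0, 5], [2, 3], [7, 1], [4, 6]], experts_per_rank=2)
    print(plan[1])  # [(1, 2), (1, 3)]: both of token 1's experts live on rank 1
    # Pretend each expert e just returns float(e), and every router weight is 0.5.
    outputs = {pair: float(pair[1]) for pairs in plan.values() for pair in pairs}
    gates = {pair: 0.5 for pair in outputs}
    print(combine(outputs, gates, num_tokens=4))  # [2.5, 2.5, 4.0, 5.0]
```

Each entry in the dispatch plan is data that must travel from a token’s GPU to another GPU, possibly on another machine; that cross-machine traffic is what the kernels have to make fast on EFA.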

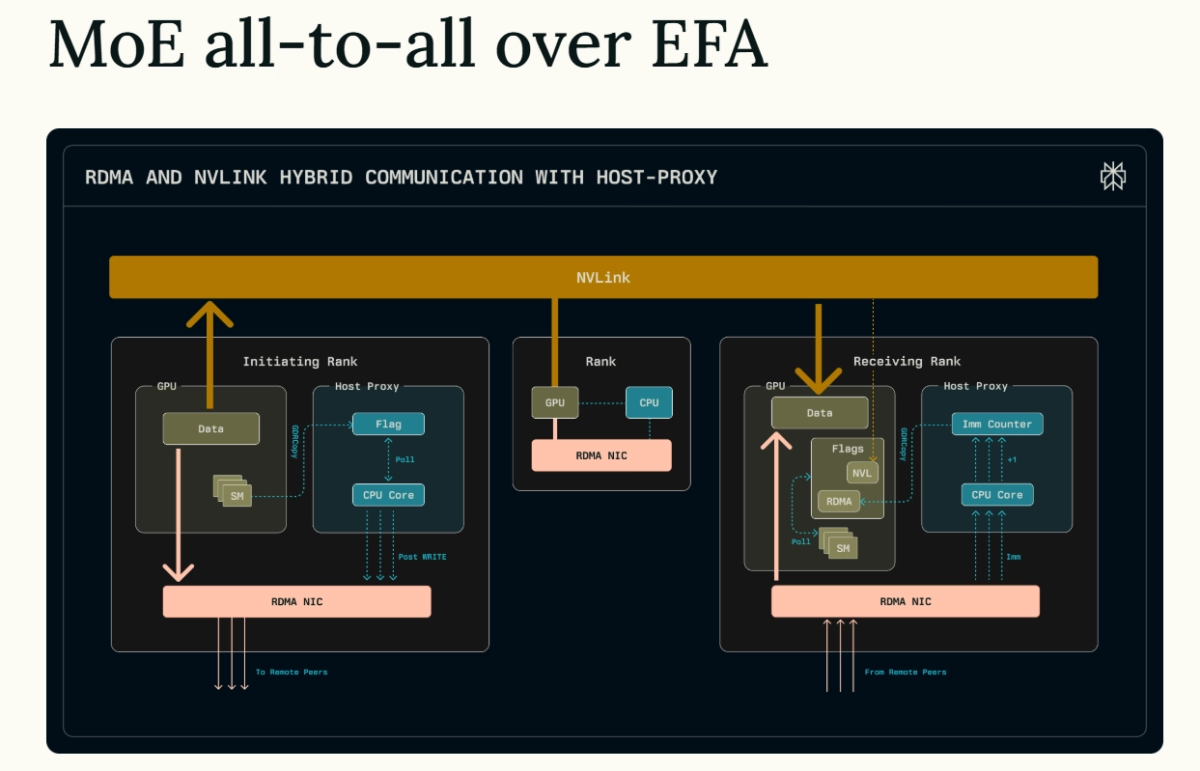

The technical approach is a clever hybrid CPU-GPU architecture. It works around specific limitations of EFA that stymied previous efforts, which typically required a direct communication path between the GPU and the network card. Perplexity’s system instead uses a host CPU thread as a proxy to manage network transfers, and pairs it with a specialized “TransferEngine” that batches operations and minimizes the overhead that plagued earlier attempts.
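The proxy idea can be illustrated with a minimal sketch, assuming a simplified model in which “the GPU” is just another thread submitting transfer requests to a queue. `TransferRequest`, `ProxyTransferThread`, and the `post_batch` callback are hypothetical names, not the TransferEngine API, and nothing here touches a real network card. The sketch only shows the general pattern: a dedicated host thread drains pending requests and hands them to the network layer in batches, so fixed per-operation costs are paid once per batch rather than once per request.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class TransferRequest:
    """One write the GPU side wants performed (hypothetical fields)."""
    dest_rank: int
    offset: int
    nbytes: int


_STOP = object()  # sentinel telling the proxy thread to exit


class ProxyTransferThread:
    """Hypothetical CPU proxy that drains requests and posts them in batches.

    In a real system, requests would come from GPU kernels and post_batch
    would hand work to the NIC; both are stand-ins here.
    """

    def __init__(self, post_batch, max_batch=8):
        self.requests = queue.Queue()
        self.post_batch = post_batch
        self.max_batch = max_batch
        self.thread = threading.Thread(target=self._run, daemon=True)
        self.thread.start()

    def submit(self, req):
        self.requests.put(req)

    def close(self):
        # Requests submitted before close() are still posted (FIFO queue).
        self.requests.put(_STOP)
        self.thread.join()

    def _run(self):
        while True:
            first = self.requests.get()  # block until there is work
            if first is _STOP:
                return
            batch, stop = [first], False
            # Grab whatever is already queued, up to max_batch, without waiting.
            while len(batch) < self.max_batch:
                try:
                    nxt = self.requests.get_nowait()
                except queue.Empty:
                    break
                if nxt is _STOP:
                    stop = True
                    break
                batch.append(nxt)
            self.post_batch(batch)  # one "post" covers many small requests
            if stop:
                return


if __name__ == "__main__":
    batches = []
    proxy = ProxyTransferThread(batches.append, max_batch=4)
    for i in range(10):
        proxy.submit(TransferRequest(dest_rank=i % 2, offset=i * 64, nbytes=64))
    proxy.close()
    # Batch sizes depend on thread timing; all 10 requests arrive, in order.
    print([len(b) for b in batches])
```

Capping `max_batch` bounds the size of any single post, while taking only what is already queued avoids adding latency by waiting for a batch to fill.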

Perplexity’s own benchmarks back this up. On high-end NVIDIA ConnectX-7 hardware, the kernels perform competitively with, and in some cases outperform, state-of-the-art methods like DeepEP. But the real victory is on AWS, where the company demonstrated that its kernels enable the deployment of models like the 671-billion-parameter DeepSeek-V3 and the colossal 1-trillion-parameter Kimi-K2 on standard AWS p5en instances equipped with H200 GPUs.

Running Kimi-K2 is a particularly significant proof point. Perplexity notes the model is physically too large to fit on a single 8x H200 node, making an efficient multi-node solution not just a nice-to-have, but an absolute necessity. Before this, serving a model of that scale on AWS at a practical speed was a pipe dream.
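A rough back-of-the-envelope check shows why a multi-node setup is needed. The sketch assumes 141 GB of HBM3e per H200, eight GPUs per node, exactly 1 trillion parameters for Kimi-K2, and a uniform 1 or 2 bytes per parameter; real checkpoints mix precisions, and serving also needs memory for the KV cache, activations, and runtime overhead. `headroom_gb` is a hypothetical helper written for this illustration.

```python
H200_HBM_GB = 141  # HBM3e capacity of one NVIDIA H200, in GB
GPUS_PER_NODE = 8  # an 8x H200 node, such as a p5en instance


def headroom_gb(num_params, bytes_per_param, gpus=GPUS_PER_NODE, hbm_gb=H200_HBM_GB):
    """HBM (GB) left on one node after loading the weights; negative means they do not fit.

    Counts weights only: KV cache, activations, and runtime overhead are ignored.
    """
    node_gb = gpus * hbm_gb
    weights_gb = num_params * bytes_per_param / 1e9  # decimal GB
    return node_gb - weights_gb


if __name__ == "__main__":
    # Parameter counts as stated in the article; the precisions are assumptions.
    for name, params in [("DeepSeek-V3", 671e9), ("Kimi-K2", 1e12)]:
        for dtype, nbytes in [("BF16", 2), ("FP8", 1)]:
            print(f"{name} {dtype}: {headroom_gb(params, nbytes):+.0f} GB headroom per node")
```

Under these assumptions a node offers 8 × 141 = 1,128 GB. At two bytes per parameter, Kimi-K2’s weights (about 2,000 GB) would not fit at all; even at one byte per parameter they would take roughly 1,000 GB, nearly 90% of the node, leaving about 128 GB (16 GB per GPU) for everything else a serving system needs.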

By open-sourcing these kernels, Perplexity is essentially handing the broader AI community a key to the kingdom. Researchers and developers no longer need access to a multi-million-dollar, custom-built cluster to experiment with and build on the world’s most advanced AI architectures; they can use the scale and accessibility of AWS instead. This could dramatically accelerate innovation by letting far more teams build products on top of the largest open-weight models.

Perplexity says it is working directly with AWS to push performance even further, promising future optimizations that will continue to reduce latency.

As of today, the wall between elite research hardware and the public cloud has been seriously breached. The era of trillion-parameter models on AWS has officially begun.