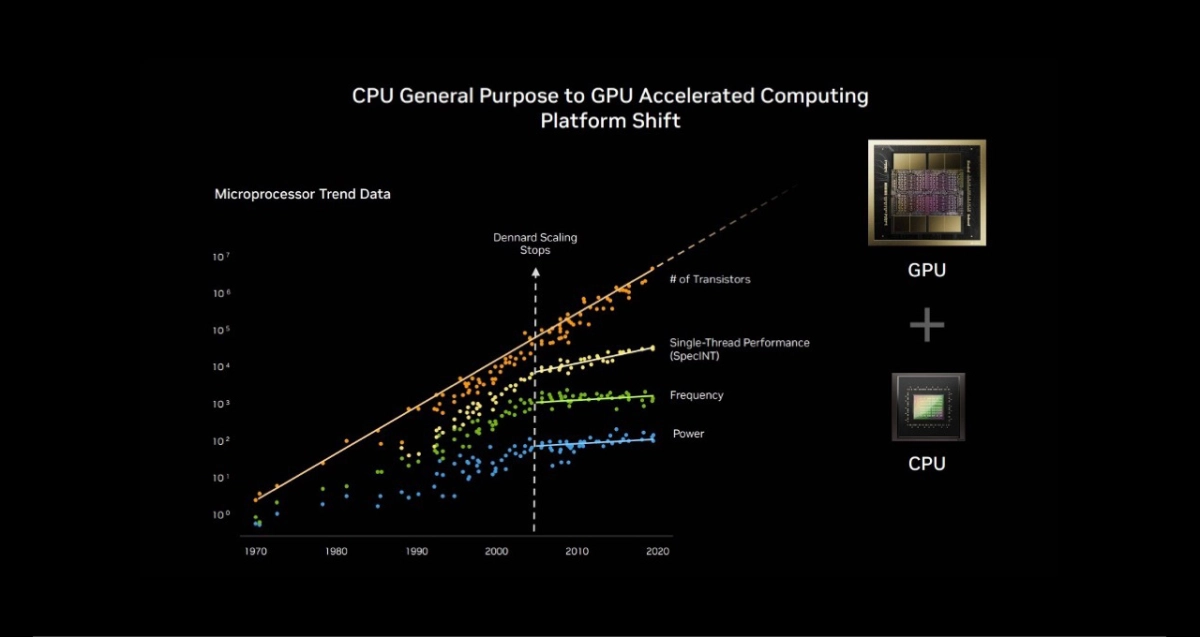

NVIDIA's accelerated computing platform is reshaping high-performance computing and artificial intelligence. It now leads supercomputing benchmarks once held by CPU-only systems, supporting advances in AI, science, business, and computing efficiency. The move from CPU-centric serial processing to GPU-powered parallel architectures marks the end of Moore's Law's unchallenged reign and the rise of a new computational paradigm.

The shift is visible in the rankings. Over 85% of the TOP100 supercomputers, a subset of the TOP500 list, now use GPUs, a historic transition from serial processing to massively parallel architectures. Ignited by AlexNet's 2012 demonstration of AI learning on gaming GPUs, this change has made exascale computing practical by delivering a 4.5x energy efficiency advantage over CPU-only systems. NVIDIA's record-breaking Graph500 result, which processed 2.2 trillion vertices with just 8,192 H100 GPUs compared with 150,000 CPUs for the next best result, points to a substantial total cost of ownership advantage. The evolution extends beyond hardware to a co-designed full-stack platform of networking, memory, storage, orchestration, and the CUDA-X software ecosystem, where significant speedups materialize.
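To put the Graph500 comparison in proportion, the processor counts quoted above can be turned into a simple ratio. This is a back-of-the-envelope sketch only: it compares device counts and makes no claim about cost, power, or per-device performance, none of which the article provides.

```python
# Processor counts quoted in the Graph500 comparison above.
gpus = 8_192      # H100 GPUs in the NVIDIA result
cpus = 150_000    # CPUs in the next best result

# How many CPUs the other system used for every GPU in the NVIDIA run.
ratio = cpus / gpus
print(f"{ratio:.1f}x fewer processors")  # prints "18.3x fewer processors"
```

A device-count ratio is only a rough proxy for total cost of ownership, which also depends on price, power draw, cooling, and networking.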

This shift to GPUs underpins AI's future, which is guided by three scaling laws: pre-training, post-training, and test-time compute. Pre-training scaling established that increasing dataset size, parameter count, and compute predictably improves model performance, which is feasible only with GPU acceleration; NVIDIA posted the highest performance across all MLPerf Training benchmarks. After a foundation model is created, post-training refines it through techniques such as reinforcement learning from human feedback, pruning, and distillation, and can demand compute rivaling pre-training itself. Test-time scaling, the newest and potentially most transformative law, powers mixture-of-experts architectures and agentic AI; it requires dynamic, recursive compute that will sharply increase demand for inference infrastructure. Together, these laws define a complete AI lifecycle, with NVIDIA accelerated computing powering every stage from initial learning to reasoning and deployment.
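What "predictably" means for pre-training scaling can be illustrated with a toy curve. Scaling-law studies commonly model loss as a power law in compute; the sketch below assumes that form. The function name `loss` and the constants `a` and `alpha` are hypothetical, chosen only for illustration, and are not taken from the article or from any measured model.

```python
import math

def loss(compute, a=10.0, alpha=0.05):
    """Hypothetical power-law loss curve: a * compute ** -alpha.

    `a` and `alpha` are illustrative constants, not measured values.
    """
    return a * compute ** -alpha

# Compute budgets spaced one order of magnitude apart (arbitrary units).
budgets = [1e18, 1e19, 1e20, 1e21]
losses = [loss(c) for c in budgets]

# Under a power law, every 10x increase in compute multiplies the loss
# by the same constant factor, 10 ** -alpha. That is the "predictable" part.
ratios = [losses[i + 1] / losses[i] for i in range(len(losses) - 1)]

for c, l in zip(budgets, losses):
    print(f"compute={c:.0e}  loss={l:.3f}")
print("per-decade loss ratios:", [round(r, 4) for r in ratios])
```

The constant per-decade ratio is what would let a lab extrapolate from small training runs to large ones, under the assumption that the power-law form holds over the range of interest.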

From Virtual AI to Physical Intelligence

NVIDIA accelerated computing is already transforming industries, enabling hyperscalers to move from classical machine learning to generative AI, particularly in search and recommender systems. With CUDA GPUs, pre-training scaling lets models learn from massive datasets of clicks and preferences, while post-training fine-tunes personalization for specific domains; even a 1% gain in relevance can yield billions in sales. Generative AI, supported by NVIDIA platforms across all leading models and 1.4 million open-source variants, is reshaping robotics, autonomous vehicles, and software-as-a-service. The next step is agentic AI: systems that perceive, reason, plan, and act autonomously, functioning as digital colleagues that accelerate productivity in sectors from legal research to logistics. This is a foundational shift that moves AI from a purely virtual technology into tangible, real-world applications.

The ultimate frontier is physical AI, which embodies intelligence in robots of every form and demands a specialized computing stack. That stack comprises NVIDIA DGX GB300 for training complex vision-language-action (VLA) models, NVIDIA RTX PRO for rigorous simulation and validation in a virtual world built on Omniverse, and Jetson Thor for real-time execution of reasoning VLA models. A breakthrough moment for robotics is anticipated within years, with autonomous mobile robots, collaborative robots, and humanoids poised to disrupt manufacturing, logistics, and healthcare. Morgan Stanley projects 1 billion humanoid robots and $5 trillion in revenue by 2050, underscoring the economic implications of integrating AI into the physical economy. According to the announcement, NVIDIA CEO Jensen Huang emphasizes that AI is no longer merely a tool but a force performing work, poised to transform every one of the world's $100 trillion markets. In his view, this initiates a virtuous cycle that redefines the entire computing stack.

The dominance of NVIDIA accelerated computing in supercomputing and its role in the three scaling laws signify a re-architecture of computing itself. This trajectory promises not just incremental improvements but breakthroughs across disciplines, from drug discovery and fusion simulation to the widespread deployment of intelligent, autonomous physical systems. The era of AI-driven supercomputing, powered by NVIDIA's full-stack platform, is here, altering how industries operate and how humans interact with technology.