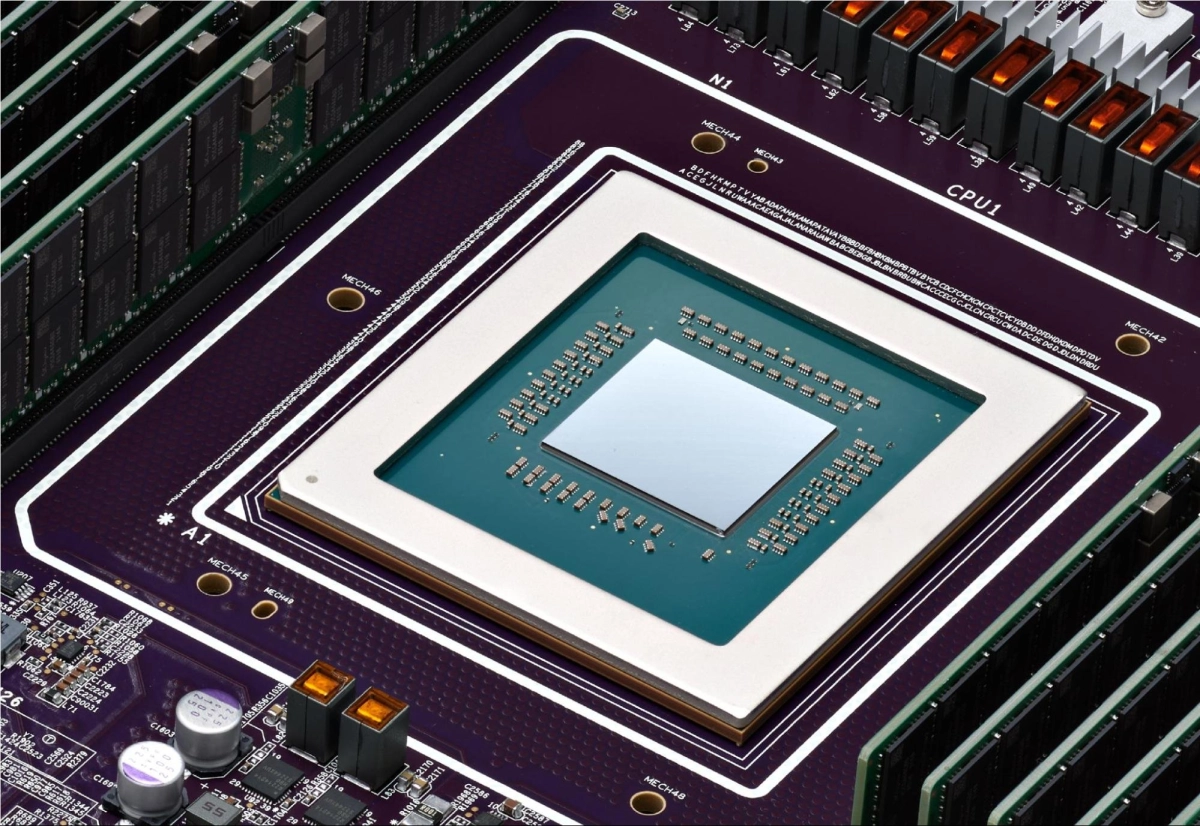

Google is making a bold play for the future of artificial intelligence, announcing its seventh-generation Tensor Processing Unit, dubbed Ironwood, alongside new Arm-based Axion virtual machines. Unveiled on November 6, 2025, the custom-designed silicon is Google's latest salvo in the escalating compute arms race, specifically targeting the burgeoning "age of inference," where the focus shifts from merely training massive AI models to efficiently serving them at scale.

The company's VP/GM of AI & Infrastructure, Amin Vahdat, and VP & GM of Compute and AI Infrastructure, Mark Lohmeyer, highlighted a critical industry pivot. As models like Gemini and Claude become ubiquitous, the challenge isn't just building them, but powering the "useful, responsive interactions" that define modern AI applications. This new era, characterized by constantly evolving model architectures, the rise of complex agentic workflows, and near-exponential growth in demand for compute, calls for a fresh approach to infrastructure. Google believes its vertically integrated, custom silicon strategy is the answer.

Ironwood TPUs are positioned as the workhorse for these demanding workloads, from large-scale training and reinforcement learning to high-volume, low-latency AI inference. Google claims a staggering 10x peak performance improvement over TPU v5p and more than 4x better performance per chip than TPU v6e (Trillium) for both training and inference. Google calls Ironwood its "most powerful and energy-efficient custom silicon to date," and says it will become generally available in the coming weeks.

The scale Google is talking about is immense. An Ironwood superpod can link up to 9,216 chips over Inter-Chip Interconnect (ICI) networking running at a mind-boggling 9.6 Tb/s, with access to 1.77 petabytes of shared High Bandwidth Memory (HBM). This isn't just about raw power; it's about overcoming data bottlenecks for the most demanding models. Reliability is also a key focus, with Optical Circuit Switching (OCS) technology designed to dynamically reroute around interruptions, ensuring near-constant uptime even at gigawatt scale.
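To put the superpod figures in per-chip terms, here is a rough back-of-the-envelope sketch. It assumes decimal (SI) units and an even split of the shared HBM across all chips; neither assumption is spelled out in the announcement, and the function names are purely illustrative.

```python
# Back-of-the-envelope figures derived from the superpod numbers quoted above.
# Assumptions (not from the announcement): SI units, i.e. 1 PB = 10**15 bytes
# and 1 GB = 10**9 bytes, and HBM spread evenly across every chip.

CHIPS_PER_SUPERPOD = 9_216
SHARED_HBM_PB = 1.77
ICI_TBIT_PER_S = 9.6


def hbm_per_chip_gb(total_pb: float, chips: int) -> float:
    """Average HBM per chip in GB, assuming an even split across chips."""
    total_bytes = total_pb * 10**15
    return total_bytes / chips / 10**9


def tbit_to_tbyte(tbit_per_s: float) -> float:
    """Convert terabits per second to terabytes per second (8 bits per byte)."""
    return tbit_per_s / 8


if __name__ == "__main__":
    per_chip = hbm_per_chip_gb(SHARED_HBM_PB, CHIPS_PER_SUPERPOD)
    print(f"HBM per chip: ~{per_chip:.0f} GB")
    print(f"ICI bandwidth: {tbit_to_tbyte(ICI_TBIT_PER_S):.1f} TB/s")
```

Under those assumptions, the shared pool works out to roughly 192 GB of HBM per chip, and 9.6 Tb/s is equivalent to 1.2 TB/s.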

Crucially, Ironwood isn't a standalone offering. It's a core component of Google's AI Hypercomputer, an integrated supercomputing system that weaves together compute, networking, storage, and software. This holistic approach, Google argues, is what delivers system-level performance and efficiency, and the company cites an IDC report claiming a 353% three-year ROI for AI Hypercomputer customers. The software stack supporting Ironwood is equally critical, with enhancements to open-source frameworks like MaxText and new features in Google Kubernetes Engine (GKE) and vLLM aimed at maximizing efficiency and reducing latency for inference workloads.
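For a sense of what a 353% ROI means in dollar terms, the sketch below applies the textbook definition, ROI = (benefit − cost) / cost. This is an assumption: IDC's methodology may differ (for example, by discounting future cash flows), and the $1 million investment is a hypothetical figure chosen only for illustration.

```python
# Illustrative only: textbook ROI = (total benefit - investment) / investment.
# The investment amount is hypothetical, and IDC's actual methodology
# (for example, discounting) may differ from this simple definition.

def net_gain(investment: float, roi_percent: float) -> float:
    """Net gain implied by an ROI percentage under the textbook definition."""
    return investment * roi_percent / 100


def total_benefit(investment: float, roi_percent: float) -> float:
    """Gross benefit: the original investment plus the net gain."""
    return investment + net_gain(investment, roi_percent)


if __name__ == "__main__":
    investment = 1_000_000  # hypothetical three-year spend, in dollars
    roi = 353               # percent, as quoted from the IDC report
    print(f"Net gain:      ${net_gain(investment, roi):,.0f}")
    print(f"Total benefit: ${total_benefit(investment, roi):,.0f}")
```

Read this way, a 353% ROI means each dollar invested returns $3.53 in net gain over three years, or $4.53 in total benefit, rather than a 3.53x gross return.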

The Axion Complement

While Ironwood handles the heavy lifting of AI acceleration, Google isn't neglecting the general-purpose compute that underpins these intelligent applications. The company is expanding its custom Arm-based Axion portfolio with the N4A virtual machine, now in preview, and C4A metal, a bare-metal instance that is coming soon. N4A promises up to 2x better price-performance than comparable x86-based VMs, making it ideal for microservices, containerized applications, and data analytics – the operational backbone of AI workflows.

This dual-pronged strategy underscores Google's commitment to controlling the entire stack, from silicon to software. By offering both specialized AI accelerators like Ironwood and efficient general-purpose CPUs like Axion, Google aims to provide a comprehensive, cost-effective, and highly performant platform for the evolving demands of AI development and deployment. The message is clear: Google wants to be the default infrastructure provider for the next generation of AI, from the largest frontier models to the smallest agentic workflows.