The landscape of large language models is undergoing a significant shift as AI2 introduces Bolmo, a new family of byte-level language models. Bolmo directly challenges the long-standing dominance of subword tokenization by offering a practical, high-performing alternative, and it marks a crucial step toward making byte-level language models a viable choice for developers and researchers rather than a mere research curiosity.

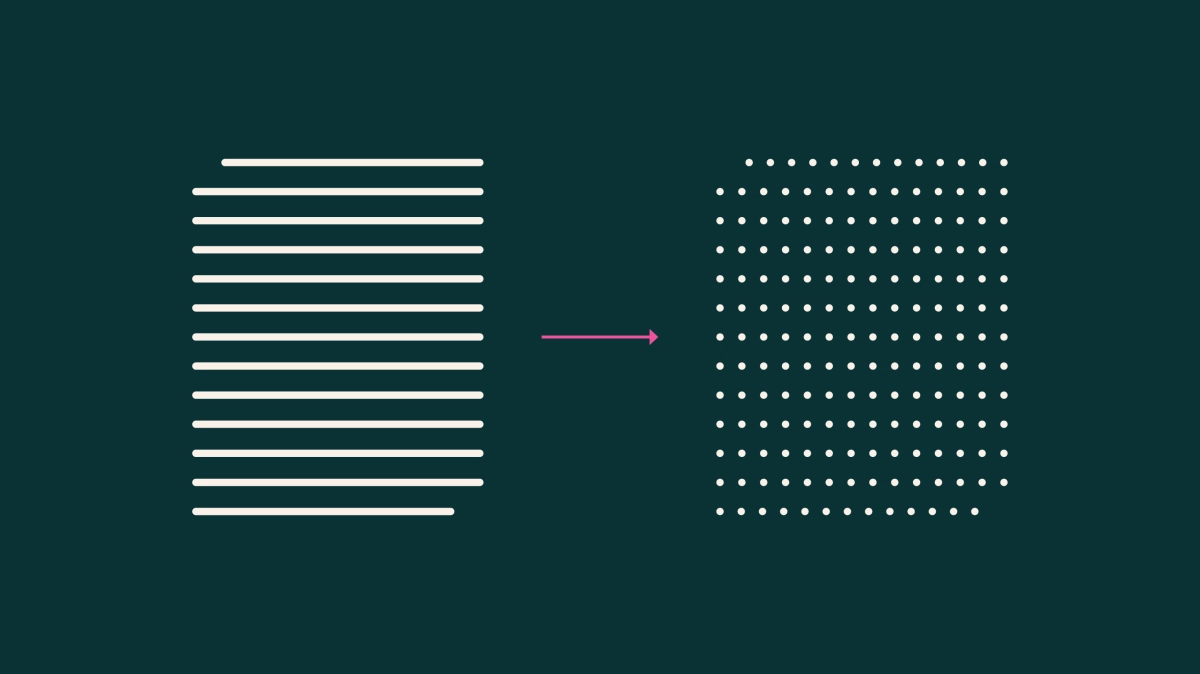

For years, nearly all advanced language models have relied on subword tokenization, breaking text into opaque chunks like "▁inter" or "national". While successful, this approach carries notable drawbacks, including poor character-level understanding, awkward handling of rare words and whitespace, and a rigid vocabulary that struggles across diverse languages. Byte-level models, which operate directly on raw UTF-8 bytes (a fixed alphabet of 256 values), promise to resolve these issues because they see every character, space, and symbol directly, but their high training costs have kept them from widespread adoption. According to the announcement, building competitive byte-level models traditionally meant training from scratch, a costly and time-consuming endeavor whose results lagged behind the rapid advances in subword models.
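To make the contrast concrete, here is a minimal Python sketch of what a byte-level model consumes. It uses only the standard library's UTF-8 codec; the helper names are hypothetical and this is not Bolmo's code. Every string becomes a sequence of integers in 0..255, so nothing is ever out of vocabulary, and a character-level question such as counting letters reduces to a search over those bytes.

```python
def to_bytes(text: str) -> list[int]:
    """Encode text as raw UTF-8 byte values, the input a byte-level model consumes."""
    return list(text.encode("utf-8"))


def from_bytes(ids: list[int]) -> str:
    """Decode byte values back to text; malformed sequences become U+FFFD instead of raising."""
    return bytes(ids).decode("utf-8", errors="replace")


def count_char(text: str, ch: str) -> int:
    """Answer a character-level question directly on the bytes.

    Searching for the character's full UTF-8 byte pattern works for ASCII and
    multi-byte characters alike, because UTF-8 is self-synchronizing: a complete
    character's encoding can never match starting in the middle of another one.
    """
    return text.encode("utf-8").count(ch.encode("utf-8"))


ids = to_bytes("naïve")
print(ids)                            # [110, 97, 195, 175, 118, 101]
print(len("naïve"), len(ids))         # 5 6  (five characters, six bytes)
print(from_bytes(ids))                # naïve
print(count_char("strawberry", "r"))  # 3
```

Note that "ï" occupies two bytes (195, 175), which is why byte sequences are longer than character sequences; grouping bytes back into larger units is exactly the job of the patching stage in Bolmo's architecture.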

Bolmo tackles this challenge head-on by "byteifying" existing Olmo 3 models rather than training entirely new ones. This innovative strategy reuses the robust transformer backbone and capabilities already developed, retrofitting them into a flexible byte-level architecture through a relatively short additional training run. The result is a family of fully open byte-level language models, Bolmo 7B and Bolmo 1B, that can match or even surpass state-of-the-art subword models across various tasks, all while remaining practical to train and deploy. This approach significantly lowers the barrier to entry for developing and utilizing byte-level systems, opening new possibilities for more nuanced text processing.

Performance and Practicality Redefined

Bolmo is a latent tokenizer language model: it processes text as bytes while leveraging the power of the Olmo 3 transformer. Its pipeline has three stages: a lightweight local encoder embeds each UTF-8 byte, a non-causal boundary predictor groups the bytes into variable-length "patches," and the patches are fed into the global Olmo 3 transformer. This design lets Bolmo inherit the scale and sophistication of the Olmo 3 backbone, extending its capabilities into byte space without discarding prior investment in data curation or architecture. Training also proceeds in two stages: the transformer is first frozen while the new byte-level components learn to work with it, then unfrozen. The frozen first stage keeps training efficient, and the second lets the model fully exploit byte-level information.
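The three-stage pipeline can be sketched in plain Python. This is a toy under stated assumptions, not Bolmo's implementation: the hand-crafted `toy_byte_features` function stands in for the learned mLSTM local encoder, the boundary score uses an H-Net-style cosine-dissimilarity rule between each byte and the next (that one-byte lookahead is what makes it non-causal), and patches are mean-pooled. All function names and the 0.25 threshold are hypothetical.

```python
import math

Vec = tuple[float, float]


def toy_byte_features(b: int) -> Vec:
    """Hypothetical stand-in for the learned mLSTM local encoder.

    Maps each byte to a fixed 2-d vector by coarse class so the demo is
    deterministic; non-ASCII bytes (>= 128) are treated as letter-like.
    """
    ch = chr(b) if b < 128 else "x"
    if ch.isalnum():
        return (1.0, 0.0)
    if ch.isspace():
        return (0.0, 1.0)
    return (0.7071, 0.7071)  # punctuation and other symbols


def cosine(u: Vec, v: Vec) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def boundary_scores(h: list[Vec]) -> list[float]:
    """Non-causal boundary predictor (assumed H-Net-style rule).

    Score t is (1 - cos(h[t], h[t+1])) / 2, so it looks one byte ahead.
    The final byte always closes a patch (score 1.0).
    """
    scores = [(1.0 - cosine(h[t], h[t + 1])) / 2.0 for t in range(len(h) - 1)]
    return scores + [1.0]


def patchify(text: str, threshold: float = 0.25) -> tuple[list[str], list[Vec]]:
    """Embed bytes, group them into patches, and mean-pool each patch.

    The pooled vectors are what would be passed to the global (Olmo 3) transformer.
    """
    data = text.encode("utf-8")
    if not data:
        return [], []
    h = [toy_byte_features(b) for b in data]
    patches, pooled, start = [], [], 0
    for t, score in enumerate(boundary_scores(h)):
        if score > threshold:  # a patch ends after byte t
            seg = h[start:t + 1]
            patches.append(data[start:t + 1].decode("utf-8", errors="replace"))
            pooled.append(tuple(sum(col) / len(seg) for col in zip(*seg)))
            start = t + 1
    return patches, pooled


patches, pooled = patchify("hello world")
print(patches)  # ['hello', ' ', 'world']
print(pooled)   # [(1.0, 0.0), (0.0, 1.0), (1.0, 0.0)]
```

With these toy features, the 11-byte input "hello world" becomes three patches, so the expensive global transformer would run three steps instead of eleven. In the real model the encoder and boundary predictor are learned, so patch boundaries need not follow whitespace.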

The performance gains are most evident where byte-level understanding is critical. Bolmo 7B performs comparably to the original subword Olmo 3 7B on broad benchmarks but substantially outperforms it on character-focused tasks such as CUTE and EXECUTE, improving accuracy by nearly twenty points on character aggregates. Bolmo 7B is also the strongest overall among byte-level models of similar size across code, math, and character-level understanding. Beyond raw performance, Bolmo addresses the common concern about speed: its mLSTM-based local models and dynamic pooling achieve competitive decoding speeds, and its dynamic hierarchical setup turns compression into a "toggleable knob." Users can smoothly trade speed against fidelity without redesigning the model. A subword model, by contrast, can only compress text further by enlarging its vocabulary, which grows its embedding and output-softmax layers until they become a bottleneck.
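The compression knob can be illustrated with a small sketch. Assuming, for illustration only, that the knob is a threshold on the boundary predictor's scores (the exact mechanism in Bolmo may differ), raising the threshold yields fewer, longer patches and therefore fewer global-transformer steps per byte. The function names are hypothetical and the scores are made-up values for a 10-byte input, not model outputs.

```python
def patch_lengths(scores: list[float], threshold: float) -> list[int]:
    """Byte count of each patch when a patch closes wherever score > threshold.

    scores[t] is a hypothetical boundary score for "a patch ends after byte t";
    the last byte always closes the final patch.
    """
    lengths, current = [], 0
    for t, score in enumerate(scores):
        current += 1
        if score > threshold or t == len(scores) - 1:
            lengths.append(current)
            current = 0
    return lengths


def bytes_per_patch(scores: list[float], threshold: float) -> float:
    """Compression ratio: input bytes per global-transformer step."""
    lengths = patch_lengths(scores, threshold)
    return len(scores) / len(lengths) if lengths else 0.0


# Made-up boundary scores for a 10-byte input (illustrative only).
example_scores = [0.1, 0.2, 0.9, 0.1, 0.6, 0.3, 0.8, 0.1, 0.4, 0.95]

for threshold in (0.0, 0.25, 0.5, 0.85):
    lengths = patch_lengths(example_scores, threshold)
    ratio = bytes_per_patch(example_scores, threshold)
    print(f"threshold={threshold:.2f} patches={lengths} bytes/patch={ratio:.2f}")
```

With these scores, thresholds of 0.0, 0.25, 0.5, and 0.85 give 1.00, 1.67, 2.50, and 5.00 bytes per patch respectively, while the weights stay untouched; only the number of global steps changes.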

Perhaps one of the most impactful aspects for the industry is Bolmo's seamless integration into existing model ecosystems, which enables "zero-cost upgrades" for post-trained models. Applying task arithmetic, that is, adding the difference between the post-trained and base subword Olmo 3 transformer weights to Bolmo's weights, transfers instruction-following skills without any additional training. On the IFEval benchmark, a byteified Bolmo base model merged this way essentially matched the performance of the original post-trained Olmo 3 checkpoint. This suggests a powerful paradigm: once a strong open model is byteified, much of its surrounding ecosystem, including fine-tunes and RL runs, can be reused via lightweight weight merging, drastically reducing the effort and cost of developing byte-level applications.
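The merge itself is simple arithmetic, `bolmo_post = bolmo_base + (olmo_post - olmo_base)`, applied to the transformer weights the two model families share. The sketch below is a hypothetical, dependency-free illustration that stores weights as dicts of flat float lists; the tensor names and values are invented, and a real merge would operate on framework tensors loaded from checkpoints.

```python
Weights = dict[str, list[float]]


def task_vector(post_trained: Weights, base: Weights) -> Weights:
    """Per-tensor difference (post-trained minus base) of a subword model."""
    return {name: [p - b for p, b in zip(post_trained[name], base[name])] for name in base}


def apply_task_vector(target: Weights, delta: Weights, scale: float = 1.0) -> Weights:
    """Add `scale * delta` to every target tensor that has a matching name.

    Tensors only in `target` (byte-level modules) are copied unchanged;
    tensors only in `delta` (no byte-level counterpart) are ignored.
    """
    merged: Weights = {}
    for name, values in target.items():
        if name in delta:
            if len(delta[name]) != len(values):
                raise ValueError(f"shape mismatch for {name}")
            merged[name] = [v + scale * d for v, d in zip(values, delta[name])]
        else:
            merged[name] = list(values)
    return merged


# Hypothetical toy checkpoints; names and values are invented for illustration.
olmo_base = {"transformer.w": [1.0, 2.0], "subword_embed.w": [0.1, 0.2]}
olmo_post = {"transformer.w": [1.5, 1.0], "subword_embed.w": [0.1, 0.3]}
bolmo_base = {"transformer.w": [1.25, 2.5], "local_encoder.w": [0.3]}

delta = task_vector(olmo_post, olmo_base)
bolmo_post = apply_task_vector(bolmo_base, delta)
print(bolmo_post)  # {'transformer.w': [1.75, 1.5], 'local_encoder.w': [0.3]}
```

Iterating over the target's tensors is the key design choice here: the invented subword-embedding tensor, which has no byte-level counterpart, is dropped, while the byte-level local encoder passes through unchanged. The `scale` parameter is a common task-arithmetic option for dialing the transferred behavior up or down.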

Bolmo represents a pivotal moment for byte-level language models, transforming them from a theoretical advantage into a practical, competitive reality. By demonstrating a reproducible blueprint for "byteifying" strong subword models, AI2 has opened new avenues for fine-grained text control, enhanced multilingual support, and more efficient model development. This innovation promises to accelerate research into richer tokenization behaviors and scalable byte-level systems, ultimately leading to more robust and adaptable language models for a wider range of applications.